Meta’s rolling out some new protective elements for teen users, including restrictions on live streaming on IG, advanced protection within DMs, an expansion of its teen accounts to Facebook and Messenger, and more.

The updates will ensure safer experiences for teen users, and are a positive step. And with Meta continuing to come under scrutiny over the risks that its apps pose to teens, it could also help to demonstrate its commitment to improvement on this front.

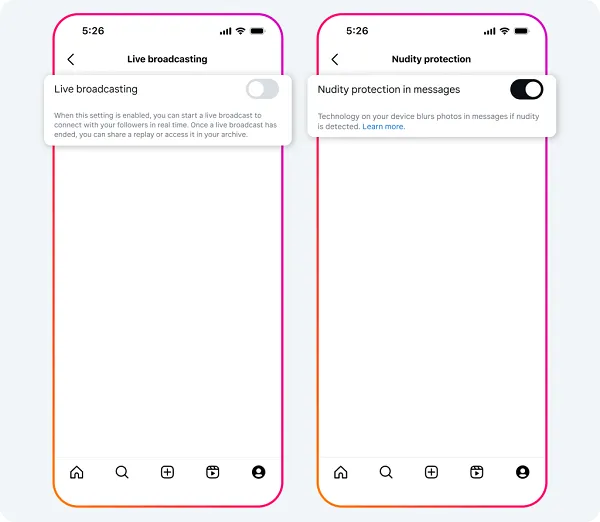

First off, Instagram’s implementing new restrictions for live-streaming and its DM protection elements, which will mean that parents will now need to approve any changes to the default settings.

As explained by Instagram:

“In addition to the existing built-in protections offered by Teen Accounts, we’re adding new restrictions for Instagram Live and unwanted images in DMs. With these changes, teens under 16 will be prohibited from going Live unless their parents give them permission to do so. We’ll also require teens under 16 to get parental permission to turn off our feature that blurs images containing suspected nudity in DMs.”

Live-streams can be a risky proposition for young users, with the real-time nature of the engagement leaving no room for filtering tools, which leaves them open to exposure and manipulation. As such, this is a good update, which will help to ensure that parents maintain more awareness of their teen’s online activity.

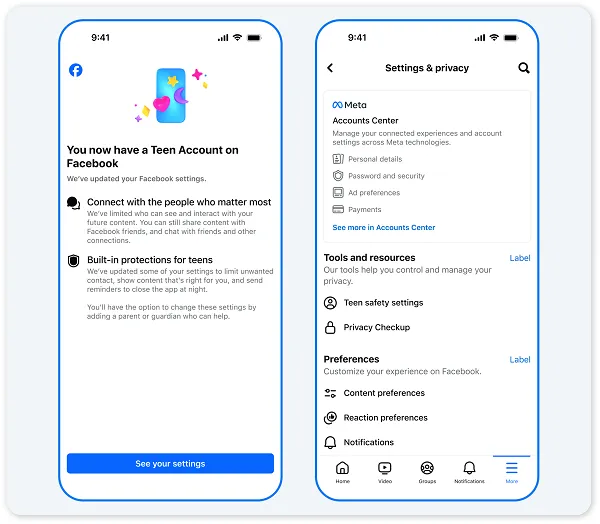

Meta’s also making its Teen Accounts available on Facebook and Messenger, replicating the teen profiles that it launched on Instagram last year.

“Teen Accounts on Facebook and Messenger will offer similar, automatic protections to limit inappropriate content and unwanted contact, as well as ways to ensure teens’ time is well spent. We’ll begin rolling Facebook and Messenger Teen Accounts out to teens in the US, UK, Australia and Canada and will bring the experience to teens in other regions soon.”

The changes are the latest safety update for teen users, with IG adding enhanced privacy elements, messaging restrictions, sleep mode, harmful content exposure limits, and more last year alone.

And Instagram says that its teen protection features are having an impact.

“Since making these changes, 97% of teens aged 13-15 have stayed in these built-in restrictions, which we believe offer the most age-appropriate experience for younger teens.”

So while teens can opt out of some of these elements, the data shows that they’re not doing so, with Instagram’s teen accounts also giving parents more oversight of their child’s app use.

So why is Meta making these changes now?

Well, ideally, you’d want to assume that it’s to ensure teen wellbeing and safety, but given that Meta is generally more focused on, well, what benefits Meta, I would view these with a more skeptical eye.

Last year, the U.S. Surgeon General called for cigarette-like labels on social media to warn of the potential mental health risks, while the Senate also passed the Kids Online Safety Act to impose more stringent safety and privacy requirements for children and teenagers on social media.

In August, a teenager filed a $5 million lawsuit against Meta over its implementation of addictive algorithmic features, while a range of lawsuits have also been filed by the attorneys general of 45 U.S. states, accusing Meta of unfairly targeting teenagers and children on Instagram and Facebook, and of using deceptive tactics to play down hazards.

Internationally, several regions are also looking to increase the age at which teenagers are able to access social media due to concerns about its negative impacts, and with all of these concerns in mind, it’s hard to assume that Meta is simply looking to do what’s right.

But whatever the reason, based on the rising evidence that points to the harms caused by social media platforms, this does seem like a positive step. Though whether it will be enough to stem the tide against Zuck and Co. on this front remains to be seen.

It remains a significant area of concern, and with more and more academic research highlighting the links between social media use and mental health impacts, it also raises more questions about the push for innovation, and the “move fast and break things” approach that Silicon Valley leaders generally prefer to take.

The reasons for this are market forces and international competition, and the need for U.S. companies to lead the way on all fronts. But at the same time, accelerating development means overlooking the risks, while it also provides direct incentives for platforms with mass reach and influence to ignore or obfuscate such concerns in the name of progress.

Sadly, we’re now seeing the exact same thing happen in the AI race, as well as in VR development, with American tech platforms looking to push back on regulations to get their latest innovations out to the public before anybody else. But what are the mental health impacts of facilitating human relationships with digital beings? What will be the eventual harms caused by AI bots engaging with user posts in social apps?

Will VR environments be more harmful for mental health, given their more immersive nature?

At a guess, I would say that these developments will end up having a negative impact on many users, just as social media has, and even if you can argue that the positives do outweigh the harms, it’s still the harms that should be getting more focus.

So, yes, this latest teen safety update is a positive, but it’s not necessarily a voluntary move from Meta, and we still have a long way to go in factoring in such concerns versus accelerated development.