Google has announced a heap of new updates at its annual I/O conference, including a range of AI tools, ad updates, shopping advances, the evolution of Search, and more.

Here’s a look at the most digital marketing-relevant updates from Google’s big event.

Some of the most interesting announcements of the day relate to Google Shopping, and the AI elements that Google is adding to enhance the online shopping experience.

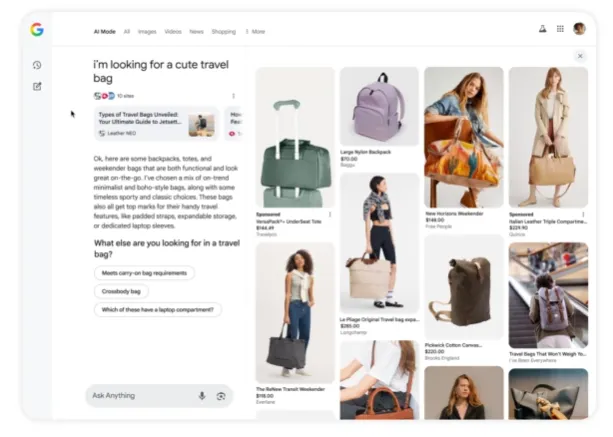

Google says that it will soon launch a new shopping experience in AI Mode, which will provide more assistance in narrowing down your product search.

As you can see in this example, Google’s latest updates to its AI-powered shopping experience will give you a broader overview of results, and enable you to ask follow-up questions based on its original findings to home in on the right product matches.

As per Google:

“Say you tell AI Mode you’re looking for a cute travel bag. It understands that you’re looking for visual inspiration and so it will show you a beautiful, browsable panel of images and product listings personalized to your tastes. If you want to narrow your options down to bags suitable for a trip to Portland, Oregon in May, AI Mode will start a query fan-out, which means it runs several simultaneous searches to figure out what makes a bag good for rainy weather and long journeys, and then use those criteria to suggest waterproof options with easy access to pockets.”

That’ll make it easier to find more specific products that best align with your needs. It also means that brands will want to include as many relevant details along these lines as possible in their product listings to maximize discovery potential.
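For a rough sense of what a “query fan-out” involves, here’s a minimal Python sketch. It’s a toy illustration under stated assumptions, not Google’s implementation: `run_search`, `fan_out`, `suggest`, the `CATALOG` lookup, and the sample sub-queries and products are all hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for a search backend (NOT a Google API):
# maps each sub-query to the product criteria it surfaces.
CATALOG = {
    "travel bag rainy weather": ["waterproof"],
    "travel bag long journeys": ["easy-access pockets"],
}


def run_search(query: str) -> list[str]:
    """Return the criteria a single sub-query surfaces (toy lookup)."""
    return CATALOG.get(query, [])


def fan_out(sub_queries: list[str]) -> list[str]:
    """Run all sub-queries concurrently, then merge their criteria in order."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(run_search, sub_queries))
    merged: list[str] = []
    for criteria in results:
        for criterion in criteria:
            if criterion not in merged:  # drop duplicates, keep first-seen order
                merged.append(criterion)
    return merged


def suggest(products: list[dict], criteria: list[str]) -> list[str]:
    """Keep only the products whose listed features cover every criterion."""
    return [
        p["name"] for p in products
        if all(c in p["features"] for c in criteria)
    ]


# Hypothetical product listings, for illustration only.
PRODUCTS = [
    {"name": "Canvas tote", "features": ["easy-access pockets"]},
    {"name": "Trail duffel", "features": ["waterproof", "easy-access pockets"]},
]

criteria = fan_out(["travel bag rainy weather", "travel bag long journeys"])
matches = suggest(PRODUCTS, criteria)
```

In this sketch, only the listing that spells out both features makes it through the filter, which is the point for brands: details that are missing from a listing can’t be matched against.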

Google says that it’s bringing these advanced discovery elements to AI Mode users in the U.S. in the coming months.

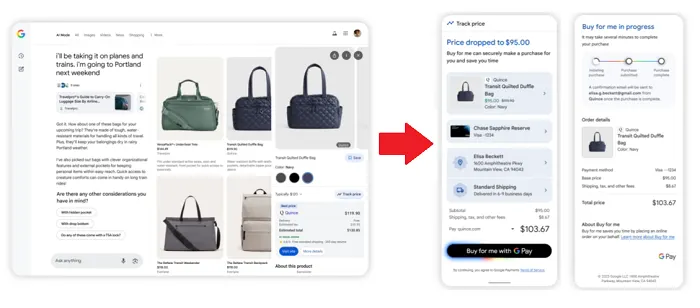

Google’s also looking to help shoppers get the product they want for the best price, with an updated “agentic checkout” option, which will buy a product for you if/when the price drops.

So now, you’ll be able to track price changes, and set an alert based on your preferred price (along with color preferences, sizing info, etc.). Then, when the price drops, Google will let you know, and will be able to buy the product for you immediately.

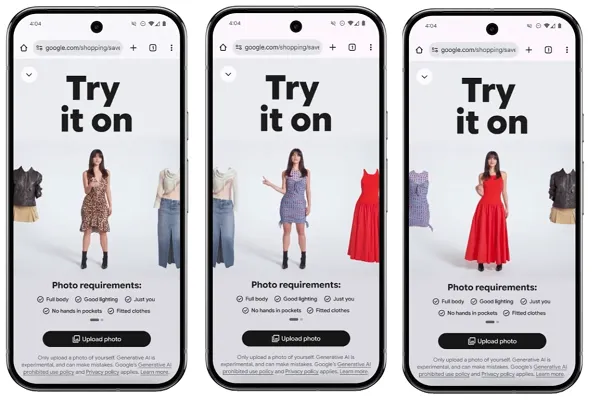

Google’s also adding an advanced virtual try-on process, which will make it easier to see how a product looks on you.

Based on a photo, Google will now be able to overlay alternate clothing options to give you a better idea of how they’ll look on you personally.

“It’s powered by a new custom image generation model for fashion, which understands the human body and nuances of clothing – like how different materials fold, stretch, and drape on different bodies. It preserves these subtleties when applied to poses in your photos. The result is a try-on experience that works with photos of you.”

Google says that this new “try on” experiment is rolling out in Search Labs in the U.S. today.

On another front, Google’s also unveiled new speech translation advances within Google Meet, which can now translate spoken language in near real time, while also matching the speaker’s tone and voice.

It’s also announced Google Beam, an AI-powered video communications platform which can transform 2D video streams into a 3D experience.

The system will use multiple cameras (six in total) to capture your scene, then use AI to merge this into a single replication, rendering you on a 3D lightfield display.

“It has near perfect head tracking, down to the millimeter, and at 60 frames per second, all in real-time. The result is a much more natural and deeply immersive conversational experience. In collaboration with HP, the first Google Beam devices will be available for early customers later this year.”

So it’s sort of like Google’s own metaverse-type experience, aiming to enhance digital connection with a more engaging replication of real-life meet-ups.

On Search, Google has previewed its latest advancements in “Project Mariner,” which incorporates web search into its Gemini chatbot, enabling the system to undertake tasks for you.

It’s also adding “AI Mode” to Google Search, which will incorporate more advanced reasoning, so that you can ask AI Mode longer and more complex queries.

So it’s like going direct to Google’s AI-generated Search summaries, which can provide more in-depth responses to your questions.

It’s also adding new deep research capabilities into AI Mode:

“Deep Search can issue hundreds of searches, reason across disparate pieces of information, and create an expert-level fully-cited report in just minutes, saving you hours of research.”

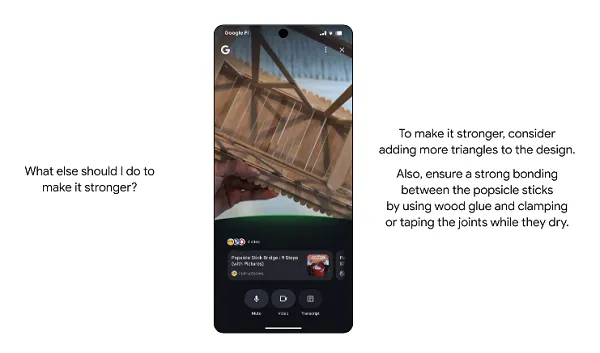

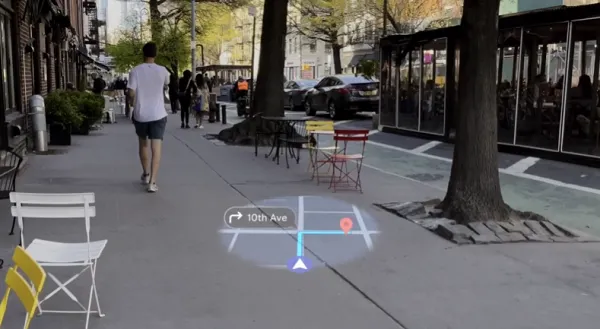

It’s also adding multimodal functionality into Search, so you can talk back-and-forth with Search about what you see in real time, using your camera.

Google’s also adding new elements to its AI generation models, which will soon be able to provide responses based on uploaded files, or on files accessed via Google Drive and Gmail. Using this info, it will be able to generate custom reports, infographics, and more, which could expedite your presentations and updates.

Google’s also announced updates to its Imagen 4 image generation model, and its Veo 3 video generation tools, which will now be able to produce even better visual outputs.

And it’s got a new tool called “Flow,” which will be able to create AI-generated films, incorporating all of Google’s visual generation tools.

Finally, Google’s also working on AI avatars, which will provide another way to present yourself in digital form, and could enable things like shopping streams with digital presenters.

Oh, and AR glasses:

Google’s working with Gentle Monster and Warby Parker to create stylish AR glasses, as it looks to stake its claim in the emerging AI-powered smart glasses market.

So that’s a heap of updates, with a range of implications, that will bring AI into many more elements of the Google experience.

They also bring new considerations for marketers, and it’s worth thinking through how each of these will impact your outreach process.