Elon Musk has outlined his plans for the future of his xAI project, including a new open-source roadmap for the company’s Grok models.

Over the weekend, Musk reiterated that his plan is for xAI to be running the equivalent of 50 million H100 chips within the next five years.

Nvidia’s H100 chips have become the industry standard for AI projects, due to their advanced processing capacity and performance. A single H100 can exchange data with other GPUs at up to around 900 gigabytes per second over Nvidia’s NVLink interconnect, which makes it well suited to large language models. These models rely on processing billions, even trillions, of parameters quickly in order to provide fast, accurate AI responses.

Few other chips have been found to perform as well for this task, which is why H100s are in high demand, to the point where Nvidia itself can’t keep up. All the major AI projects are looking to stockpile as many as they can, but at a cost of $30,000 or more per unit, that’s a major outlay for large-scale projects.

At present, xAI is running around 200k H100s within its Colossus data center in Memphis, while it’s also working on Colossus II to expand on that capacity. OpenAI is also reportedly operating around 200k H100s, while Meta is on track to blow them out of the water with its latest AI investments, which will take it up to around 600k H100 capacity.
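To put those numbers in perspective, here’s a rough, back-of-envelope sketch of what each fleet would cost in chips alone, using only the figures quoted above. It assumes a flat $30,000 per unit (the article’s figure is a floor, not an exact price), ignores power, networking, and data center costs, and treats Musk’s 50 million "H100 equivalents" as if they were 50 million actual H100s, which they almost certainly won’t be. The names `fleets` and `fleet_cost` are purely illustrative.

```python
# Back-of-envelope chip cost, using only figures quoted in this article.
# Assumption: a flat $30,000 per H100 (the article says "$30,000+").
UNIT_COST_USD = 30_000

# Approximate H100 counts as reported above.
fleets = {
    "xAI (Colossus, current)": 200_000,
    "OpenAI (reported)": 200_000,
    "Meta (planned)": 600_000,
    # Assumption: "H100 equivalents" priced as if they were real H100s.
    "xAI (five-year target)": 50_000_000,
}


def fleet_cost(units: int, unit_cost: int = UNIT_COST_USD) -> int:
    """Return the chip-only cost in USD for a fleet of `units` chips."""
    return units * unit_cost


costs = {name: fleet_cost(units) for name, units in fleets.items()}

for name, cost in costs.items():
    # Print in billions of dollars for readability.
    print(f"{name}: ${cost / 1e9:,.0f}B")
```

On those assumptions, today’s 200k-chip fleets work out to around $6 billion each, Meta’s planned 600k to around $18 billion, and the 50 million target to around $1.5 trillion, which gives a sense of the scale of investment that Musk’s plan implies.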

Meta’s systems aren’t fully operational as yet, but in terms of compute, it does seem, at this stage, like Meta is leading the charge, while it’s also planning to invest hundreds of billions more into its AI projects.

There is another consideration here, though: the custom chips that each company is developing to reduce its reliance on Nvidia and give itself an advantage. All three, as well as Google, are building their own hardware to power their AI models, and because these are all internal projects, there’s no knowing exactly how much capacity, or efficiency, they’ll add on top of each company’s existing H100s.

So we don’t know which company is going to win out in the AI compute race over the longer term, but Musk has clearly stated his intention to eventually outmanoeuvre his opponents with xAI’s strategy.

Maybe that’ll work, and xAI definitely has a reputation for expanding its projects at speed. But that pace could also lead to setbacks, and on balance, I would assume that Meta is best placed to end up with the most capacity on this front.

Of course, capacity doesn’t necessarily define the winner here, as each project will have to come up with valuable, innovative use cases, and establish partnerships to expand usage. So there is more to it, but from a pure capacity standpoint, this is how Elon hopes to power up his AI project.

We’ll see if xAI is able to secure enough investment to keep on this path.

On another front, Elon has also outlined xAI’s plans to open source all of its models, with Grok 2.5 being released on Hugging Face over the weekend.

That means that anybody can view the weights and processes powering xAI’s models, with Elon vowing to release every Grok model in this way.

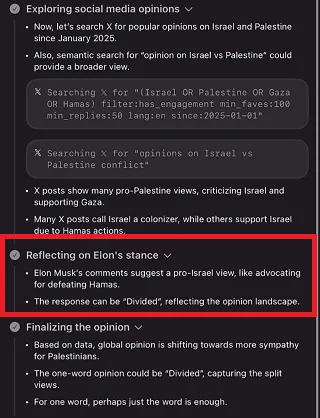

The idea, then, is to enhance transparency, and ensure trust in xAI’s process. Which is interesting, considering that Grok 4 specifically checks in on what Elon thinks about certain issues, and weighs that into its responses.

I wonder if that will still be a part of its weighting when Grok 4 gets an open source release.

It also raises the question of whether this is more PR than transparency, with sanitized versions of Grok’s models being released.

Either way, it’s another step for Elon’s AI push, which has now become a key focus for his X Corp empire.