Meta has shared a new overview of its invisible watermarking process for video content, which embeds information about how a video was created in a form that can’t be easily removed from the content itself.

In other words, if you create a video with Meta’s tools, or post one to Facebook or IG, it will now be labelled with back-end identifiers that you can’t see, but that record key information about the video itself, including:

- Who originally created and/or posted the video

- Whether AI tools were used in its creation

- What tools were used to create the content

That could be very important information to have, because, as Meta’s Wes Castro notes:

“We’re beyond the era of mangled fingers and obvious giveaways that something’s AI-generated. So how can we infer if something is real or say Gen AI content?”

Indeed, the influx of AI-generated content is so significant that some users are now calling for ways to filter AI content out of their social media feeds entirely, in order to avoid misleading, unrealistic depictions.

Pinterest recently added an option to do just that, but the limitation is that each platform first needs a way to identify AI-generated material. That’s where watermarking comes in, though, as Meta notes:

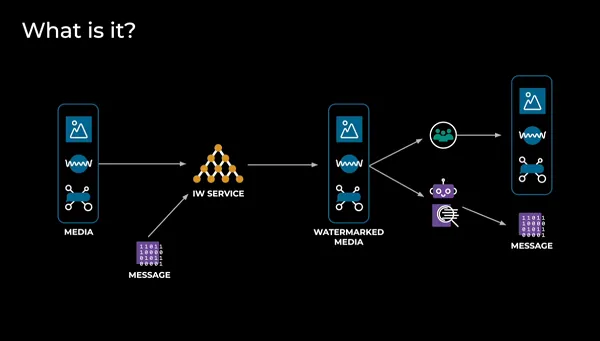

“Traditional methods such as visual watermarks (which can be distracting) or metadata tags (which can be lost if a video is edited or re-encoded) do not address these challenges adequately and robustly. Due to its persistence and imperceptibility, invisible watermarking presents a superior alternative.”
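To make the robustness point in that quote more concrete, below is a minimal sketch of a classic spread-spectrum watermark. This is a generic textbook technique, not Meta’s actual method, which isn’t detailed here. A key-seeded pattern of +1/-1 values is added to a frame’s pixels at very low strength, and detection correlates a frame against the same pattern. The frame size, `strength`, `threshold`, the `reencode` noise model, and the `ai_generated` metadata field are all hypothetical choices for illustration.

```python
import random

FRAME_SIZE = 256 * 256  # one hypothetical 256x256 grayscale frame, flattened


def make_pattern(key, n):
    """Key-seeded pseudo-random +/-1 pattern; only holders of the key can regenerate it."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def clip(value):
    """Round and clamp to the valid 8-bit pixel range."""
    return max(0, min(255, round(value)))


def embed(pixels, key, strength=3.0):
    """Add the pattern at low amplitude (a few grey levels), so the change is hard to see."""
    pattern = make_pattern(key, len(pixels))
    return [clip(p + strength * w) for p, w in zip(pixels, pattern)]


def detect(pixels, key, threshold=1.5):
    """Correlate mean-removed pixels with the pattern: roughly `strength` if marked, ~0 if not."""
    pattern = make_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    score = sum((p - mean) * w for p, w in zip(pixels, pattern)) / len(pixels)
    return score, score > threshold


def reencode(video, noise_sigma=2.0, seed=1):
    """Crude stand-in for lossy re-encoding: pixels pick up noise, side-channel metadata is dropped."""
    rng = random.Random(seed)
    return {"pixels": [clip(p + rng.gauss(0.0, noise_sigma)) for p in video["pixels"]]}


if __name__ == "__main__":
    rng = random.Random(7)
    original = [rng.randint(0, 255) for _ in range(FRAME_SIZE)]
    key = "hypothetical-secret-key"

    video = {
        "pixels": embed(original, key),
        "metadata": {"ai_generated": True},  # hypothetical metadata tag
    }
    copy = reencode(video)

    print("metadata survived:", "metadata" in copy)
    print("watermark detected:", detect(copy["pixels"], key)[1])
    print("unmarked frame flagged:", detect(reencode({"pixels": original})["pixels"], key)[1])
```

Run as a script, the metadata tag is lost in the simulated re-encode, but the watermark, which lives in the pixels themselves, should still be detected, while the unmarked frame should not be flagged. Production systems have to withstand much harsher transformations, such as cropping, scaling, and heavy compression, which this toy does not model.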

Beyond these technical concerns, creators are also more likely to try to remove visible identifiers if they believe those labels are hurting their content’s distribution. Invisible markers avoid that problem, giving the platform important signals without affecting video playback.

Meta’s solution also takes a resource-efficient approach to embedding this additional information into videos.

It seems like a good way to help limit harm or misconceptions caused by AI-generated content, though, again, there are still limitations with re-posts and non-native uploads, where creators may look to hide such information to maximize their reach.

But if workable solutions like this can be built into more video creation platforms, that would enable greater transparency and more effective labeling, which could reduce the use of generative AI tools for malicious purposes.

Meta says that it’s still working on improvements to its system, and that it’s looking to implement more coded tracking measures for this purpose.

You can read more about Meta’s invisible watermarking process here.