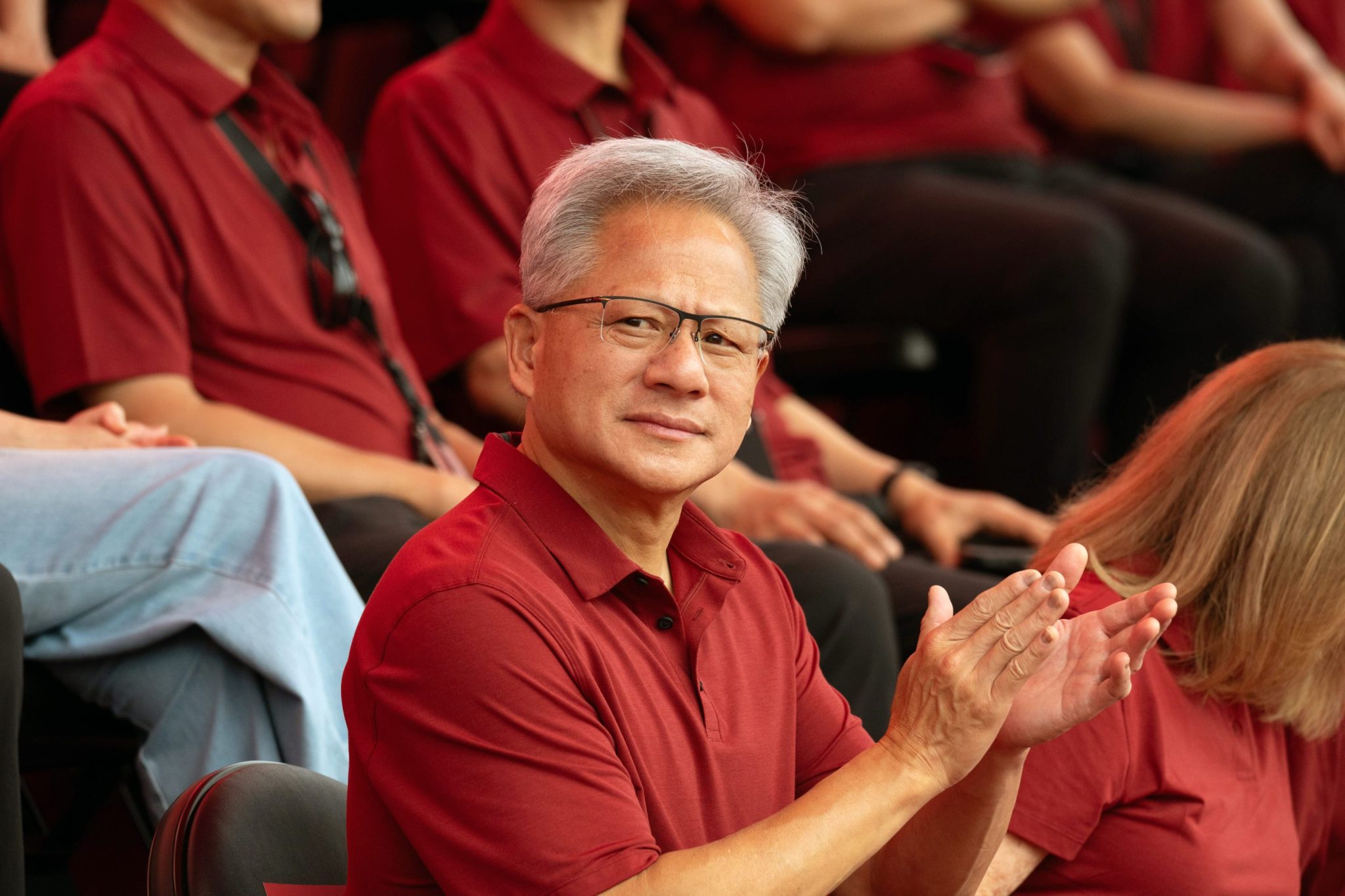

Nvidia is usually the company other firms have to respond to. Not the other way around. But on Tuesday, the $4 trillion chipmaker did something rare: It took to X to publicly defend itself after a report suggested that one of its biggest customers, Meta, is considering shifting part of its AI infrastructure to Google’s in-house chips, called TPUs.

The defensive move came after Nvidia stock fell more than 2.5% on the news near the close, while shares of Alphabet climbed for a third day in a row, buoyed by its well-reviewed new Gemini 3 model, which drew praise from well-known techies such as Salesforce CEO Marc Benioff.

The catalyst was a report from The Information claiming that Google has been pitching its TPUs to outside companies, including Meta and several major financial institutions. Google already rents those chips to customers through its cloud service, but expanding TPU use into customers’ own data centers would mark a major escalation of its rivalry with Nvidia.

That was enough to rattle Wall Street, and also Nvidia itself.

“We’re delighted by Google’s success—they’ve made great advances in AI, and we continue to supply to Google,” Nvidia wrote in a post on X. “Nvidia is a generation ahead of the industry—it’s the only platform that runs every AI model and does it everywhere computing is done.”

It’s not hard to read between the lines. Google’s TPUs might be gaining traction, but Nvidia wants investors, and its customers, to know that it still sees itself as unstoppable.

Brian Kersmanc, a bearish portfolio manager at GQG Partners, had predicted this moment. In an interview with Fortune late last week, he warned that the industry was beginning to recognize Google’s chips as a viable alternative.

“Something I think was very understated in the media, which is fascinating, but Alphabet, Google’s Gemini 3 model, they said that they use their own TPUs to train that model,” Kersmanc said. “So the Nvidia argument is that they’re on all platforms, while arguably the most successful AI company now, which is [Google], didn’t even use GPUs to train their latest model.”

Why Google suddenly matters again

For most of the past decade, Google’s AI chips were treated as a clever in-house tool: fast, efficient, and tightly integrated with Google’s own systems, but not a true threat to Nvidia’s general-purpose GPUs, which control more than 90% of the AI accelerator market.

Part of that is architectural. TPUs are ASICs (application-specific integrated circuits), custom chips optimized for a narrow set of workloads. Nvidia, in its X post, made sure to underline the contrast.

“Nvidia offers greater performance, versatility, and fungibility than ASICs,” the company said, positioning its GPUs as the universal option that can train and run any model across cloud, on-premises, and edge environments. Nvidia also pointed to its latest Blackwell architecture, which it insists remains a generation ahead of the field.

But the past month has changed the tone. Google’s Gemini 3—trained entirely on TPUs—has drawn strong reviews and is being framed by some as a true peer to OpenAI’s top models. And the idea that Meta could deploy TPUs directly inside its data centers—reducing reliance on Nvidia GPUs in parts of its stack—signals a potential shift that investors have long wondered about but hadn’t seen materialize.

Meanwhile, the Burry battle escalates

The defensive posture wasn’t limited to Google. Behind the scenes, Nvidia has also been quietly fighting on another front: a growing feud with Michael Burry, the investor famous for predicting the 2008 housing collapse and a central character in Michael Lewis’s classic The Big Short.

After Burry posted a series of warnings comparing today’s AI boom to the dotcom and telecom bubbles, arguing that Nvidia is the Cisco of this cycle (a company that similarly supplies the hardware for the build-out but could suffer steep corrections), the chipmaker circulated a seven-page memo to Wall Street analysts specifically rebutting his claims. Burry himself revealed the memo on Substack.

Burry has accused the company of excessive stock-based compensation, inflated depreciation schedules that make data center build-outs appear more profitable, and enabling “circular financing” in the AI startup ecosystem. Nvidia, in its memo, pushed back line by line.

“Nvidia does not resemble historical accounting frauds because Nvidia’s underlying business is economically sound, our reporting is complete and transparent, and we care about our reputation for integrity,” it said in the memo, which Barron’s was first to report on.