Meta says that its shift to a Community Notes model is working, based on its latest Community Standards Enforcement Report update, which provides insight into its efforts to moderate content and address harmful elements across its apps.

As you no doubt recall, back in January, Meta announced that it would be moving to a Community Notes model, following the lead of X, while also ending its third-party fact-checking process. The idea behind this, according to Meta CEO Mark Zuckerberg, was that Meta had reached a point where it was censoring too much, so it wanted to give users more input into content decisions, as opposed to making heavy-handed decisions from on high.

The fact that this approach aligns with what U.S. President Donald Trump would prefer was completely coincidental and had nothing to do with Meta’s approach on this front.

So how is Meta measuring the success of its Community Notes initiative in the U.S.?

As per Meta:

“Of the hundreds of billions of pieces of content produced on Facebook and Instagram in Q3 globally, less than 1% was removed for violating our policies and less than 0.1% was removed incorrectly. For the content that was removed, we measured our enforcement precision – that is, the percentage of correct removals out of all removals – to be more than 90% on Facebook and more than 87% on Instagram.”

So Meta says that it’s making fewer mistakes, and therefore fewer people are complaining about Meta’s automated system removing their posts incorrectly.

Which Meta says is a win, but at the same time, if you’re removing less content overall, of course there are going to be fewer mistakes. And while Meta’s saying that “enforcement precision” was more than 90% on Facebook and more than 87% on Instagram, it’s hard to quantify the success of Community Notes, as an alternative to systematic enforcement, if the only measure is whether users complain about removals or not.
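For a rough sense of how these figures fit together, here’s a minimal Python sketch of the “enforcement precision” definition that Meta quotes (correct removals out of all removals). The function names and the 900-out-of-1,000 example are my own illustrative assumptions, not Meta’s data, and the 1% and 90% inputs are just the limits stated in Meta’s quote, so the result is a ceiling, not a measured value.

```python
def enforcement_precision(correct_removals: int, total_removals: int) -> float:
    """Share of removals that were correct, per the definition Meta quotes."""
    if total_removals <= 0:
        raise ValueError("total_removals must be positive")
    return correct_removals / total_removals


def max_incorrect_share(removed_share: float, precision: float) -> float:
    """Share of ALL content removed in error, given the removed share and precision."""
    return removed_share * (1.0 - precision)


# Hypothetical example: 900 correct removals out of 1,000 total removals.
example_precision = enforcement_precision(900, 1000)

# Limits from Meta's statement: "less than 1%" removed, "more than 90%" precise (Facebook).
facebook_ceiling = max_incorrect_share(removed_share=0.01, precision=0.90)

print(f"Example precision: {example_precision:.2%}")
print(f"Ceiling on incorrect removals as a share of all content: {facebook_ceiling:.2%}")
```

The thing to note is that precision only looks at what was removed. It says nothing about violating content that was left up, so removing less can lift this number without telling us anything about exposure to harm.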

In terms of specific policy areas, Meta says that:

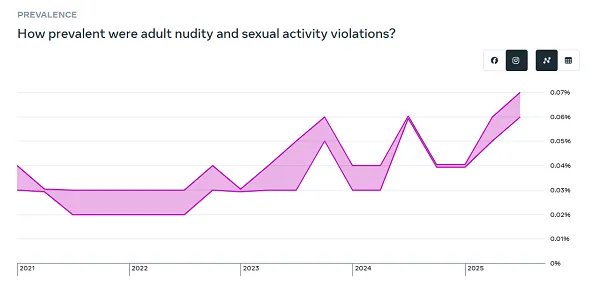

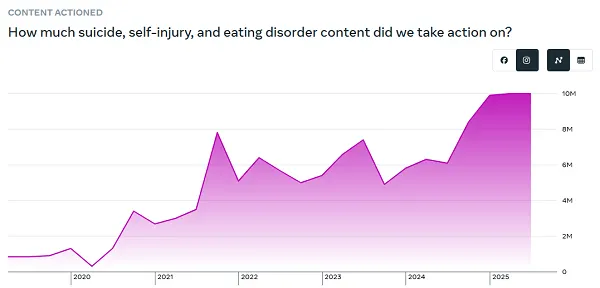

“On both Facebook and Instagram, prevalence increased for adult nudity and sexual activity, and for violent and graphic content, and on Facebook it increased for bullying and harassment. This is largely due to changes made during the quarter to improve reviewer training and enhance review workflows, which impacts how samples are labeled when measuring prevalence.”

Given this proviso, it’s hard to say whether this is a significant point of note or not, because Meta’s saying that the increase is only due to a change in methodology.

So maybe these spikes are worth noting, maybe they’re actually indicators of improvement.

Though in light of the recent push to increase social media restrictions among young teens, this chart remains a concern:

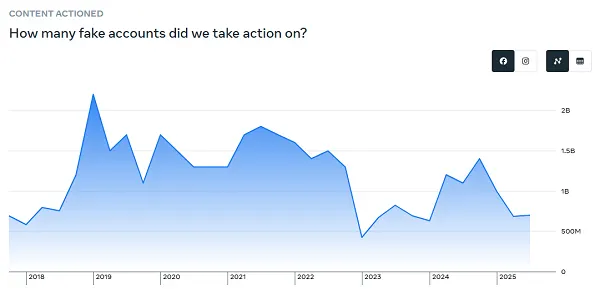

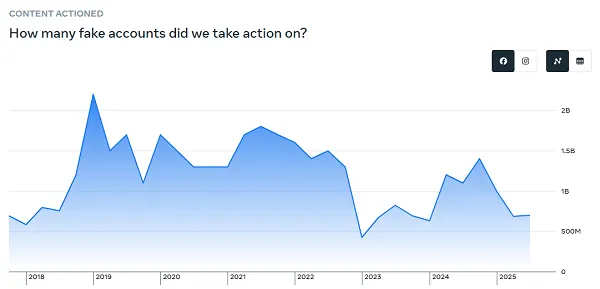

In terms of fake accounts, Meta maintains that around 4% of its more than 3 billion monthly active users are fakes.

Many have questioned this, based on their own experiences of fake profiles on Facebook and IG. Though these days, it’s hard to tell what’s a fake profile and what’s AI, and whether both are considered to be the same thing. I mean, Meta has been working on a project to add AI profiles to its apps, which will interact like real people. So are those fakes?

Either way, for context, 4% of 3.54 billion total users still equates to more than 140 million fake profiles in its apps, which Meta is officially acknowledging.

So there’s that.
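If you want to check that math yourself, here’s a quick Python sketch. The 3.54 billion and 4% inputs are the figures cited above, and the function name is just an illustrative choice. Since Meta says “around 4%”, treat the output as a ballpark, not a precise count.

```python
TOTAL_USERS = 3_540_000_000  # 3.54 billion total users, as cited above
FAKE_SHARE = 0.04            # "around 4%", Meta's own estimate


def estimated_fake_accounts(total_users: int, fake_share: float) -> int:
    """Rough count of fake accounts implied by a user total and a fake share."""
    return round(total_users * fake_share)


estimate = estimated_fake_accounts(TOTAL_USERS, FAKE_SHARE)
print(f"Estimated fake accounts: {estimate:,}")
```

Even a small shift in that percentage moves the total by tens of millions of profiles, which is why the “around” in Meta’s estimate matters.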

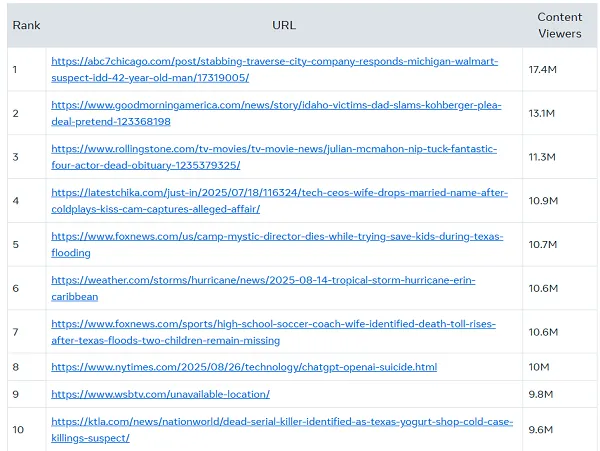

Meta has also published an update to its “Widely Viewed Content Report,” which provides more context around what people are actually seeing on Facebook in the U.S.

The report was originally launched back in 2021, in order to dispel the notion that Facebook’s algorithms amplify divisive content and misinformation. By showing what types of posts and Pages actually see the most engagement, Meta’s seeking to highlight that it’s not as big of a problem in this respect as people might perceive, though again, I would note that at Meta’s scale, even content that gets relatively small traction is still reaching potentially millions of people.

But in terms of what’s getting the most reach, Meta reports that it’s mostly trending news stories that Facebook users are most interested in.

Crime stories, the death of actor Julian McMahon, the Coldplay concert couple, these are the types of updates that gained the most traction on Facebook in Q3.

So it’s not politically divisive stuff, it’s intriguing stories of real-life events. Which is actually better than the usual content that populates this list (normally, tabloid gossip wins out), but the bottom line that Facebook wants to put forward is that political content is not a major element of discussion in the app.

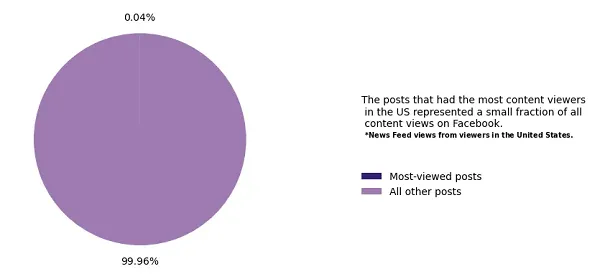

Though, overall, it is worth noting that the share of attention for top posts is minuscule at Facebook’s scale.

In broader context, the platform’s scale and reach are so big that any amplification is significant, so even with these notes on the most shared posts, we’re not really getting much of an understanding of Facebook’s overall influence in this respect.

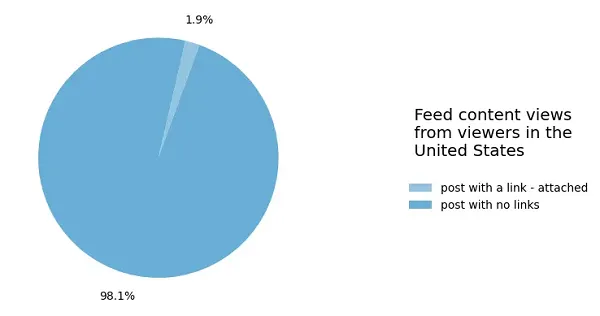

Oh, there’s also this chart, which digital marketers hate:

Link posts are a tiny, tiny part of Facebook overall, so if you’re looking to drive traffic, you’re likely to be disappointed.

The prevalence of link posts has decreased from Q1, when it was at 2.7%, while back in 2022, it was at 9.8%.

So a significant decline for link posts in the app.

Overall, there are no major standout findings in Meta’s latest transparency reports, with its efforts to evolve moderation leading to fewer mistakes, but potentially more exposure to harm as a result. Though by Meta’s own metrics, it’s doing better on this front as well.