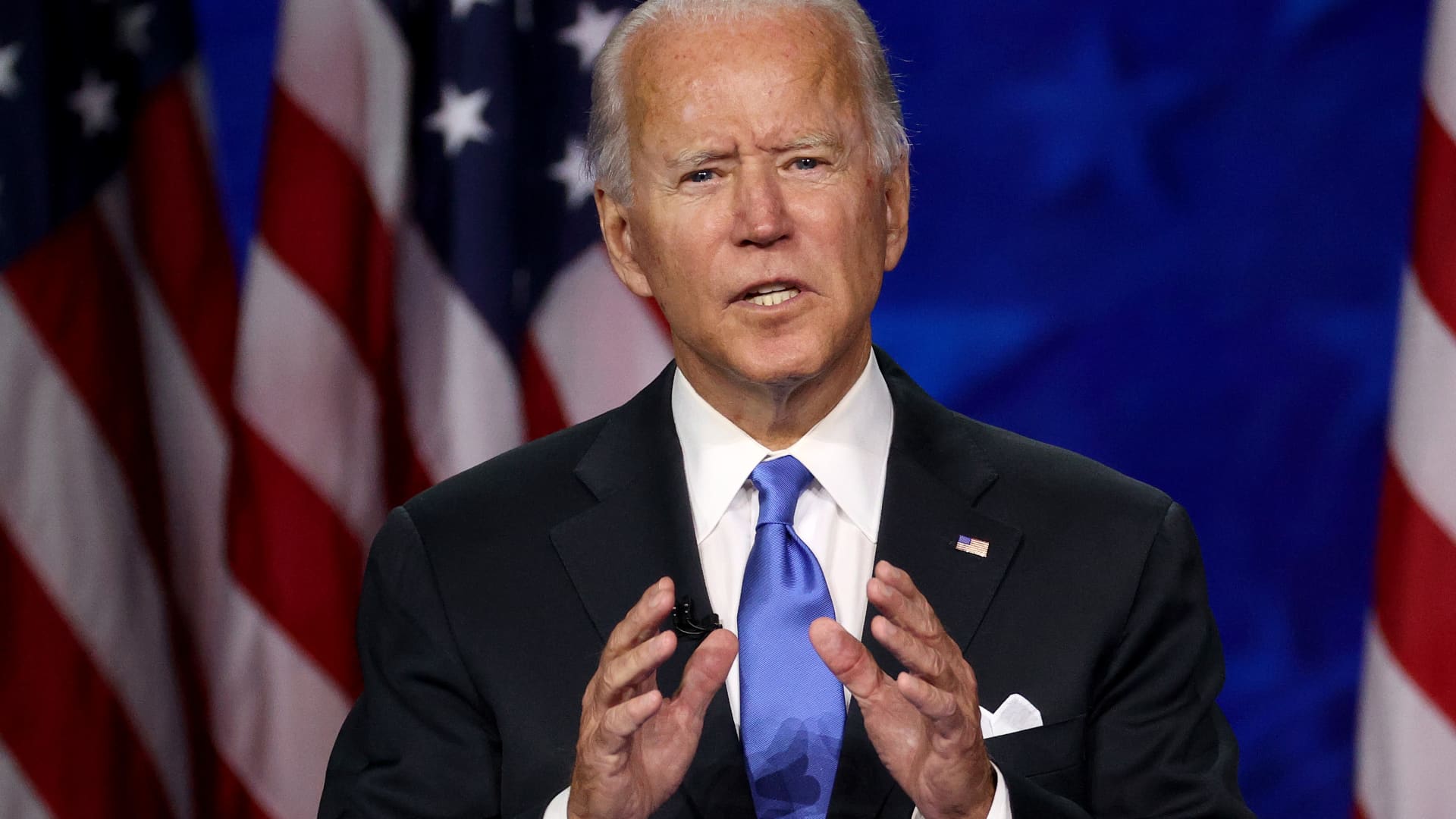

The creator of an audio deepfake of US President Joe Biden urging people not to vote in this week’s New Hampshire primary has been suspended by ElevenLabs, according to a person familiar with the matter.

ElevenLabs’ technology was used to make the deepfake audio, according to Pindrop Security Inc., a voice-fraud detection company that analyzed it.

ElevenLabs was made aware this week of Pindrop’s findings and is investigating, the person said. Once the deepfake was traced to its creator, that user’s account was suspended, said the person, who asked not to be identified because the information isn’t public.

ElevenLabs, a startup that uses artificial intelligence software to replicate voices in more than two dozen languages, said in a statement that it couldn’t comment on specific incidents. But it added, “We are dedicated to preventing the misuse of audio AI tools and take any incidents of misuse extremely seriously.”

Earlier this week, ElevenLabs announced an $80 million financing round from investors including Andreessen Horowitz and Sequoia Capital. Chief Executive Officer Mati Staniszewski said the latest financing gives his startup a $1.1 billion valuation.

In an interview last week, Staniszewski said that audio that impersonates voices without permission would be removed. On its website, the company says it allows voice clones of public figures, like politicians, if the clips “express humor or mockery in a way that it is clear to the listener that what they are hearing is a parody.”

The fake robocall of Biden urging people to save their votes for the US elections in November has alarmed disinformation experts and elections officials alike. Not only did it illustrate the relative ease of creating audio deepfakes, but it also hints at the potential for bad actors to use the technology to keep voters away from the polls.

A spokesperson for the New Hampshire Attorney General said at the time that the messages appeared “to be an unlawful attempt to disrupt the New Hampshire Presidential Primary Election and to suppress New Hampshire voters.” The agency has opened an investigation.

Users who want to clone voices on ElevenLabs must use a credit card to pay for the feature. It isn’t clear if ElevenLabs passed this information to New Hampshire authorities.

Bloomberg News received a copy of the recording on Jan. 22 from the Attorney General’s office and tried to determine which technology was used to create it. Those efforts included running it through ElevenLabs’ own “speech classifier” tool, which is supposed to show whether the audio was created using artificial intelligence and ElevenLabs’ technology. The recording showed a 2% likelihood of being synthetic or created using ElevenLabs, according to the tool.

Other deepfake-detection tools confirmed it was a deepfake but couldn’t identify the technology behind the audio.

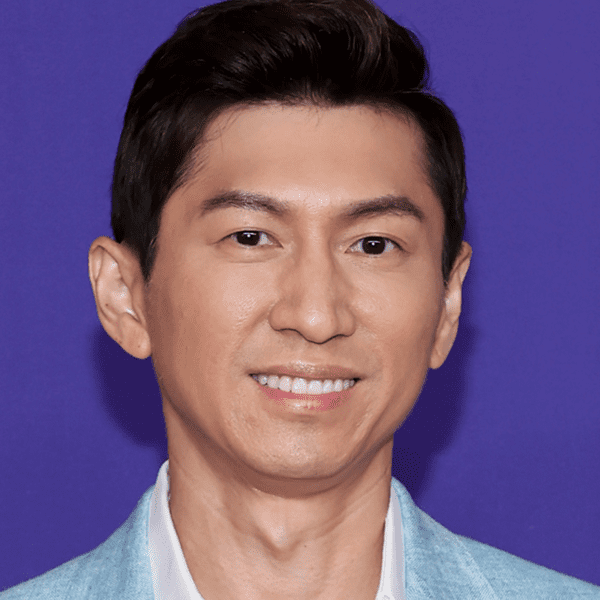

Pindrop’s researchers cleaned the audio by removing background noise and silence, then broke it into 155 segments of 250 milliseconds each for deep analysis, Pindrop’s founder Vijay Balasubramaniyan said in an interview. The company then compared the audio to a database of other samples it has collected from more than 100 text-to-speech systems that are commonly used to produce deepfakes, he said.
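To make the segmenting step concrete, here is a minimal Python sketch of cutting a recording into 250-millisecond segments and discarding the silent ones. It is not Pindrop’s method, which the company has not published in this detail. The function names, the 8,000 Hz sample rate and the silence threshold are assumptions chosen for illustration. At 250 milliseconds each, 155 segments amount to 38.75 seconds of audio.

```python
import math

def split_into_segments(samples, sample_rate, segment_ms=250):
    """Cut a list of audio samples into fixed-length segments.

    A trailing partial segment is dropped so every segment has equal length.
    """
    seg_len = sample_rate * segment_ms // 1000
    last_start = len(samples) - seg_len
    return [samples[i:i + seg_len] for i in range(0, last_start + 1, seg_len)]

def rms(segment):
    """Root-mean-square level of one segment (a simple loudness measure)."""
    return math.sqrt(sum(x * x for x in segment) / len(segment))

def drop_silence(segments, threshold=0.01):
    """Keep only segments whose RMS level reaches the (assumed) threshold."""
    return [seg for seg in segments if rms(seg) >= threshold]

# Hypothetical test signal: 1 s of a 440 Hz tone, 0.5 s of silence, 0.5 s of tone.
SAMPLE_RATE = 8000  # assumed telephone-quality sample rate
tone = [0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE)
        for n in range(SAMPLE_RATE)]
samples = tone + [0.0] * (SAMPLE_RATE // 2) + tone[:SAMPLE_RATE // 2]

segments = split_into_segments(samples, SAMPLE_RATE)
voiced = drop_silence(segments)
voiced_seconds = len(voiced) * 250 / 1000
```

In this toy signal, the two segments that fall entirely within the silent half-second are discarded and the rest are kept. A real pipeline would also need noise removal and a threshold tuned to the recording, neither of which is attempted here.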

The researchers concluded that it was almost certainly created with ElevenLabs’ technology, Balasubramaniyan said.

In an ElevenLabs support channel on Discord, a moderator indicated on a public forum that the company’s speech classifier can’t detect its own audio unless it’s analyzing the raw file, a point echoed by Balasubramaniyan. With the Biden call, the only files available for immediate analysis were recordings of the phone call, he said, explaining that this made analysis harder because bits of metadata had been removed and it was harder to detect wavelengths.

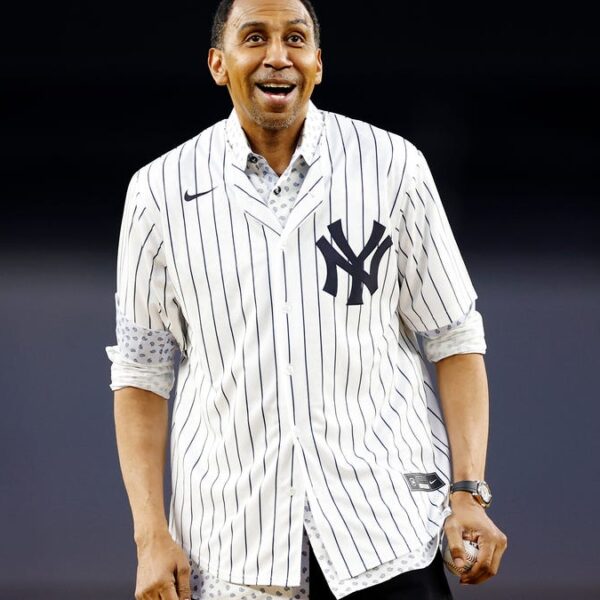

Siwei Lyu, a professor at the University at Buffalo who specializes in deepfakes and digital media forensics, also analyzed a copy of the deepfake and ran it through ElevenLabs’ classifier, concluding that it was likely made with that company’s software, he told Bloomberg News. Lyu said ElevenLabs’ classifier is one of the first he checks when trying to determine an audio deepfake’s origins because the software is so commonly used.

“We’re going to see a lot more of this with the general election coming,” he said. “This is definitely a problem everyone should be aware of.”

Pindrop shared with Bloomberg News a version of the audio that its researchers had scrubbed and refined. Using that recording, ElevenLabs’ speech classifier concluded that it was an 84% match with its own technology.

Voice-cloning technology enables a “crazy combination of scale and personalization” that can fool people into thinking they’re listening to local politicians or high-ranking elected officials, Balasubramaniyan said, describing it as “a worrisome thing.”

Tech investors are throwing money at AI startups developing synthetic voices, videos and images in the hope that they will transform the media and gaming industries.

Staniszewski said in the interview last week that his 40-person company had five people devoted to handling content moderation. “Ninety-nine percent of use cases we are seeing are in a positive realm,” the CEO said. With its funding announcement, the company also shared that its platform had generated more than 100 years of audio in the past 12 months.