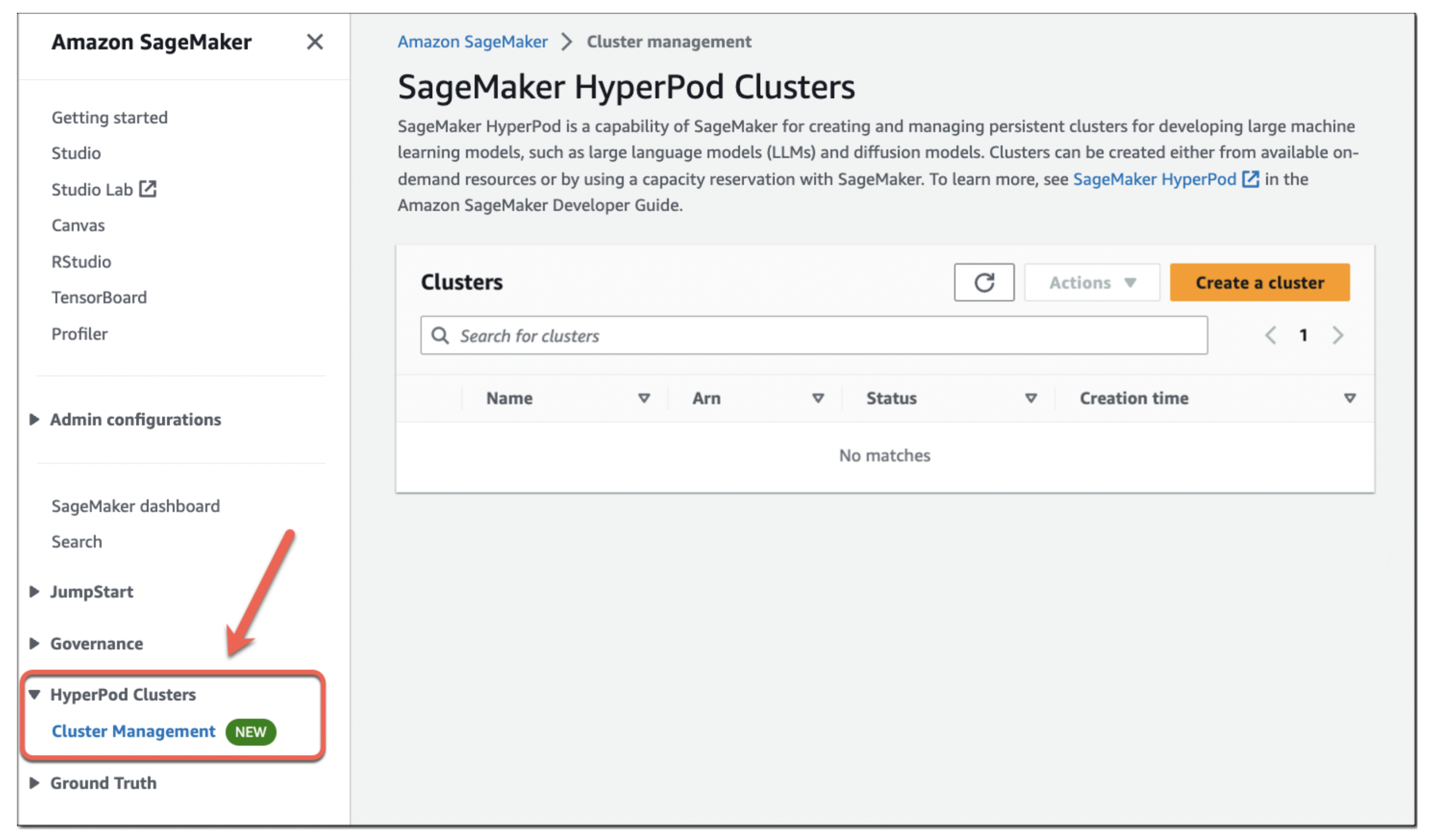

At its re:Invent convention, Amazon’s AWS cloud arm as we speak introduced the launch of SageMaker HyperPod, a brand new purpose-built service for coaching and fine-tuning massive language fashions. SageMaker HyperPod is now usually out there.

Amazon has lengthy guess on SageMaker, its service for constructing, coaching and deploying machine studying fashions, because the spine of its machine studying technique. Now, with the arrival of generative AI, it’s perhaps no shock that it’s also leaning on SageMaker because the core product to make it simpler for its customers to coach and fine-tune massive language fashions (LLMs).

“SageMaker HyperPod gives you the ability to create a distributed cluster with accelerated instances that’s optimized for disputed training,” Ankur Mehrotra, AWS’ common supervisor for SageMaker, instructed me in an interview forward of as we speak’s announcement. “It gives you the tools to efficiently distribute models and data across your cluster — and that speeds up your training process.”

He additionally famous that SageMaker HyperPod permits customers to ceaselessly save checkpoints, permitting them to pause, analyze and optimize the coaching course of with out having to start out over. The service additionally consists of a lot of fail-safes in order that when a GPUs goes down for some purpose, your complete coaching course of doesn’t fail, too.

“For an ML team, for instance, that’s just interested in training the model — for them, it becomes like a zero-touch experience and the cluster becomes sort of a self-healing cluster in some sense,” Mehrotra defined. “Overall, these capabilities can help you train foundation models up to 40 percent faster, which, if you think about the cost and the time to market, is a huge differentiator.”

Customers can decide to coach on Amazon’s personal customized Trainium (and now Trainium 2) chips or Nvidia-based GPU situations, together with these utilizing the H100 processor. The corporate guarantees that HyperPod can pace up the coaching course of by as much as 40%.

The corporate already has some expertise with this utilizing SageMaker for constructing LLMs. The Falcon 180B mannequin, for instance, was trained on SageMaker, utilizing a cluster of 1000’s of A100 GPUs. Mehrotra famous that AWS was capable of take what it discovered from that and its earlier expertise with scaling SageMaker to construct HyperPod.

Perplexity AI’s co-founder and CEO Aravind Srinivas instructed me that his firm bought early entry to the service throughout its personal beta. He famous that his workforce was initially skeptical about utilizing AWS for coaching and fine-tuning its fashions.

“We did not work with AWS before,” he mentioned. “There was a myth — it’s a myth, it’s not a fact — that AWS does not have great infrastructure for large model training and obviously we didn’t have time to do due diligence, so we believed it.” The workforce bought related with AWS, although, and the engineers there requested them to check the service out (free of charge). he additionally famous that he has discovered it simple to get help from AWS — and entry to sufficient GPUs for Perplexity’s use case. It clearly helped that the workforce was already accustomed to doing inference on AWS.

Srinivas additionally confused that the AWS HyperPod workforce targeted strongly on dashing up the interconnects that hyperlink Nvidia’s graphics playing cards. “They went and optimized the primitives — Nvidia’s various primitives — that allow you to communicate these gradients and parameters across different nodes,” he defined.