Hello and welcome to Eye on AI…In this edition: DeepSeek drops another impressive model…China tells companies not to buy Nvidia chips…and OpenEvidence posts a perfect score on the medical licensing exam.

Hi, it’s Jeremy here, just back from a few weeks of much-needed vacation. It was nice to be able to get a little distance and perspective on the AI news cycle. (Although I did make an appearance on Rana el Kaliouby’s “Pioneers of AI” podcast to discuss the launch of GPT-5. You can check that out here.)

Returning this week, I found the news dominated by investor fears that we’re in an “AI bubble” and that it is about to either pop or deflate. Nervous investors sharply drove down the shares of many publicly traded tech companies tied to the AI trade this week, including Nvidia, CoreWeave, Microsoft, and Alphabet.

To me, one of the clearest signs that we are in a bubble, at least in terms of publicly traded AI stocks, is the extent to which investors are actively looking for reasons to bail. Take the supposed rationale for this week’s sell-off: OpenAI CEO Sam Altman’s comments that he thought there was an AI bubble among venture-backed, privately held AI startups, and an MIT report that found 95% of AI pilots fail. Altman wasn’t talking about the public companies that stock market investors hold in their portfolios, but traders didn’t care. They read only the headlines and interpreted Altman’s remarks broadly. As for the MIT report, the market chose to read it as an indictment of AI as a whole and headed for the exits, even though that’s not exactly what the research said, as we’ll see in a moment.

I’m going to spend the rest of this essay on the MIT report because I think it is relevant for Eye on AI readers beyond its implications for investors. The report looked at what companies are actually trying to do with AI and why they may not be succeeding. Entitled The GenAI Divide: State of AI in Business 2025, the report was published by MIT Media Lab’s NANDA Initiative. (My Fortune colleague Sheryl Estrada was one of the first to cover the report’s findings. You can read her coverage here.)

NANDA is an acronym for “Networked-Agents and Decentralized AI” and it is a project designed to create new protocols and a new architecture for an internet full of autonomous AI agents. NANDA might have an incentive to suggest that current AI methods aren’t working—but that if companies created more agentic AI systems using the NANDA protocol, their problems would disappear. There’s no indication that NANDA did anything to skew its survey results or to frame them in a particular light, but it is always important to consider the source.

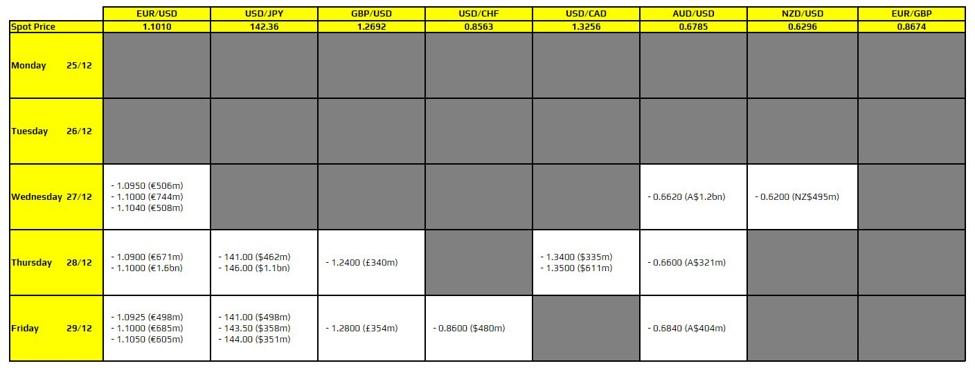

OK, now let’s look at what the report actually says. Its authors interviewed 150 executives, surveyed 350 employees, and examined 300 individual AI projects. They found that 95% of AI pilot projects failed to deliver any discernible financial savings or uplift in profits. These findings are not all that different from what many previous surveys have found, and those surveys had no negative impact on the stock market. Consulting firm Capgemini found in 2023 that 88% of AI pilots failed to reach production. (S&P Global found earlier this year that 42% of generative AI pilots were abandoned, which is still not great.)

You’re doing it wrong

But where it gets interesting is what the NANDA study said about the apparent reasons for these failures. The biggest problem, the report found, was not that the AI models weren’t capable enough (although execs tended to think that was the problem). Instead, the researchers discovered a “learning gap”: people and organizations simply did not understand how to use the AI tools properly or how to design workflows that could capture the benefits of AI while minimizing downside risks.

Large language models seem simple—you can give them instructions in plain language, after all. But it takes expertise and experimentation to embed them in business workflows. Wharton professor Ethan Mollick has suggested that the real benefits of AI will come when companies abandon trying to get AI models to follow existing processes—many of which he argues reflect bureaucracy and office politics more than anything else—and simply let the models find their own way to produce the desired business outcomes. (I think Mollick underestimates the extent to which processes in many large companies reflect regulatory demands, but he no doubt has a point in many cases.)

This phenomenon may also explain why the MIT NANDA research found that startups, which often don’t have such entrenched business processes to begin with, are much more likely to find genAI can deliver ROI.

Buy, don’t build

The report also found that companies that bought AI models and solutions from outside vendors were more successful than enterprises that tried to build their own systems. Purchased AI tools succeeded 67% of the time, while internal builds panned out only one-third as often. Some large organizations, especially in regulated industries, feel they have to build their own tools for legal and data privacy reasons. But in some cases organizations fetishize control when they would be better off handing the hard work to a vendor whose entire business is creating AI software.

Building AI models or systems from scratch requires a level of expertise many companies don’t have and can’t afford to hire. It also means that companies are building their AI systems on open-source or open-weight LLMs. While the performance of these models has improved markedly in the past year, most still lag their proprietary rivals. And when it comes to using AI in actual business cases, a 5% difference in reasoning abilities or hallucination rates can result in a substantial difference in outcomes.

Finally, the MIT report found that many companies are deploying AI in marketing and sales, when the tools might have a much bigger impact if used to take costs out of back-end processes and procedures. This too may contribute to AI’s missing ROI.

The overall thrust of the MIT report was that the problem was not the tech. It was how companies were using the tech. But that’s not how the stock market chose to interpret the results. To me, that says more about the irrational exuberance in the stock market than it does about the actual impact AI will have on business in five years’ time.

With that, here’s the rest of the AI news.

Jeremy Kahn


@jeremyakahn

FORTUNE ON AI

Why the NFL drafted Microsoft’s gen AI for the league’s next big play—by John Kell

OpenAI’s chairman says ChatGPT is ‘obviating’ his own job—and says AI is like an ‘Iron Man suit’ for workers—by Marco Quiroz-Gutierrez

Meta wants to speed its race to ‘superintelligence’—but investors will still want their billions in ad revenue—by Sharon Goldman

AI IN THE NEWS

China moves to restrict Nvidia H20 sales after Lutnick remarks. That’s according to a story in the Financial Times, which said Beijing had found U.S. Commerce Secretary Howard Lutnick’s comments that the U.S. was withholding its best technology from China to be “insulting.” The Cyberspace Administration of China (CAC), the country’s internet regulator, issued an informal notice to major tech companies such as ByteDance and Alibaba, asking them to halt new orders for Nvidia H20s. MIIT, the country’s telecom and software regulator, and the NDRC, the state planning agency that is leading a drive for tech independence, have also issued guidance telling companies not to purchase Nvidia chips. The agencies have cited security concerns as the rationale for their stance, but unnamed Chinese officials told the newspaper that Lutnick’s comments also played a role.

DeepSeek launches its V3.1 model to enthusiastic reviews. The Chinese frontier AI company released an updated version of its powerful open-source V3 large language model. V3.1 features a larger context window than its predecessor, meaning it can handle longer prompts and more data. It also uses a hybrid architecture that activates only a fraction of its 685 billion parameters for each token, making it faster and more efficient than some rival models. It also offers better reasoning and agentic capabilities than the original V3, which was the underlying model DeepSeek used to create its wildly successful R1 reasoning model. On benchmark tests so far, V3.1 is competitive with proprietary models from OpenAI, Google, and Anthropic at a much lower price point: just over $1 for some coding tasks, compared to $70 for rivals. Read more from Bloomberg here.

Google unveils its latest Pixel phones full of AI features. The company’s Pixel 10 smartphone lineup is heavily centered on its Gemini AI assistant. The phones include features such as “Magic Cue,” which suggests next actions based on contextual information, an AI “Camera Coach” for smarter photography, and Gemini Live for real-time screen interactions. The new AI features may help Google win some market share from Apple, which has delayed the rollout of many AI features for its iPhones until 2026. You can read more from CNBC here.

OpenAI considers renting AI infrastructure to others. OpenAI CFO Sarah Friar told Bloomberg that the company is considering renting out AI-optimized data centers and infrastructure to other companies in the future, similar to Amazon’s AWS, even though OpenAI currently struggles to find enough data center capacity for its own operations. Friar also said the company is exploring financing options beyond debt as it faces immense costs, with CEO Sam Altman predicting trillions of dollars in future data center spending. Friar also confirmed in an interview with CNBC that the company recently hit $1 billion in monthly revenue for the first time, while Bloomberg reported that secondary share sales have valued the company at $500 billion.

AI CALENDAR

Sept. 8-10: Fortune Brainstorm Tech, Park City, Utah. Apply to attend here.

Oct. 6-10: World AI Week, Amsterdam

Oct. 21-22: TEDAI San Francisco. Apply to attend here.

Dec. 2-7: NeurIPS, San Diego

Dec. 8-9: Fortune Brainstorm AI San Francisco. Apply to attend here.

EYE ON AI NUMBERS

100%

That’s the score medical AI startup OpenEvidence says its new AI model achieved on the U.S. Medical Licensing Exam (USMLE), the three-part exam all new doctors must take before they can practice. This beats the 90% its model scored two years ago as well as the 97% that OpenAI’s GPT-5 recently scored. OpenEvidence says its model offers case-based, literature-grounded explanations for its answers and the startup is offering the model to medical students as a free educational tool through a partnership with the American Medical Association, its associated journal, and the New England Journal of Medicine. You can read more from the healthcare-focused publication Fierce Healthcare here.