AI startup Anthropic, backed by hundreds of millions in venture capital (and perhaps soon hundreds of millions more), today announced the latest version of its GenAI tech, Claude. And the company claims that it rivals OpenAI’s GPT-4 in terms of performance.

Claude 3, as Anthropic’s new GenAI is called, is a family of models: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus, with Opus being the most powerful. All show “increased capabilities” in analysis and forecasting, Anthropic claims, as well as enhanced performance on specific benchmarks versus models like GPT-4 (but not GPT-4 Turbo) and Google’s Gemini 1.0 Ultra (but not Gemini 1.5 Pro).

Notably, Claude 3 is Anthropic’s first multimodal GenAI, meaning that it can analyze text as well as images, similar to some flavors of GPT-4 and Gemini. Claude 3 can process photos, charts, graphs and technical diagrams, drawing from PDFs, slideshows and other document types.

In a step one better than some GenAI rivals, Claude 3 can analyze multiple images in a single request (up to a maximum of 20). This allows it to compare and contrast images, notes Anthropic.
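To make the 20-image ceiling concrete, here is a minimal sketch of how a caller might split a larger set of images into request-sized batches. The helper name `batch_images` and the file names are hypothetical, and nothing here calls Anthropic's API; only the limit of 20 comes from the article.

```python
MAX_IMAGES_PER_REQUEST = 20  # limit reported in the article


def batch_images(image_paths: list[str], limit: int = MAX_IMAGES_PER_REQUEST) -> list[list[str]]:
    """Split image paths into consecutive batches of at most `limit` items."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return [image_paths[i:i + limit] for i in range(0, len(image_paths), limit)]


# Hypothetical file names, purely for illustration.
paths = [f"chart_{n}.png" for n in range(45)]
batches = batch_images(paths)
print([len(batch) for batch in batches])
```

Each batch could then be sent as its own request, at the cost of the model no longer being able to compare images across batches.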

But there are limits to Claude 3’s image processing.

Anthropic has disabled the models from identifying people, no doubt wary of the ethical and legal implications. And the company admits that Claude 3 is prone to making mistakes with “low-quality” images (under 200 pixels) and struggles with tasks involving spatial reasoning (e.g. reading an analog clock face) and object counting (Claude 3 can’t give exact counts of objects in images).

Image Credits: Anthropic

Claude 3 also won’t generate artwork. The models are strictly image-analyzing, at least for now.

Anthropic says that, whether fielding text or images, Claude 3 can generally be expected to follow multi-step instructions better than its predecessors, produce structured output in formats like JSON and converse in languages other than English. Claude 3 should also refuse to answer questions less often thanks to a “more nuanced understanding of requests,” Anthropic says. And soon, Claude 3 will cite the source of its answers to questions so users can verify them.

“Claude 3 tends to generate more expressive and engaging responses,” Anthropic writes in a help article. “[It’s] easier to prompt and steer compared to our legacy models. Users should find that they can achieve the desired results with shorter and more concise prompts.”

Some of these improvements stem from Claude 3’s expanded context.

A model’s context, or context window, refers to input data (e.g. text) that the model considers before generating output. Models with small context windows tend to “forget” the content of even very recent conversations, leading them to veer off topic, often in problematic ways. As an added upside, large-context models can better grasp the narrative flow of the data they take in and generate more contextually rich responses (hypothetically, at least).
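The “forgetting” effect can be illustrated with a toy sketch. It assumes a crude tokenizer (one token per whitespace-separated word) and a simple policy of keeping only the most recent messages that fit; real models use subword tokenizers and applications choose their own truncation strategies, so the function names and the policy here are illustrative assumptions, not how Claude 3 works internally.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def visible_context(messages: list[str], window_tokens: int) -> list[str]:
    """Return the most recent messages that fit within the token budget."""
    kept: deque[str] = deque()
    used = 0
    # Walk backwards from the newest message and stop at the first one that no longer fits.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > window_tokens:
            break
        kept.appendleft(message)
        used += cost
    return list(kept)


conversation = ["my name is Ada", "I like chess", "what is my name"]
print(visible_context(conversation, window_tokens=8))    # small window drops the oldest message
print(visible_context(conversation, window_tokens=100))  # large window keeps everything
```

With the small window, the message that contains the user's name falls outside the context, so a model limited to that view could not answer the final question.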

Anthropic says that Claude 3 will initially support a 200,000-token context window, equivalent to about 150,000 words, with select customers getting up to a 1-million-token context window (~700,000 words). That’s on par with Google’s newest GenAI model, the above-mentioned Gemini 1.5 Pro, which also offers up to a 1-million-token context window.
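The token-to-word figures above imply a rough conversion rate, which the following sketch makes explicit. The ratio is derived only from the article's numbers (150,000 words per 200,000 tokens, or 0.75); actual ratios depend on the tokenizer, the language and the text, and the function name `estimate_words` is a hypothetical helper.

```python
# Ratio implied by the article's first figure: 150,000 words per 200,000 tokens.
WORDS_PER_TOKEN = 150_000 / 200_000  # 0.75


def estimate_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough word capacity of a context window with the given token budget."""
    return round(tokens * words_per_token)


print(estimate_words(200_000))          # the standard window
print(estimate_words(1_000_000))        # the larger window at the same 0.75 ratio
print(estimate_words(1_000_000, 0.70))  # the ratio implied by the ~700,000-word figure
```

Note that the two figures in the article imply slightly different ratios (0.75 and roughly 0.70), which is a reminder that these are approximations rather than fixed conversions.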

Now, just because Claude 3 is an upgrade over what came before it doesn’t mean it’s perfect.

In a technical whitepaper, Anthropic admits that Claude 3 isn’t immune from the issues plaguing other GenAI models, namely bias and hallucinations (i.e. making stuff up). Unlike some GenAI models, Claude 3 can’t search the web; the models can only answer questions using data from before August 2023. And while Claude is multilingual, it’s not as fluent in certain “low-resource” languages as it is in English.

But Anthropic is promising frequent updates to Claude 3 in the months to come.

“We don’t believe that model intelligence is anywhere near its limits, and we plan to release [enhancements] to the Claude 3 model family over the next few months,” the company writes in a blog post.

Opus and Sonnet are available now on the web and via Anthropic’s dev console and API, Amazon’s Bedrock platform and Google’s Vertex AI. Haiku will follow later this year.

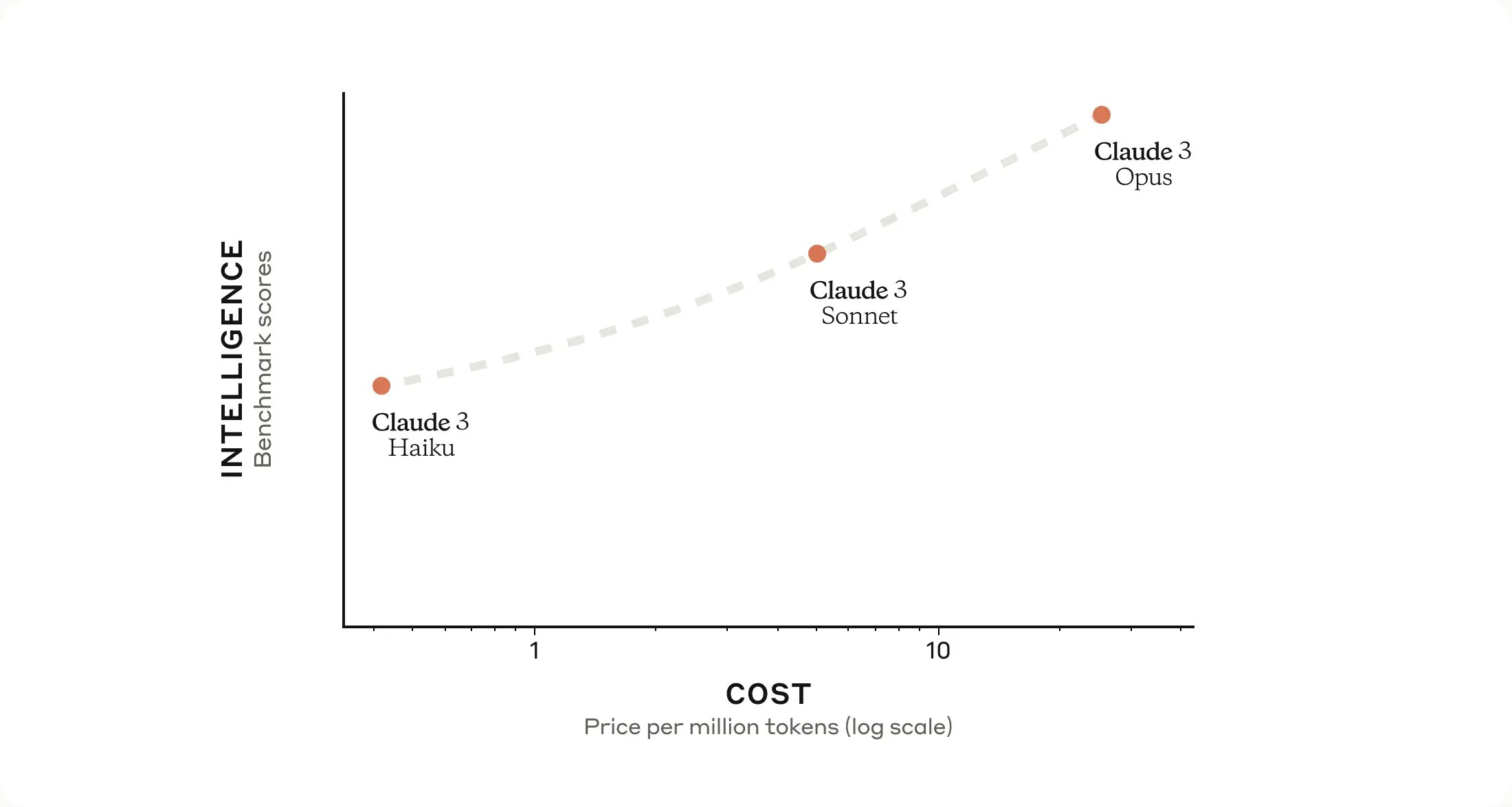

Here’s the pricing breakdown:

- Opus: $15 per million input tokens, $75 per million output tokens

- Sonnet: $3 per million input tokens, $15 per million output tokens

- Haiku: $0.25 per million input tokens, $1.25 per million output tokens
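As a worked example of what those rates mean per request, the sketch below turns the listed prices into a cost estimate. The prices are the ones quoted above; the dictionary keys, the function name `request_cost` and the sample token counts are illustrative assumptions, and actual billing may differ.

```python
# USD per million tokens, as listed in the article: (input, output).
PRICES_PER_MILLION = {
    "opus": (15.00, 75.00),
    "sonnet": (3.00, 15.00),
    "haiku": (0.25, 1.25),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the listed per-million-token rates."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical request: a 10,000-token prompt with a 2,000-token reply.
for name in PRICES_PER_MILLION:
    print(f"{name}: ${request_cost(name, 10_000, 2_000):.4f}")
```

At these rates, output tokens cost five times as much as input tokens for every model, so long responses dominate the bill faster than long prompts do.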

So that’s Claude 3. But what’s the 30,000-foot view?

Well, as we’ve reported previously, Anthropic’s ambition is to create a “next-gen algorithm for AI self-teaching.” Such an algorithm could be used to build virtual assistants that can answer emails, perform research and generate art, books and more, some of which we’ve already gotten a taste of with the likes of GPT-4 and other large language models.

Anthropic hints at this in the aforementioned blog post, saying that it plans to add features to Claude 3 that enhance its out-of-the-gate capabilities, including allowing Claude 3 to interact with other systems, interactive coding and “more advanced agentic capabilities.”

That last bit calls to mind OpenAI’s reported ambitions to build a form of software agent to automate complex tasks, like transferring data from a document to a spreadsheet for analysis or automatically filling out expense reports and entering them in accounting software. OpenAI already offers an API that allows developers to build “agent-like experiences” into their apps, and Anthropic, it seems, is intent on delivering comparable functionality.

Could we see an image generator from Anthropic next? It’d surprise me, frankly. Image generators are the subject of much controversy these days, mainly for copyright- and bias-related reasons. Google was recently forced to disable its image generator after it injected diversity into pictures with a farcical disregard for historical context, and a number of image generator vendors are in legal battles with artists who accuse them of profiting off of their work by training GenAI on it without providing credit or compensation.

I’m curious to see the evolution of Anthropic’s technique for training GenAI, “constitutional AI,” which the company claims makes the behavior of its models both easier to understand and simpler to adjust as needed. Constitutional AI seeks to provide a way to align AI with human intentions, having models respond to questions and perform tasks using a simple set of guiding principles. For example, for Claude 3, Anthropic said that it added a constitutional principle, informed by customer feedback, that instructs the models to be understanding of and accessible to people with disabilities.

Whatever Anthropic’s endgame, it’s in it for the long haul. According to a pitch deck leaked in May of last year, the company aims to raise as much as $5 billion over the next 12 months or so, which might just be the baseline it needs to remain competitive with OpenAI. (Training models isn’t cheap, after all.) It’s well on its way, with $2 billion and $4 billion in committed capital and pledges from Google and Amazon, respectively.