Given the latest revelations about xAI’s Grok producing illicit content en masse, some of it depicting minors, this feels like a timely overview.

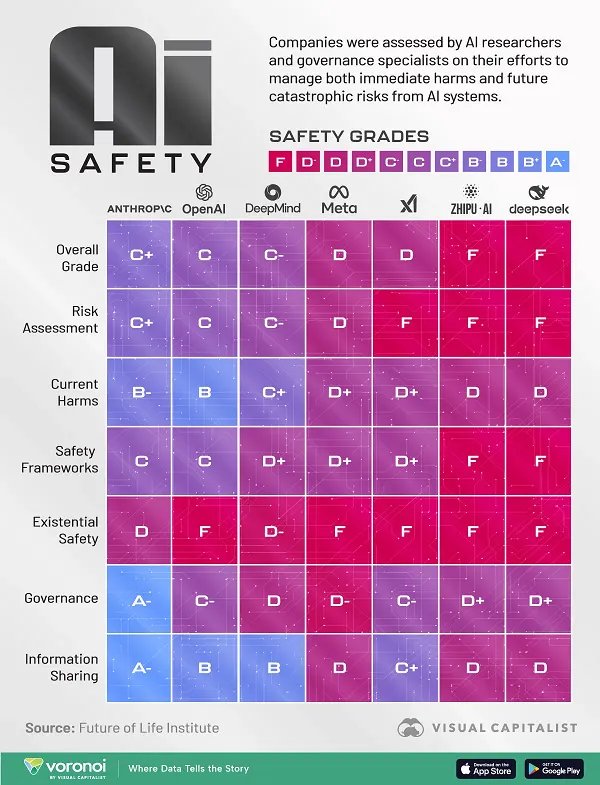

The team from the Future of Life Institute recently undertook a safety review of some of the most popular AI tools on the market, including Meta AI, OpenAI’s ChatGPT, Grok, and more.

The review looked at six key elements:

- Risk assessment – Efforts to ensure that the tool can’t be manipulated or used for harm

- Current harms – Including data security risks and digital watermarking

- Safety frameworks – The process each platform has for identifying and addressing risk

- Existential safety – Whether the project is being monitored for unexpected evolutions in programming

- Governance – The company’s lobbying on AI governance and AI safety regulations

- Information sharing – System transparency and insight into how each tool works

Based on these six elements, the report then gave each AI project an overall safety score, which reflects a broader assessment of how each is managing developmental risk.

The team from Visual Capitalist has translated these results into the infographic below, which provides some additional food for thought about AI development and where we might be heading (especially with the White House looking to remove potential impediments to AI development).