Welcome to Eye on AI! AI reporter Sharon Goldman here, filling in for Jeremy Kahn, who is on holiday. In this edition… China tells firms to avoid Nvidia H20 Chips after Trump ends ban but takes cut of revenue… Students are flocking to college courses in AI… Anthropic will offer Claude AI to the U.S. government for $1.

Las Vegas in August feels like another planet—blazing heat, flashing lights, and the constant clatter of slot machines. That otherworldly vibe carried over to the two conferences I attended last week: Black Hat and DEF CON, two of the year’s biggest security and “ethical” hacking conferences, where cutting-edge security research is presented; hackers race to expose flaws in everything from AI chatbots to power grids; and governments, corporations, and hobbyists swap notes and learn the latest threat-fighting techniques.

I was there because AI now occupies a strange place in the security world—it is both a vulnerable target, often under threat from malicious AI; an armed defender using that same technology to protect systems and networks from bad actors; and an offensive player, deployed to probe weaknesses or carry out attacks (illegally in criminal hands). Sound confusing? It is—and that contradiction was on full display at the very corporate Black Hat, as well as DEF CON, often referred to as “hacker summer camp.”

Here are three of my favorite takeaways from the conferences:

- ChatGPT agents can be hacked. At Black Hat, researchers from security firm Zenity showed how hackers could exploit OpenAI’s new Connectors feature, which lets ChatGPT pull in data from apps like Google Drive, SharePoint, and GitHub. Their proof-of-concept attack, called AgentFlayer, used an innocent-looking “poisoned” document with hidden instructions to quietly make ChatGPT search the victim’s files for sensitive information and send it back to the attacker. The kicker: It required no clicks or downloads from the user—this video shows how it was done. OpenAI fixed the flaw after being alerted, but the episode underscores a growing risk as AI systems link to more outside apps: sneaky “prompt injection” attacks, known as zero-click attacks (like the one I reported on in June) that trick chatbots into doing the hacker’s bidding without the user realizing it.

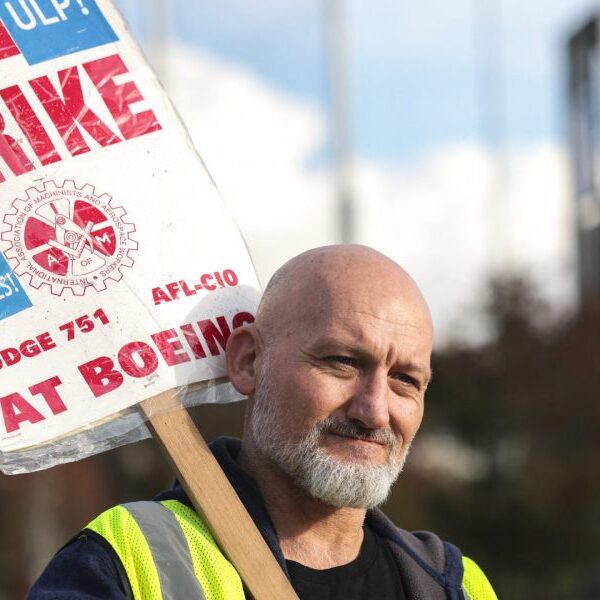

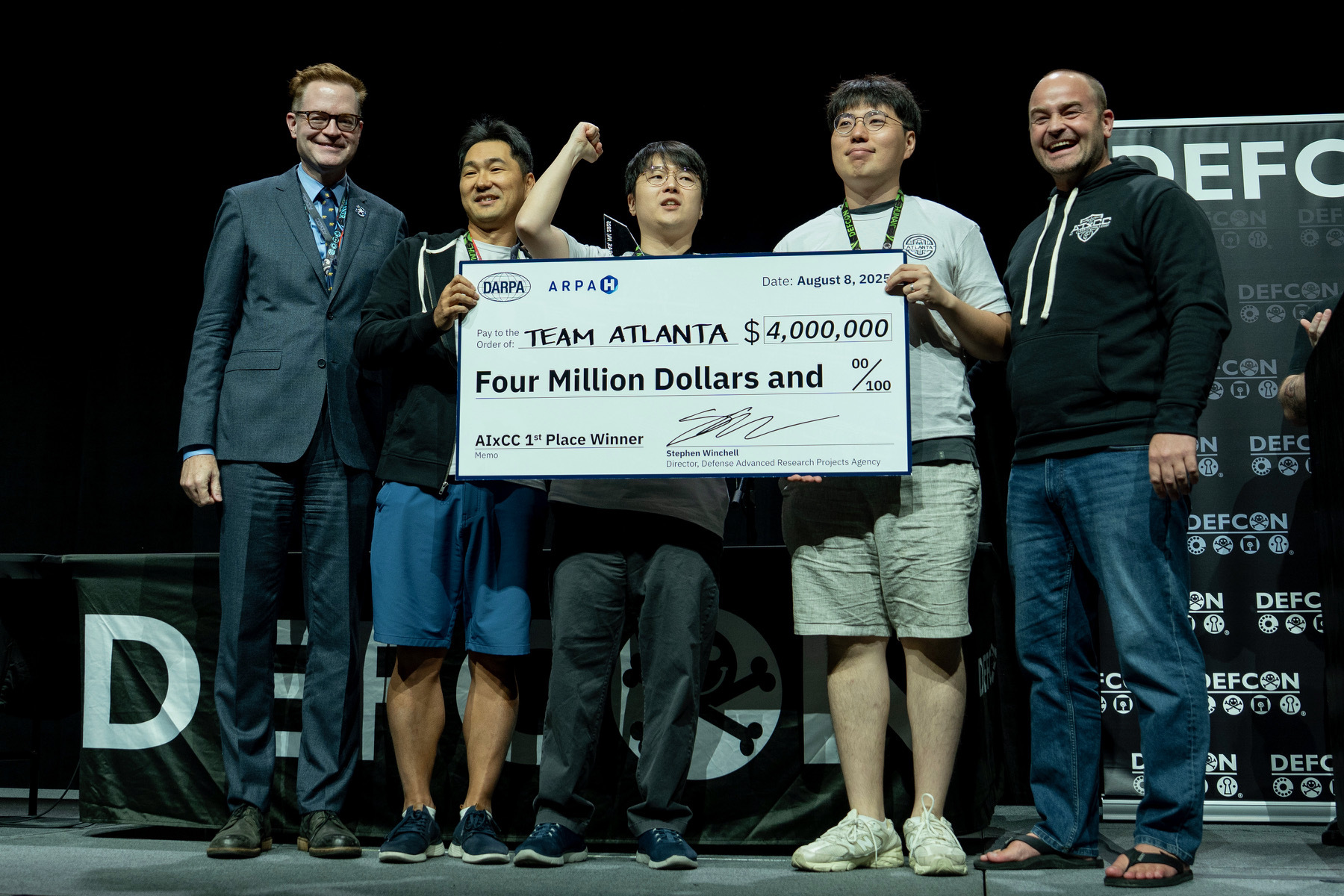

- AI can protect our most critical infrastructure. That idea was the driving force behind the two-year AI Cyber Challenge (AIxCC), which tasked teams of developers with building generative AI tools to find and fix software vulnerabilities in the code that powers everything from banks and hospitals to public utilities. The competition—run by DARPA in partnership with ARPA-H—wrapped up at this year’s DEF CON, where winners showed off autonomous AI systems capable of securing the open-source software that underpins much of the world’s critical infrastructure. The top three teams will receive $4 million, $3 million, and $1.5 million, respectively, for their performance in the finals.

- Anthropic’s Claude AI proved it can sometimes match elite hackers but still struggles on hard problems. Keane Lucas, a member of Anthropic’s Frontier Red Team, presented the fascinating case study of how the company quietly entered its Claude AI into seven major cybersecurity competitions—events typically dominated by human hackers and security pros—to see how it stacked up. Claude often landed in the top quarter of competitors and showed standout speed on simple challenges, sometimes matching elite human teams, but it lagged badly on the hardest problems. There were also quirks unique to AI—such as getting derailed by ASCII art or lapsing into philosophical rambling during long tasks. Anthropic says these experiments highlight both the offensive potential of AI (by lowering the skill and cost barriers to launching attacks) and its defensive promise, while underscoring persistent limits like long-term memory and complex reasoning.

Also: In just a few weeks, I will be headed to Park City, Utah, to participate in our annual Brainstorm Tech conference at the Montage Deer Valley! Space is limited, so if you’re interested in joining me, register here. I highly recommend: There’s a fantastic lineup of speakers, including Ashley Kramer, chief revenue officer of OpenAI; John Furner, president and CEO of Walmart U.S.; Tony Xu, founder and CEO of DoorDash; and many, many more!

With that, here’s more AI news.

Sharon Goldman

[email protected]

@sharongoldman

AI IN THE NEWS

China tells firms to avoid Nvidia H20 Chips after Trump ends ban but takes cut of revenue. According to Bloomberg, Beijing has quietly advised Chinese companies, especially those involved in government or national security projects, to steer clear of Nvidia’s H20 processors, undermining the U.S. chipmaker’s efforts to regain billions in lost China sales after the Trump administration lifted an effective ban on such exports. The guidance, delivered in recent weeks to a range of firms, stops short of an outright prohibition but signals strong official disapproval for H20 use in sensitive contexts. The news comes after President Trump announced the U.S. government will take 15% of the revenue that chipmaker Nvidia pulls in from sales in China of its H20 chips.

Students are flocking to college courses in AI. As AI transforms workplaces, growing numbers of professionals and students are pursuing advanced degrees, certificates, and training in artificial intelligence to boost skills and job prospects. The Washington Post reported that universities like the University of Texas at Austin, University of Michigan at Dearborn, University of San Diego, and MIT are seeing surging enrollment in AI programs, fueled by ChatGPT’s rise and employer demand for AI literacy. Courses range from technical training in algorithms and model-building to broader instruction in creativity, ethics, and problem-solving. Corporate partnerships, such as Meta inviting university instructors to train engineers, reflect industry urgency, while new initiatives at schools like Ohio State aim to make AI literacy universal by 2029. Workers see AI skills as a competitive edge—PwC reports wages for AI-skilled employees are 56% higher—and experts stress curiosity and adaptability over credentials alone.

Anthropic will offer Claude AI to U.S. government for $1. Reuters reported that Anthropic will offer its Claude AI model to the U.S. government for $1. The announcement comes days after OpenAI also offered ChatGPT to the U.S. government for $1, while Google’s Gemini was also added to the government’s list of approved AI vendors. “America’s AI leadership requires that our government institutions have access to the most capable, secure AI tools available,” CEO Dario Amodei said.

EYE ON AI RESEARCH

AI training to have whopping power needs, report says

According to Axios, a new report from the Electric Power Research Institute and Epoch AI reveals the staggering energy requirements for training large “frontier” AI models: By 2028, individual training projects could demand 1–2 gigawatts (GW) of power, and by 2030 that range may extend to 4–16 GW—with the high end representing nearly 1% of total U.S. electricity capacity. Overall, U.S. AI‑related power needs (covering both training and inference) are projected to surge from 5 GW today to 50 GW by 2030 according to the report, giving policymakers and hyperscale tech firms essential insights to help plan infrastructure and energy strategies despite considerable uncertainty.

FORTUNE ON AI

Exclusive: Profound raises $35M as Sequoia backs its ambitious bid to become the Salesforce of AI search —by Sharon Goldman

How deals with Apple and Trump’s Pentagon turned rare earth miner MP Materials into a red-hot stock —by Jordan Blum

OpenAI’s open-source pivot shows how U.S. tech is trying to catch up to China’s AI boom —by Nicholas Gordon

AI’s endless thirst for power is driving a natural gas boom in Appalachia—and industry stocks are booming along with it —by Jordan Blum

AI CALENDAR

Sept. 8-10: Fortune Brainstorm Tech, Park City, Utah. Apply to attend here.

Oct. 6-10: World AI Week, Amsterdam

Oct. 21-22: TedAI San Francisco. Apply to attend here.

Dec. 2-7: NeurIPS, San Diego

Dec. 8-9: Fortune Brainstorm AI San Francisco. Apply to attend here.

BRAIN FOOD

An apple for the teacher? These days, it’s AI. A new article in The Atlantic focuses on the other side of AI use in schools: Chatbots like ChatGPT may be popular with high school students, but teachers, too, are embracing AI to save time on grading, lesson planning, and paperwork, with platforms like MagicSchool AI now used by millions of U.S. educators. School districts are split—some banning AI, others integrating tools like Google’s Gemini into classrooms—but examples from Houston show the risks of poor-quality, AI-generated materials. Federal policy is pushing for widespread AI adoption, with major funding and partnerships from Microsoft, OpenAI, and Anthropic. Yet consensus is lacking on how to balance AI’s efficiencies with maintaining students’ critical thinking skills, leaving schools at a crossroads where increased reliance on AI now could entrench it deeply in future education.