Florida is the latest U.S. state to implement its own provisions around social media use, with Florida Governor Ron DeSantis signing a new bill that will ban kids aged under 14 from social media platforms entirely, while also making it mandatory that 14 and 15-year-old users gain explicit parental permission to sign up.

Which could add some new checks and balances for the major social apps, though the specific wording of the bill is interesting.

The main impetus, as noted, is to stop kids from using social media entirely, in order to protect them from the “harms of social media” interaction.

Social platforms will be required to terminate the accounts of people under 14, as well as those of users aged under 16 who don’t have parental consent. And that seemingly applies to dedicated, underage-focused experiences as well, including TikTok’s younger users setting.

Which could prove problematic in itself, as there are no perfect measures for detecting underage users who may have lied about their age at sign-up. Various systems have been put in place to improve this, while the bill also calls on platforms to provide improved verification measures to enforce this element.

Which some privacy groups have flagged as a concern, as it could reduce anonymity in social platform usage.

Whenever an underage user account is detected, the platforms will have 10 business days to remove it, or they could face fines of up to $10,000 per violation.

The specific parameters of the bill state that the new rules will apply to any online platform of which 10% or more of its daily active users are younger than 16.

There’s also a specific provision around the variance between social platforms and messaging apps, which aren’t subject to these new rules:

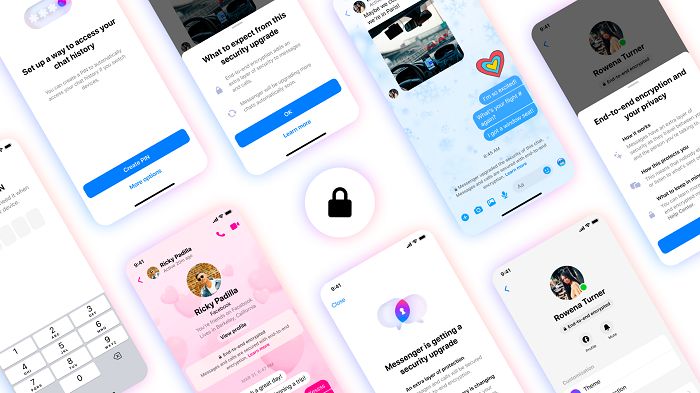

“The term does not include an online service, website, or application where the exclusive function is e-mail or direct messaging, consisting of text, photographs, pictures, images, or videos shared only between the sender and the recipients, without displaying or posting publicly or to other users not specifically identified as the recipients by the sender.”

That could mean that Meta’s “Messenger for Kids” is excluded, while also, depending on your definition, enabling Snapchat to avoid restriction.

Which seems like a gap, especially given Snapchat’s popularity with younger audiences, but again, the specifics will be clarified over time.

It’s another example of a U.S. state going it alone on its social media rules, with both Utah and Arkansas also implementing rules that impose restrictions on social media use for kids. In a related push, Montana sought to ban TikTok entirely within its borders last year, though that was less about protecting kids and more due to concerns around its links to China, and the potential use of the app as a spying tool for the C.C.P. Montana’s TikTok ban was rejected by the District Court back in December.

The concern here is that by implementing regional rules, each state could eventually be tied to specific parameters, as implemented by the ruling party at the time, and there are wildly varying views on the potential harm of social media and online interaction.

China, for example, has implemented tough restrictions on online game time among kids, as well as caps on in-app spending, in an effort to curb negative behaviors associated with gaming addiction. Heavy-handed approaches like this, as initiated by regional governments, could have a big impact on the broader sector, forcing major shifts as a result.

And really, as Meta has noted, such restrictions should be implemented on a broader national level. Like, say, via the app stores that facilitate app access in the first place.

Late last year, Meta put forward its case that the app stores should take on a bigger role in keeping young kids out of adult-focused apps, or at the least, in ensuring that parents are aware of such apps before their kids download them.

As per Meta:

“US states are passing a patchwork of different laws, many of which require teens (of varying ages) to get their parent’s approval to use certain apps, and for everyone to verify their age to access them. Teens move interchangeably between many websites and apps, and social media laws that hold different platforms to different standards in different states will mean teens are inconsistently protected.”

Indeed, by forcing the app providers to include age verification, as well as parental consent for downloads by kids, that could ensure greater uniformity, and improved protection, via systems that would enable broader controls, without each platform having to initiate its own processes on the same.

So far, that pitch doesn’t seem to be resonating, but it would, at least in theory, solve a lot of key challenges on this front.

And without a national approach, we’re left to regional variances, which could become more restrictive over time, depending on how each local government approaches such.

Which means more bills, more debates, more regional rule changes, and more custom processes within each app for each region.

Broader policy seems like a better approach, but coordination is also a challenge.