The FTC has scored a significant victory against deceptive practices by social media apps, albeit against a smaller player in the space.

Today, the FTC announced that private messaging app NGL, which became a hit with teen users back in 2022, will be fined $5 million and banned from allowing people under 18 to use the app at all, due to deceptive practices and regulatory violations.

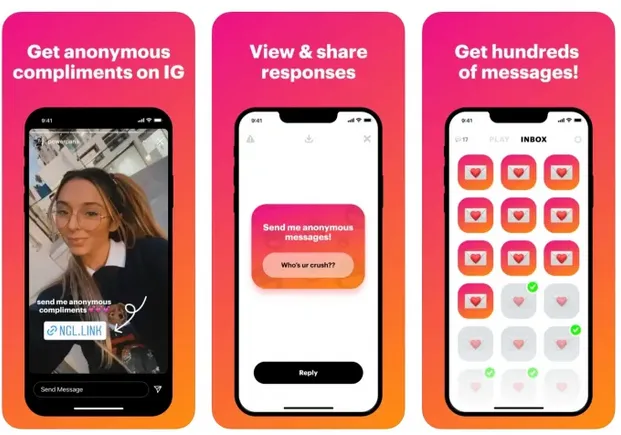

NGL’s key value proposition is that it enables users to submit anonymous replies to questions posed by users of the app. Users can share their NGL questions on IG and Snapchat, prompting recipients to submit their responses via the NGL platform. Users can then view those responses without any info on who sent them. If they want to know who actually sent each message, however, they can pay a monthly subscription fee for full functionality.

The FTC found that NGL had acted deceptively in several ways, first by sending fake responses when real humans didn’t reply.

As per the FTC:

“Many of those anonymous messages that users were told came from people they knew – for example, “one of your friends is hiding s[o]mething from u” – were actually fakes sent by the company itself in an effort to induce additional sales of the NGL Pro subscription to people eager to learn the identity of who had sent the message.”

So if you paid, you might only discover that a bot had sent you the message.

The FTC also alleges that NGL’s UI did not clearly state that its charges for revealing a sender’s identity were a recurring fee, as opposed to a one-off cost.

Even more concerning, the FTC found that NGL failed to implement adequate protections for teens, despite touting “world class AI content moderation” that enabled them to “filter out harmful language and bullying.”

“The company’s much vaunted AI often failed to filter out harmful language and bullying. It shouldn’t take artificial intelligence to anticipate that teens hiding behind the cloak of anonymity would send messages like “You’re ugly,” “You’re a loser,” “You’re fat,” and “Everyone hates you.” But a media outlet reported that the app failed to screen out hurtful (and all too predictable) messages of that sort.”

The FTC was particularly pointed about the proclaimed use of AI to reassure users (and parents):

“The defendants’ unfortunately named “Safety Center” accurately anticipated the apprehensions parents and educators would have about the app and attempted to assure them with promises that AI would solve the problem. Too many companies are exploiting the AI buzz du jour by making false or deceptive claims about their supposed use of artificial intelligence. AI-related claims aren’t puffery. They’re objective representations subject to the FTC’s long-standing substantiation doctrine.”

This is the first time the FTC has imposed a full ban on youngsters using a messaging app, and it could help the agency establish a new precedent for teen safety measures across the industry.

The FTC is also looking to impose expanded restrictions on how Meta uses teen user data, while seeking to establish more definitive rules around ads targeted at users under 13.

Meta is already implementing more restrictions on this front, stemming from both EU law changes and FTC proposals. But the agency is seeking more concrete enforcement measures, along with industry-standard processes for verifying user ages.

In NGL’s case, some of the violations were particularly blatant, which drew increased scrutiny. But the ruling does open up more scope for expanded measures in other apps.

So even if you don’t use NGL, and have never been exposed to the app, you could still feel the ripple effects.