Google’s new Gemini AI model is getting a mixed reception after its big debut yesterday, but users may have less confidence in the company’s tech or integrity after finding out that the most impressive demo of Gemini was pretty much faked.

A video called “Hands-on with Gemini: Interacting with multimodal AI” hit a million views over the last day, and it’s not hard to see why. The impressive demo “highlights some of our favorite interactions with Gemini,” showing how the multimodal model (that is, it understands and mixes language and visual understanding) can be flexible and responsive to a variety of inputs.

To begin with, it narrates an evolving sketch of a duck from a squiggle to a fully colored-in drawing, then evinces surprise (“What the quack!”) when seeing a toy blue duck. It then responds to various voice queries about that toy, and the demo moves on to other show-off moves, like tracking a ball in a cup-switching game, recognizing shadow puppet gestures, reordering sketches of planets, and so on.

It’s all very responsive, too, though the video does caution that “latency has been reduced and Gemini outputs have been shortened.” So they skip a hesitation here and an overlong answer there, got it. All in all it was a pretty mind-blowing show of force in the domain of multimodal understanding. My own skepticism that Google could ship a contender took a hit when I watched the hands-on.

Just one problem: the video isn’t real. “We created the demo by capturing footage in order to test Gemini’s capabilities on a wide range of challenges. Then we prompted Gemini using still image frames from the footage, and prompting via text.” (Parmy Olson at Bloomberg was the first to report the discrepancy.)

So although it might kind of do the things Google shows in the video, it didn’t, and maybe couldn’t, do them live and in the way they implied. In actuality, it was a series of carefully tuned text prompts with still images, clearly selected and shortened to misrepresent what the interaction is actually like. You can see some of the actual prompts and responses in a related blog post, which, to be fair, is linked in the video description, albeit below the “…more”.

On one hand, Gemini really does appear to have generated the responses shown in the video. And who wants to see some housekeeping commands like telling the model to flush its cache? But viewers are misled about the speed, accuracy, and fundamental mode of interaction with the model.

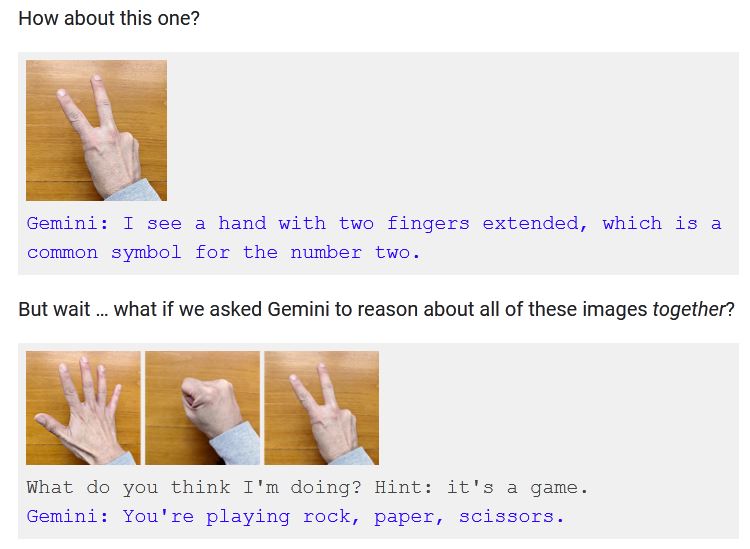

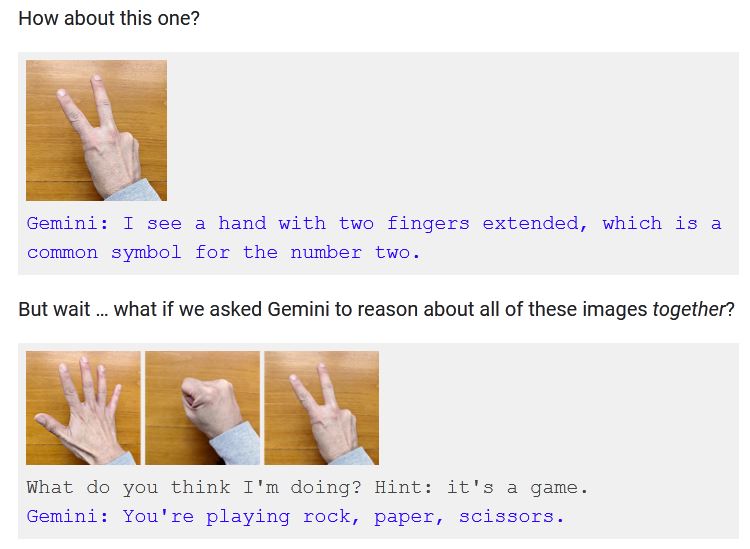

For instance, at 2:45 in the video, a hand is shown silently making a series of gestures. Gemini quickly responds “I know what you’re doing! You’re playing Rock, Paper, Scissors!”

Image Credits: Google/YouTube

But the very first thing in the documentation of the capability is how the model doesn’t reason based on seeing individual gestures. It must be shown all three gestures at once and prompted: “What do you think I’m doing? Hint: it’s a game.” It responds, “You’re playing rock, paper, scissors.”

Image Credits: Google

Despite the similarity, these don’t feel like the same interaction. They feel like fundamentally different interactions, one an intuitive, wordless evaluation that captures an abstract idea on the fly, another an engineered and heavily hinted interaction that demonstrates limitations as much as capabilities. Gemini did the latter, not the former. The “interaction” shown in the video didn’t happen.

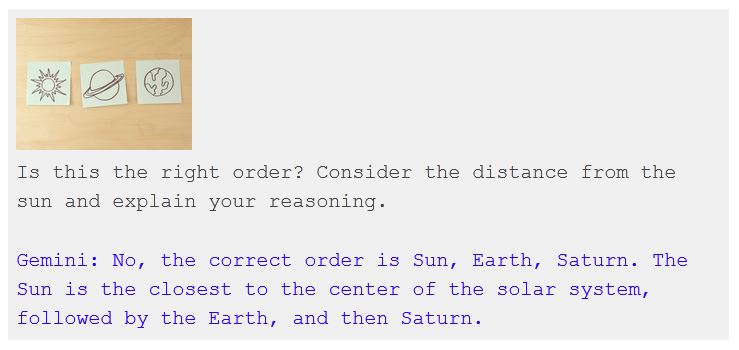

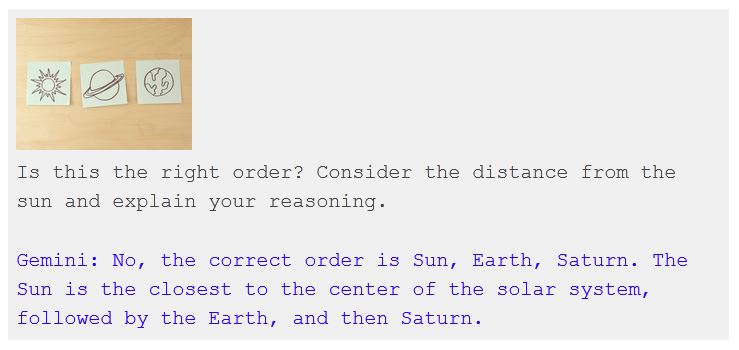

Later, three sticky notes with doodles of the Sun, Saturn, and Earth are placed on the surface. “Is this the correct order?” Gemini says no, it goes Sun, Earth, Saturn. Correct! But in the actual (again, written) prompt, the question is “Is this the right order? Consider the distance from the sun and explain your reasoning.”

Image Credits: Google

Did Gemini get it right? Or did it get it wrong, and need a bit of help to produce an answer they could put in a video? Did it even recognize the planets, or did it need help there as well?

These examples may or may not seem trivial to you. After all, recognizing hand gestures as a game so quickly is actually really impressive for a multimodal model! So is making a judgment call on whether a half-finished picture is a duck or not! Although now, since the blog post lacks an explanation for the duck sequence, I’m beginning to doubt the veracity of that interaction as well.

Now, if the video had said at the start, “This is a stylized representation of interactions our researchers tested,” no one would have batted an eye; we kind of expect videos like this to be half factual, half aspirational.

But the video is called “Hands-on with Gemini” and when they say it shows “our favorite interactions,” it’s implicit that the interactions we see are those interactions. They were not. Sometimes they were more involved; sometimes they were totally different; sometimes they don’t really appear to have happened at all. We’re not even told what model it is: the Gemini Pro one people can use now, or (more likely) the Ultra version slated for release next year?

Should we have assumed that Google was only giving us a flavor video when they described it the way they did? Perhaps then we should assume all capabilities in Google AI demos are being exaggerated for effect. I write in the headline that this video was “faked.” At first I wasn’t sure if this harsh language was justified. But this video simply doesn’t reflect reality. It’s fake.

Google says that the video “shows real outputs from Gemini,” which is true, and that “we made a few edits to the demo (we’ve been upfront and transparent about this),” which isn’t. It isn’t a demo, not really, and the video shows very different interactions from those created to inform it.

Update: In a social media post made after this article was published, Google DeepMind’s VP of Research Oriol Vinyals showed a bit more of how the sausage was made. “The video illustrates what the multimodal user experiences built with Gemini could look like. We made it to inspire developers.” (Emphasis mine.) Interestingly, it shows a pre-prompting sequence that lets Gemini answer the planets question without the Sun hinting (though it does tell Gemini it’s an expert on planets and to consider the sequence of objects pictured).

Perhaps I’ll eat crow when, next week, the AI Studio with Gemini Pro is made available to experiment with. And Gemini may well turn into a powerful AI platform that genuinely rivals OpenAI and others. But what Google has done here is poison the well. How can anyone trust the company when they claim their model does something now? They were already limping behind the competition. Google may have just shot itself in the other foot.