It doesn’t take a lot to get GenAI spouting mistruths and untruths.

This past week provided an example, with Microsoft’s and Google’s chatbots declaring a Super Bowl winner before the game even started. The real problems start, though, when GenAI’s hallucinations get harmful: endorsing torture, reinforcing ethnic and racial stereotypes and writing persuasively about conspiracy theories.

A growing number of vendors, from incumbents like Nvidia and Salesforce to startups like CalypsoAI, offer products they claim can mitigate unwanted, toxic content from GenAI. But they’re black boxes. Short of testing each independently, it’s impossible to know how these hallucination-fighting products compare, and whether they actually deliver on their claims.

Shreya Rajpal saw this as a major problem and founded a company, Guardrails AI, to try to solve it.

“Most organizations … are struggling with the same set of problems around responsibly deploying AI applications and struggling to figure out what’s the best and most efficient solution,” Rajpal told TechCrunch in an email interview. “They often end up reinventing the wheel in terms of managing the set of risks that are important to them.”

To Rajpal’s point, surveys suggest that complexity (and, by extension, risk) is a top barrier standing in the way of organizations embracing GenAI.

A recent poll from Intel subsidiary Cnvrg.io found that compliance and privacy, reliability, the high cost of implementation and a lack of technical skills were concerns shared by around a fourth of companies implementing GenAI apps. In a separate survey from Riskonnect, a risk management software provider, over half of executives said they were worried about employees making decisions based on inaccurate information from GenAI tools.

Rajpal, who previously worked at self-driving startup Drive.ai and, after Apple’s acquisition of Drive.ai, in Apple’s special projects group, co-founded Guardrails with Diego Oppenheimer, Safeer Mohiuddin and Zayd Simjee. Oppenheimer formerly led Algorithmia, a machine learning operations platform, while Mohiuddin and Simjee held tech and engineering lead roles at AWS.

In some ways, what Guardrails offers isn’t all that different from what’s already on the market. The startup’s platform acts as a wrapper around GenAI models, specifically open source and proprietary (e.g. OpenAI’s GPT-4) text-generating models, to make those models ostensibly more trustworthy, reliable and secure.

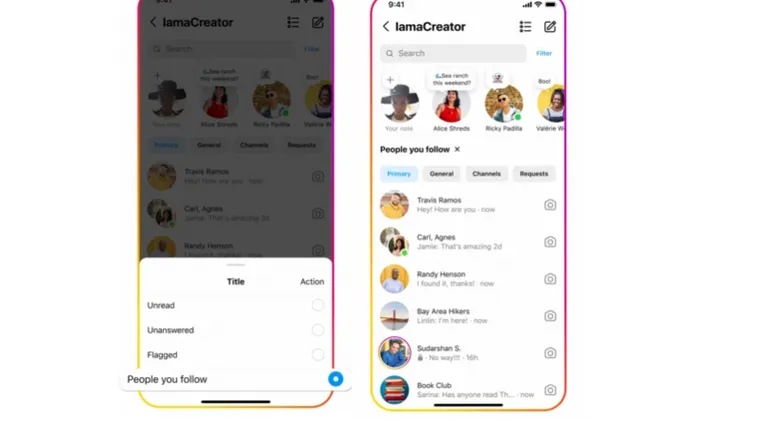

Image Credits: Guardrails AI

But where Guardrails differs is its open source business model (the platform’s codebase is available on GitHub, free to use) and its crowdsourced approach.

Through a marketplace called the Guardrails Hub, Guardrails lets developers submit modular components called “validators” that probe GenAI models for certain behavioral, compliance and performance metrics. Validators can be deployed, repurposed and reused by other devs and Guardrails customers, serving as the building blocks for custom GenAI model-moderating solutions.

“With the Hub, our goal is to create an open forum to share knowledge and find the most effective way to [further] AI adoption, but also to build a set of reusable guardrails that any organization can adopt,” Rajpal said.

Validators in the Guardrails Hub range from simple rule-based checks to algorithms that detect and mitigate issues in models. There are about 50 at present, ranging from hallucination and policy violation detectors to filters for proprietary information and insecure code.

“Most companies will do broad, one-size-fits-all checks for profanity, personally identifiable information and so on,” Rajpal said. “However, there’s no one, universal definition of what constitutes acceptable use for a specific organization and team. There’s org-specific risks that need to be tracked — for example, comms policies across organizations are different. With the Hub, we enable people to use the solutions we provide out of the box, or use them to get a strong starting point solution that they can further customize for their particular needs.”
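To make the idea concrete, here is a minimal, hypothetical Python sketch of what wrapping a model with validators looks like in principle. It does not use Guardrails’ actual API. The names `ValidationResult`, `no_email_addresses`, `make_banned_terms_validator`, `guarded_call` and `fake_model` are invented for illustration. The sketch pairs a generic rule-based check (a crude email-address pattern standing in for PII detection) with an org-specific one (a banned-terms list standing in for a comms policy). Any model output that fails either check is withheld.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical illustration only: this is NOT the Guardrails AI API.


@dataclass
class ValidationResult:
    validator: str    # name of the check that ran
    passed: bool      # True if the text cleared the check
    detail: str = ""  # what triggered a failure, if anything


# In this sketch, a validator is simply a function from model output to a result.
Validator = Callable[[str], ValidationResult]

# Crude email-address pattern, standing in for a real PII detector.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def no_email_addresses(text: str) -> ValidationResult:
    """Generic, one-size-fits-all rule-based check."""
    found = EMAIL_RE.findall(text)
    return ValidationResult("no_email_addresses", not found, ", ".join(found))


def make_banned_terms_validator(terms: List[str]) -> Validator:
    """Builds an org-specific check (e.g. a comms policy) from a reusable template."""
    def check(text: str) -> ValidationResult:
        lowered = text.lower()
        hits = [t for t in terms if t.lower() in lowered]  # case-insensitive match
        return ValidationResult("banned_terms", not hits, ", ".join(hits))
    return check


def guarded_call(model: Callable[[str], str], prompt: str,
                 validators: List[Validator]) -> Tuple[Optional[str], List[ValidationResult]]:
    """Wraps a text-generating model: generate, run every validator, withhold failing output."""
    output = model(prompt)
    results = [v(output) for v in validators]
    if all(r.passed for r in results):
        return output, results
    return None, results


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a call to a real LLM.
    return "Sure! Contact sales@example.com about Project Falcon."


validators = [no_email_addresses, make_banned_terms_validator(["Project Falcon"])]
output, results = guarded_call(fake_model, "Who should I contact?", validators)
print(output)  # None
for r in results:
    print(r.validator, "passed" if r.passed else f"failed: {r.detail}")
# no_email_addresses failed: sales@example.com
# banned_terms failed: Project Falcon
```

Production validators, such as hallucination detectors, are far more involved than a regex. The sketch shows only the shape of the approach: small, composable checks that can be mixed, customized and reused around any model call.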

A hub for model guardrails is an intriguing idea. But the skeptic in me wonders whether devs will bother contributing to a platform (and a nascent one at that) without the promise of some form of compensation.

Rajpal is of the optimistic opinion that they will, if for no other reason than recognition and the chance to selflessly help the industry build toward “safer” GenAI.

“The Hub allows developers to see the types of risks other enterprises are encountering and the guardrails they’re putting in place to solve for and mitigate those risks,” she added. “The validators are an open source implementation of those guardrails that orgs can apply to their use cases.”

Guardrails AI, which isn’t yet charging for any services or software, recently raised $7.5 million in a seed round led by Zetta Venture Partners with participation from Factory, Pear VC, Bloomberg Beta, GitHub Fund and angels including renowned AI expert Ian Goodfellow. Rajpal says the proceeds will be put toward expanding Guardrails’ six-person team and additional open source projects.

“We talk to so many people (enterprises, small startups and individual developers) who are stuck on being able [to] ship GenAI applications because of lack of assurance and risk mitigation needed,” she continued. “This is a novel problem that hasn’t existed at this scale, because of the advent of ChatGPT and foundation models everywhere. We want to be the ones to solve this problem.”