The funding climate for AI chip startups, once as sunny as a mid-July day, is beginning to cloud over as Nvidia asserts its dominance.

According to a recent report, U.S. chip companies raised just $881 million from January 2023 to September 2023, down from $1.79 billion in the first three quarters of 2022. AI chip firm Mythic ran out of cash in 2022 and was nearly forced to halt operations, while Graphcore, a once-well-capitalized rival, now faces mounting losses.

But one startup appears to have found success in the ultra-competitive, and increasingly crowded, AI chip space.

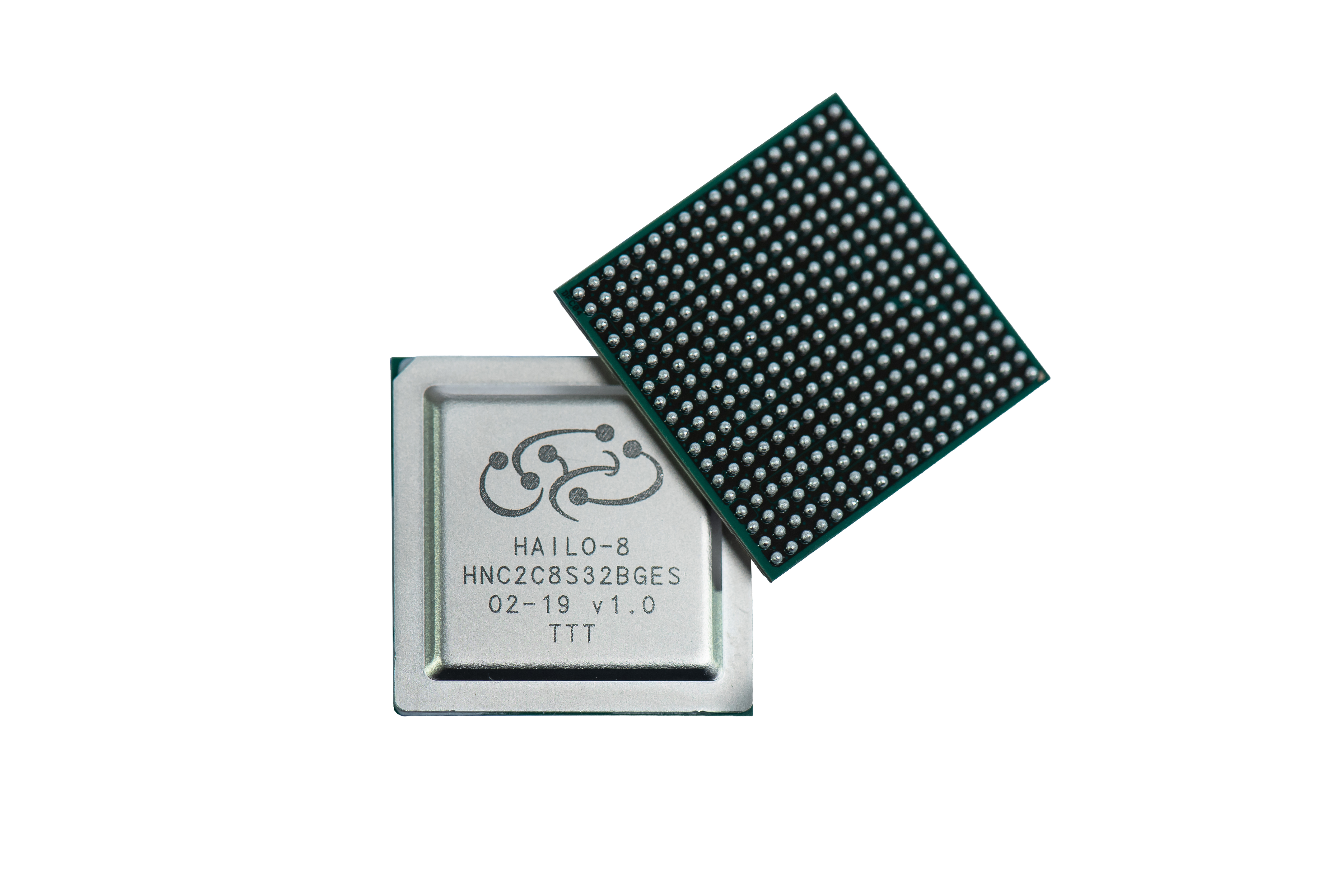

Hailo, co-founded in 2017 by Orr Danon and Avi Baum (previously CTO for wireless connectivity at the microprocessor outfit Texas Instruments), designs specialized chips to run AI workloads on edge devices. Hailo’s chips execute AI tasks with lower memory usage and power consumption than a typical processor, making them a strong candidate for compact, offline and battery-powered devices such as cars, smart cameras and robotics.

“I co-founded Hailo with the mission to make high-performance AI available at scale outside the realm of data centers,” Danon told TechCrunch. “Our processors are used for tasks such as object detection, semantic segmentation and so on, as well as for AI-powered image and video enhancement. More recently, they’ve been used to run large language models (LLMs) on edge devices including personal computers, infotainment electronic control units and more.”

Many AI chip startups have yet to land one major contract, let alone dozens or hundreds. But Hailo has over 300 customers today, Danon claims, in industries such as automotive, security, retail, industrial automation, medical devices and defense.

In a bet on Hailo’s future prospects, a cohort of financial backers including Israeli businessman Alfred Akirov, automotive importer Delek Motors and the VC platform OurCrowd invested $120 million in Hailo this week, an extension to the company’s Series C. Danon said that the new capital will “enable Hailo to leverage all opportunities in the pipeline” while “setting the stage for long-term growth.”

“We’re strategically positioned to bring AI to edge devices in ways that will significantly expand the reach and impact of this remarkable new technology,” Danon said.

Now, you might be wondering: Does a startup like Hailo really stand a chance against chip giants like Nvidia, and to a lesser extent Arm, Intel and AMD? One expert, Christos Kozyrakis, Stanford professor of electrical engineering and computer science, thinks so. He believes accelerator chips like Hailo’s will become “absolutely necessary” as AI proliferates.

“The energy efficiency gap between CPUs and accelerators is too large to ignore,” Kozyrakis told TechCrunch. “You use the accelerators for efficiency with key tasks (e.g., AI) and have a processor or two on the side for programmability.”

Kozyrakis does see longevity presenting a challenge to Hailo’s leadership, for example if the AI model architectures its chips are designed to run efficiently fall out of vogue. Software support could also be an issue, Kozyrakis says, if a critical mass of developers aren’t willing to learn the tooling built around Hailo’s chips.

“Most of the challenges where it concerns custom chips are in the software ecosystem,” Kozyrakis said. “This is where Nvidia, for instance, has a huge advantage over other companies in AI, as they’ve been investing in software for their architectures for 15-plus years.”

Nevertheless, with $340 million in the bank and a workforce numbering around 250, Danon is feeling confident about Hailo’s path forward, at least in the short term. He sees the startup’s technology addressing many of the challenges companies encounter with cloud-based AI inference, particularly latency, cost and scalability.

“Traditional AI models rely on cloud-based infrastructure, often suffering from latency issues and other challenges,” Danon said. “They’re incapable of real-time insights and alerts, and their dependency on networks jeopardizes reliability and integration with the cloud, which poses data privacy concerns. Hailo is addressing these challenges by offering solutions that operate independently of the cloud, thus making them able to handle much higher amounts of AI processing.”

Curious about Danon’s perspective, I asked about generative AI and its heavy reliance on the cloud and remote data centers. Surely Hailo sees the current top-down, cloud-centric model (e.g., OpenAI’s modus operandi) as an existential threat?

Danon said that, on the contrary, generative AI is driving new demand for Hailo’s hardware.

“In recent years, we’ve seen a surge in demand for edge AI applications in most industries ranging from airport security to food packaging,” he said. “The new surge in generative AI is further boosting this demand, as we’re seeing requests to process LLMs locally by customers not only in the compute and automotive industries, but also in industrial automation, security and others.”

How about that.