There are numerous the reason why house robots have discovered little success post-Roomba. Pricing, practicality, type issue and mapping have all contributed to failure after failure. Even when some or all of these are addressed, there stays the query of what occurs when a system makes an inevitable mistake.

This has been a degree of friction on the economic stage, too, however huge corporations have the assets to correctly handle issues as they come up. We are able to’t, nevertheless, anticipate shoppers to be taught to program or rent somebody who may also help any time a problem arrives. Fortunately, this can be a nice use case for LLMs (giant language fashions) within the robotics house, as exemplified by new analysis from MIT.

A study set to be introduced on the Worldwide Convention on Studying Representations (ICLR) in Might purports to convey a little bit of “common sense” into the method of correcting errors.

“It turns out that robots are excellent mimics,” the college explains. “But unless engineers also program them to adjust to every possible bump and nudge, robots don’t necessarily know how to handle these situations, short of starting their task from the top.”

Historically, after they encounter points, robots will exhaust their pre-programmed choices earlier than requiring human intervention. That is an particularly huge concern in an unstructured setting like a house, the place any numbers of modifications to the established order can adversely influence a robotic’s means to perform.

Researchers behind the research observe that whereas imitation studying (studying to do a activity by way of statement) is fashionable on this planet of house robotics, it typically can’t account for the numerous small environmental variations that may intervene with common operation, thus requiring a system to restart from sq. one. The brand new analysis addresses this, partially, by breaking demonstrations into smaller subsets, quite than treating them as a part of a steady motion.

This, in flip, is the place LLMs enter the image, eliminating the requirement for the programmer to individually label and assign the quite a few subactions.

“LLMs have a way to tell you how to do each step of a task, in natural language. A human’s continuous demonstration is the embodiment of those steps, in physical space,” says grad pupil Tsun-Hsuan Wang. “And we wanted to connect the two, so that a robot would automatically know what stage it is in a task, and be able to replan and recover on its own.”

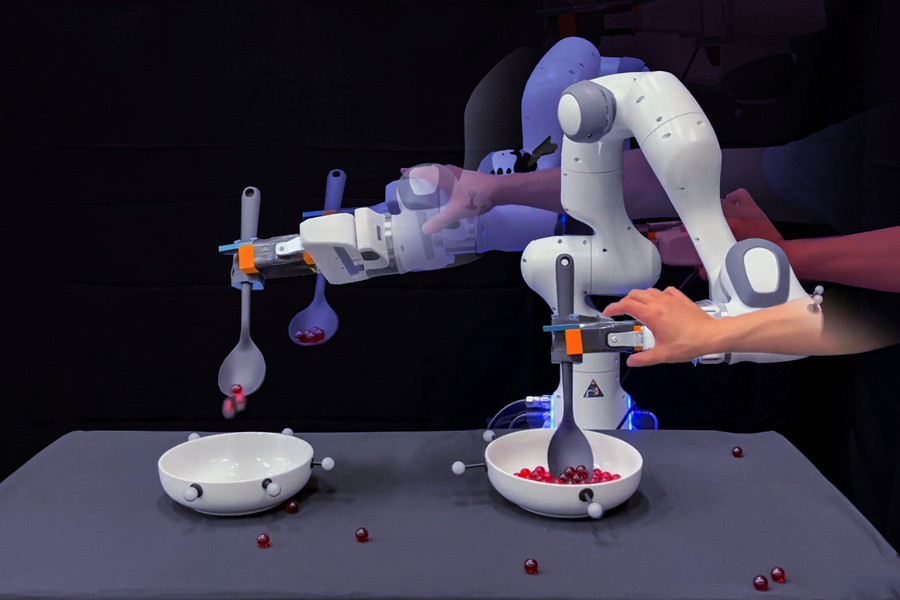

The actual demonstration featured within the research entails coaching a robotic to scoop marbles and pour them into an empty bowl. It’s a easy, repeatable activity for people, however for robots, it’s a mix of assorted small duties. The LLMs are able to itemizing and labeling these subtasks. Within the demonstrations, researchers sabotaged the exercise in small methods, like bumping the robotic off track and knocking marbles out of its spoon. The system responded by self-correcting the small duties, quite than ranging from scratch.

“With our method, when the robot is making mistakes, we don’t need to ask humans to program or give extra demonstrations of how to recover from failures,” Wang provides.

It’s a compelling methodology to assist one keep away from fully shedding their marbles.