2026 is going to be the year that AR comes into the mainstream, and Meta’s looking to cement its position as the leader in the digital wearables field, announcing a range of new Meta Display glasses features at CES.

Meta’s Display glasses are not full AR, but they’re the first device from Meta that includes a heads-up display, and they come with Meta’s new wrist control device, which it hopes will establish new parameters for eventual AR interaction within real-world environments.

And Meta’s Display glasses have seen massive demand, so much so that Meta’s had to pause its planned international expansion of the device while it works to boost production.

So, even without full AR, interest in Meta’s AI-powered glasses is rising, and these new additions will bring more functionality to the already impressive device.

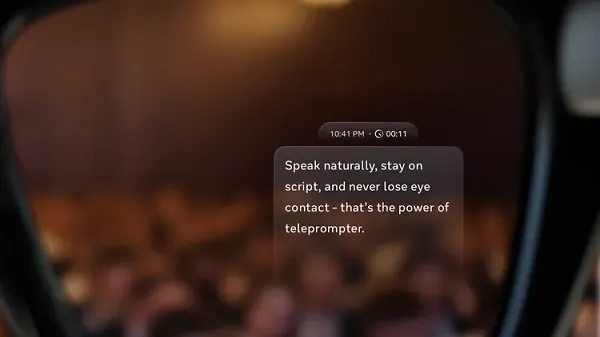

First off, Meta’s announced that it will begin a phased rollout of a new teleprompter feature, which will give users the option to display speech text on the in-lens screen.

As you can see in this example, the teleprompter feature will give presenters a much easier way to read a speech, without losing eye contact with the audience.

As explained by Meta:

“The discreet teleprompter is seamlessly embedded inside your display glasses, with customizable text-based cards and simple navigation with the Meta Neural Band. You can move through your presentation at your own speed, with the confidence of knowing your notes are literally right in front of you.”

It’s another smart addition, which will no doubt appeal to many users.

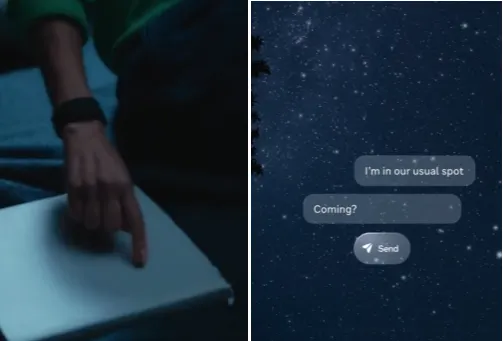

Meta’s also rolling out its new messaging process, which enables Display users to write words with their finger, with the Neural Band detecting the relevant signals and translating those finger movements into text.

As shown in this example, the process will enable Display users to send messages on WhatsApp and Messenger by writing with their finger on any surface.

Which could be a handy way to stay in the moment, and ensure that you’re still keeping your key contacts up to date.

Meta says that availability of the feature will initially be limited to people in its Early Access Program in the US, with more regions to follow.

Meta’s also expanding its pedestrian navigation feature to more cities (now 32 in total), while it’s also partnering with Garmin on a new process that will connect the Meta Neural Band with Garmin’s “Unified Cabin” suite of in-vehicle technology.

Which will then enable passengers to play games, and navigate the Unified Cabin UI, scrolling to select an app and pinching to launch it.

I mean, presumably drivers won’t be looking to control this, as that seems like a safety risk. But the idea is that, through expanded connectivity, Meta’s Display device and Neural Band will eventually be able to link into more usage options and types, which will then make them even more valuable tools for digital interaction.

Which could be even more significant in future, as Meta looks to expand its wearables push.

If Meta can establish partnerships with other tools, and facilitate control functionality from the band, that could help to further solidify this as the must-have tech device for the next generation.

Already, the Display device is an impressive offering, and with Meta also looking to parlay that into full AR glasses, which will likely be available next year, it’s hard to see how others will be able to beat out Meta for superiority in this race.

And if glasses become the new phones, as Zuckerberg predicts, then all of these refinements and updates will play a part in his broader communication takeover plans.