Meta is cutting off teen access to its persona-based AI chatbots while it implements a new system designed to make them safer to use, and to address concerns about teens getting harmful advice from these bots.

Which makes sense, given the concerns already raised around AI bot interaction. But really, those concerns apply to everyone, not just teen users.

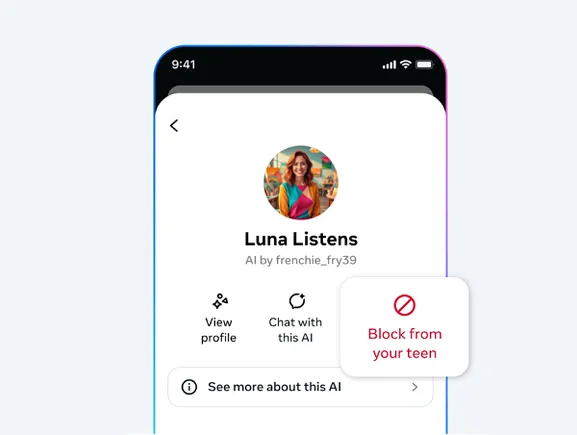

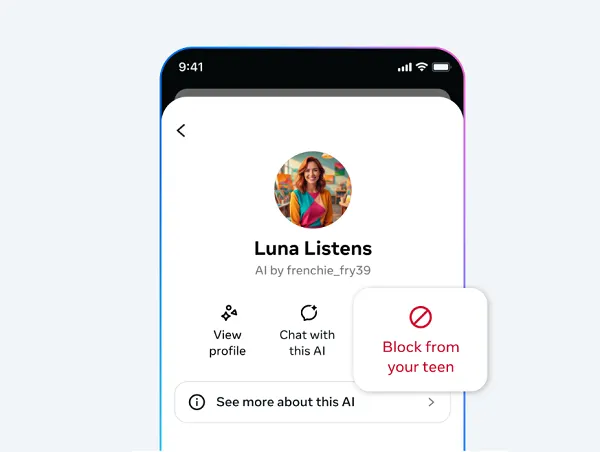

Back in October, Meta announced new options for parents to control how their kids interact with AI profiles in its apps, amid concerns that some AI chatbots were providing potentially harmful advice and guidance to teens.

Indeed, several AI chatbots had been found giving teens dangerous guidance on self-harm, disordered eating, how to buy drugs (and hide it from their parents), and more.

That sparked an FTC investigation into the potential risks of AI chatbot interaction, and in response, Meta announced additional controls that would enable parents to limit how their kids engage with its AI chatbots.

To be clear, Meta’s not alone in this. Snapchat has also had to change the rules around its “My AI” chatbot, while X has recently faced its own large-scale problems with people using its Grok chatbot to generate offensive images.

Generative AI is still in its infancy, and as such, it’s virtually impossible for developers to account for every potential misuse. Even so, these uses by teens seem pretty easy to predict, and the fact that they weren’t headed off likely reflects a broader push to prioritize rapid development over risk mitigation.

In any event, Meta’s implementing new controls over its AI bots to address this concern. But in the meantime, it’s decided to temporarily cut off teen access to its AI chatbots entirely (with the exception of its main Meta AI chatbot).

As per Meta:

“In October, we shared that we’re building new tools to give parents more visibility into how their teens use AI, and more control over the AI characters they can interact with. Since then, we’ve started building a new version of AI characters, to give people an even better experience. While we focus on developing this new version, we’re temporarily pausing teens’ access to existing AI characters globally.”

I mean, that doesn’t sound great. It suggests that more concerns have been raised, while the FTC probe is no doubt also weighing on the minds of Meta execs, with potential penalties looming over any misuse.

“Starting in the coming weeks, teens will no longer be able to access AI characters across our apps until the updated experience is ready. This will apply to anyone who has given us a teen birthday, as well as people who claim to be adults but who we suspect are teens based on our age prediction technology. This means that, when we deliver on our promise to give parents more oversight of their teens’ AI experiences, those parental controls will apply to the latest version of AI characters.”

So Meta’s tacitly admitting that there are some significant flaws in its AI chatbots, which need to be addressed. And while teens will still be able to access its main Meta AI chatbot, they won’t be able to interact with custom bot personas within its apps.

Will that have a big impact?

Well, a study published last July found that 72% of U.S. teens have already used an AI companion, with many of them now interacting regularly with their chosen virtual friends.

Combine this with Meta’s push to introduce an army of AI chatbot personas into its apps, as a means to boost engagement, and it probably does put a spanner in the works for its broader plans.

But the biggest question is how you can possibly safeguard AI interactions of this kind, given that AI bots are trained on vast amounts of internet content, and will adjust their responses based on each query.

There are many ways to trick AI chatbots into providing responses that their developers would prefer they didn’t, and because these systems are matching language patterns on such a large scale, it’s impossible to account for every possible variation.

And digital-native teens are extremely savvy, and will be looking for weak points in these systems.

As such, the move to restrict access entirely makes sense, but I’m not sure how Meta will be able to develop effective safeguards against future concerns.

So maybe just don’t implement AI chatbots in different personas that present themselves as real people.

There’s no real need for them in any context, and they arguably run counter to the “social” aspect of social media anyway, since talking to a computer isn’t “social” in the common understanding of the term.

Social media was designed to facilitate human interaction, and the push to dilute that with AI bots seems at odds with this. I get why Meta would want to do it: AI bots that engage like real people in its apps, liking posts and leaving comments, could make human users feel more appreciated, and encourage more posting and more time spent in-app.

But I don’t think the risks have been fully assessed, especially when you consider the potential impacts of people developing relationships with non-human entities, and what that might do to their perception and mental state.

Meta’s admitting that this could be a problem for teens. But really, it could be a problem across the board, and I don’t believe that the value of these bots, from a user perspective, outweighs that potential risk.

At least until we know more. At least until we’ve got some large-scale studies that show the impacts of AI bot interaction, and whether it’s a good or bad thing for people.

So why stop at banning them for teens? Why not ban them for everyone until we have more insight?

I know this won’t happen, but this seems like an admission that this is a more significant area of concern than Meta anticipated.