Amid ongoing debate about the parameters that should be set around generative AI, and the way it’s used, Meta recently partnered with Stanford’s Deliberative Democracy Lab to conduct a community forum on generative AI, in order to glean feedback from actual users as to their expectations and concerns around responsible AI development.

The forum incorporated responses from over 1,500 people from Brazil, Germany, Spain and the United States, and focused on the key issues and challenges that people see in AI development.

And there are some interesting notes around the public perception of AI, and its benefits.

The topline results, as highlighted by Meta, show that:

- The majority of participants from each country believe that AI has had a positive impact

- The majority believe that AI chatbots should be able to use past conversations to improve responses, as long as people are informed

- The majority of participants believe that AI chatbots can be human-like, as long as people are informed

The specific details, though, are interesting.

As you can see in this example, the statements that drew the most positive and negative responses differed by region. Many participants did change their opinions on these elements over the course of the process, but it’s interesting to consider where people currently see the benefits and risks of AI.

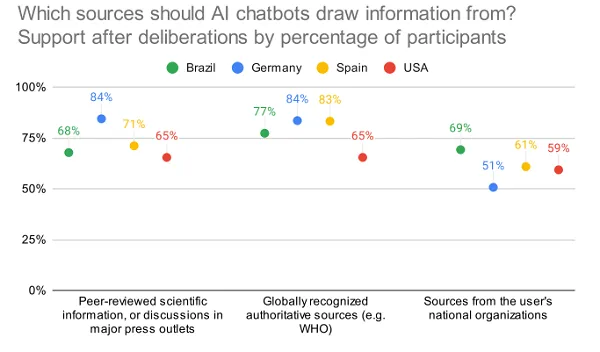

The report also looked at consumer attitudes towards AI disclosure, and where AI tools should source their information.

It’s interesting to note the relatively low approval for these sources in the U.S.

There are also insights on whether people think that users should be able to have romantic relationships with AI chatbots.

A bit weird, but it’s a logical progression, and something that will need to be considered.

Another interesting aspect of AI development, not specifically highlighted in the study, is the set of controls and weightings that each provider implements within its AI tools.

Google was recently forced to apologize for the misleading and non-representative results produced by its Gemini system, which leaned too heavily towards diverse representation, while Meta’s Llama model has also been criticized for producing more sanitized, politically correct depictions in response to certain prompts.

Examples like these highlight the influence that the models themselves can have on the outputs, which is another key concern in AI development. Should companies have such control over these tools? Does there need to be broader regulation to ensure equal representation and balance in each tool?

Most of these questions are impossible to answer for now, as we don’t yet fully understand the scope of such tools, or how they might shape broader public response. But it’s becoming clear that we do need some universal guardrails in place in order to protect users against misinformation and misleading responses.

As such, this is an interesting debate, and it’s worth considering what the results mean for broader AI development.

You can read the full forum report here.