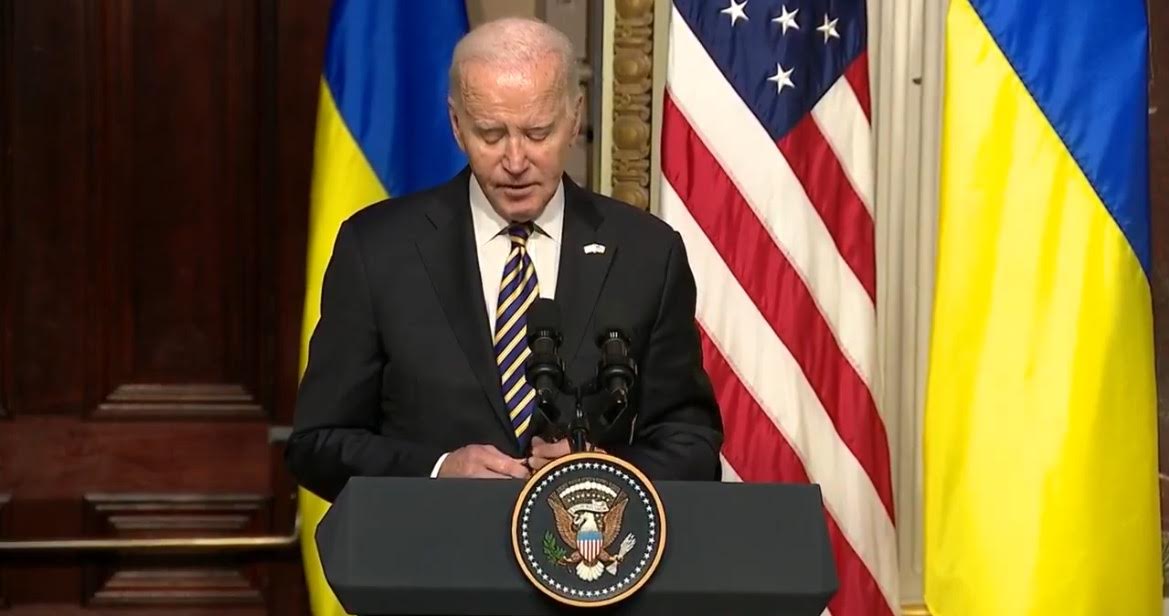

With the 2024 U.S. Presidential Election looming, and various other pending polls around the world, Meta is expanding its fact-checking program to cover Threads content as well, as it continues to see more usage in its Twitter-clone app.

As per Meta:

“Early next year, our third-party fact-checking partners will be able to review and rate false content on Threads. Currently, when a fact-checker rates a piece of content as false on Facebook or Instagram, we extend that fact-check rating to near-identical content on Threads, but fact-checkers cannot rate Threads content on its own.”

As noted, given Threads’ rising usage, this is a necessary step. The app already has over 100 million users, with seemingly many more coming to the app week by week, as more new features are rolled out, and more new communities begin to take shape within the Threads ecosystem.

On that front, Meta’s been making a big push with sports communities, which has seen it gain momentum among NBA fans in particular, with the recent In-Season Tournament marking a key milestone for NBA engagement via Threads.

But the more that usage rises, the greater the risk of misinformation and harm, which is why Meta needs to expand its fact-checking process to cover unique Threads content, as well as duplicate posts across its other apps.

In addition to this, Threads users will also soon get more control over how much sensitive content they’re exposed to in the app:

“We recently gave Instagram and Facebook users more controls, allowing them to decide how much sensitive or, if they’re in the U.S., how much fact-checked content they see on each app. Consistent with that approach, we’re also bringing these controls to Threads to give people in the U.S. the ability to choose whether they want to increase, lower or maintain the default level of demotions on fact-checked content in their Feed. If they choose to see less sensitive content on Instagram, that setting will also be applied on Threads.”

Fact-checking has become a more contentious topic this year, with X owner Elon Musk labeling much of the fact-checking conducted by social media platforms as “government censorship”, and framing it as part of a broader conspiracy to “control the narrative” and limit discussion of certain topics.

Which isn’t true, nor correct, and none of Musk’s various commissioned reports into supposed government interference at Twitter 1.0 has actually shown the broad-scale censorship that he suggested.

But at the same time, there is a need for a level of fact-checking to stop harmful misinformation from spreading. Because when you’re in charge of a platform that can amplify such content to millions, even billions of people, there’s a responsibility to measure and mitigate that harm, where possible.

Which is a more concerning aspect of some of Musk’s changes at the app, including the reinstatement of various harmful misinformation peddlers on the platform, where they can now broadcast their false information once again.

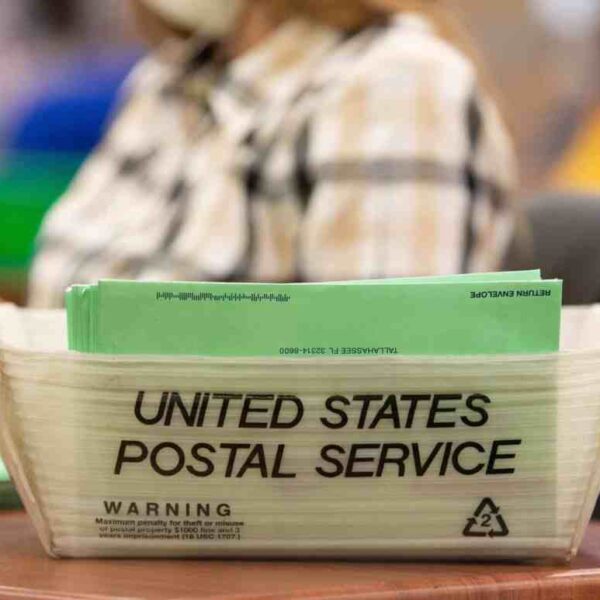

Back in 2016, in the wake of that year’s U.S. Presidential Election, there seemed to finally be a level of acknowledgment about the impacts of social media, and how social media movements can influence voting outcomes, and can thus be manipulated by ill-intentioned groups.

There were Russian manipulation campaigns for one, but other groups had also been able to coordinate and proliferate via social apps, including QAnon, The Proud Boys, ‘Boogaloo’ groups, and more.

We then also saw the rise of counter-science movements, like flat-Earthers and anti-vaxxers, the latter even leading to a resurgence of long-dormant diseases in Western nations.

Following the election, a concerted effort was made to tackle these groups across the board, and combat the spread of misinformation via social apps. But now, eight years removed, and heading into another U.S. election period, Elon Musk is handing a mic to many of them once again, which is set to cause chaos in the lead-up to the upcoming polls.

The ultimate outcome will be that misinformation will once again play a significant part in the next election cycle, as those driven by personal agendas and confirmation bias use their renewed platforms to mobilize their followers, and solidify support through expanded reach.

This is a dangerous situation, and I wouldn’t be surprised if more action is taken to stop it. Apple, for example, is reportedly considering removing X from its App Store after X’s reinstatement of Alex Jones, who’s been banned by every other platform.

That seems to be a logical step. Because we already know the harm that these groups and individuals can cause, based on spurious, selective reporting, and deliberate manipulation.

With this in mind, it’s good to see Meta taking more steps to combat the same, and this will become a much bigger issue the closer we get to each election around the world.

Because there are no “alternative facts”, and you can’t simply “do your own research” on more complex scientific matters. That’s what we rely on our experts for, and while it’s more entertaining, and engaging, to view everything as a broad conspiracy, for the most part, that’s very, very unlikely to be the case.