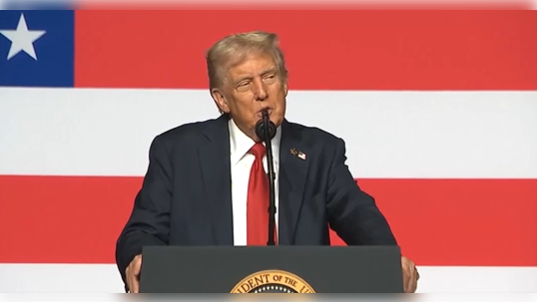

Meta’s expanding its use of facial recognition technology to combat celebrity scams, which use well-known identities to dupe unsuspecting users.

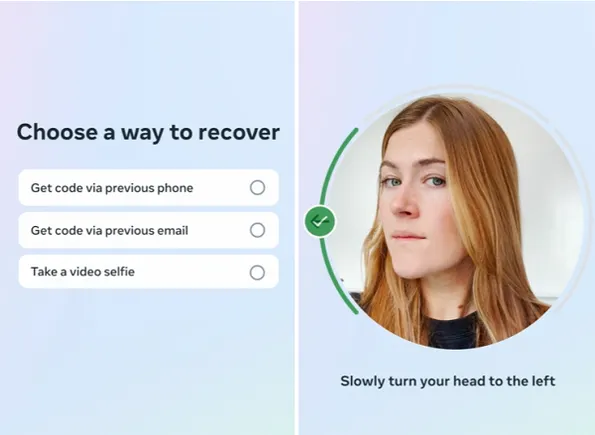

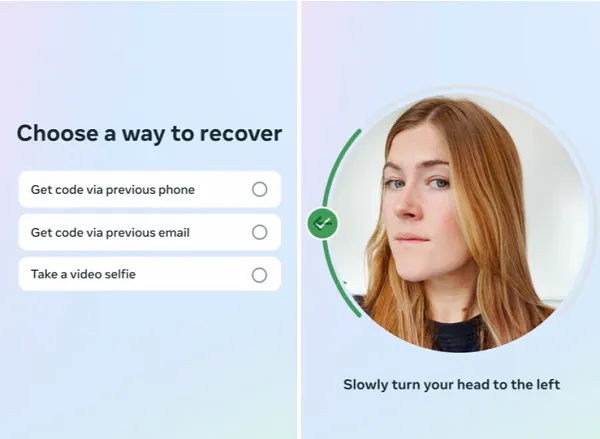

It's a process that the company has been developing for some time. Over the past year, Meta’s been experimenting with the use of facial ID for account recovery, enabling users to scan in a video selfie as a means of identification.

Meta expanded that same process to celebrity accounts in the U.K. and EU back in March, as part of its broader efforts to combat celeb-bait, which is a rising scam vector across its apps. With this, Meta’s systems are able to match the faces used in ads to the images that it has on file of high-profile users. And when there’s a match, Meta will check against the user’s official profile to confirm whether it’s a legitimate, endorsed promotion.
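To make that matching step a little more concrete, here's a minimal sketch of how a check like this could work in principle. Meta hasn't published its implementation, so everything here is an assumption for illustration: the function names, the idea of comparing faces as numeric embedding vectors, the cosine similarity measure, and the 0.9 threshold are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_matching_figure(ad_face, figures_on_file, threshold=0.9):
    """Return the best-matching public figure at or above the threshold, or None.

    `ad_face` and the values of `figures_on_file` are hypothetical face
    embeddings (lists of floats). The threshold value is an assumption.
    """
    best_name, best_score = None, threshold
    for name, reference in figures_on_file.items():
        score = cosine_similarity(ad_face, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


def review_ad(ad_face, figures_on_file, endorsed_by):
    """Classify an ad based on whether the matched figure endorsed it.

    `endorsed_by` is a hypothetical set of figures whose official profiles
    confirm the promotion as legitimate.
    """
    match = find_matching_figure(ad_face, figures_on_file)
    if match is None:
        return "no_match"
    return "allow" if match in endorsed_by else "flag_as_celeb_bait"


# Toy data: three-dimensional vectors stand in for real face embeddings.
figures = {
    "figure_a": [1.0, 0.0, 0.0],
    "figure_b": [0.0, 1.0, 0.0],
}
print(review_ad([0.99, 0.05, 0.0], figures, endorsed_by=set()))
```

In this toy version, an ad that closely resembles a protected figure gets flagged unless that figure's official profile has endorsed it, which mirrors the two-step flow described above: match first, then confirm.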

And after initially trying it out with selected celebrities in the U.K. and EU, it’s now expanding the pool of public figures covered by its celeb-bait detection.

As per Meta:

“We’re further expanding our use of facial recognition technology to crack down on suspicious accounts impersonating public figures in the EU, UK, and South Korea. In the coming months we also plan to expand our use of this technology to Instagram.”

So the process is still only being used in three markets, but Meta has seemingly gained enough input from its initial testing to view this as a viable option for detecting such scams.

Indeed, Meta says that since deploying its celeb-bait detection process, user reports of celebrity-bait ad scams have dropped by 22%.

“Our automated systems are also getting better at catching these scams before they reach people. The expansion of facial recognition technology in particular more than doubled the volume of celebrity-bait scam ads we were able to detect and remove in testing. Today, there are nearly 500,000 public figures that are being protected from having their likeness misused in these scams.”

So positive results all-round, though I would imagine that some people will still be hesitant about uploading their facial ID to Meta, for whatever purpose, and that Meta itself will still be treading carefully on this front.

Because the company’s history on this particular element hasn’t been great.

Back in 2021, Meta shut down its face recognition processes on Facebook entirely, after user backlash around the automated detection of faces in images, particularly via photo tagging, which was found to be in violation of biometric privacy laws. Meta was forced to pay out billions in settlements related to this misuse, which once again sparked a level of distrust and concern around how private companies may be profiting from user data.

There’s also broader concern about the use of facial recognition in general, with such technology being used, for example, to identify people entering sports stadiums, and then match their criminal and/or credit history in real time. In China, facial recognition technology is even being used to catch people jaywalking, and send them fines in the mail, or to further penalize people who’ve not paid parking fines. Or worse, such systems have also been used to identify Uyghur Muslims and single them out for tracking.

The nefarious use of such technology sparks much broader concern, and as such, it is interesting to see Meta taking even cautious steps towards implementing it, for any purpose.

But it does make sense, and presumably, Meta’s view is that facial recognition will eventually be more accepted either way.

And as a valuable ID measure, there is a solid use case for it, though again, I would anticipate Meta taking small, cautious steps, as it quietly expands this program over time.