Meta’s facing more questions over its CSAM enforcement efforts, after new investigations found that many instances of child abuse content are still being distributed throughout Meta’s networks.

As reported by The Wall Street Journal, independent research groups, including the Stanford Internet Observatory and the Canadian Centre for Child Protection, have tracked various instances of groups distributing child sexual abuse material across Facebook and Instagram.

As per WSJ:

“The tests show that the problem extends beyond Instagram to encompass the much broader universe of Facebook Groups, including large groups explicitly centered on sexualizing children. A Meta spokesman said the company had hidden 190,000 groups in Facebook’s search results and disabled tens of thousands of other accounts, but that the work hadn’t progressed as quickly as it would have liked.”

Even more disturbing, one investigation, which has been tracking CSAM networks on Instagram (some of which have amassed more than 10 million followers), has found that the groups have continued to live-stream videos of child sex abuse in the app even after being repeatedly reported to Meta’s moderators.

In response, Meta says that it’s now working in partnership with other platforms to improve their collective enforcement efforts, while it’s also improved its technology to identify offensive content. Meta’s also expanding its network detection efforts, which identify when adults, for example, are trying to get in touch with kids, with the process now also being deployed to stop pedophiles from connecting with each other in its apps.

But the issue remains a constant challenge, as CSAM actors work to evade detection by revising their approaches in line with Meta’s efforts.

CSAM is a critical concern for all social and messaging platforms, with Meta in particular, based on its sheer size and reach, bearing even greater responsibility on this front.

Meta’s own stats on the detection and removal of child abuse material reinforce such concerns. Throughout 2021, Meta detected and reported 22 million pieces of child abuse imagery to the National Center for Missing and Exploited Children (NCMEC). In 2020, NCMEC also reported that Facebook was responsible for 94% of the 69 million child sex abuse images reported by U.S. technology companies.

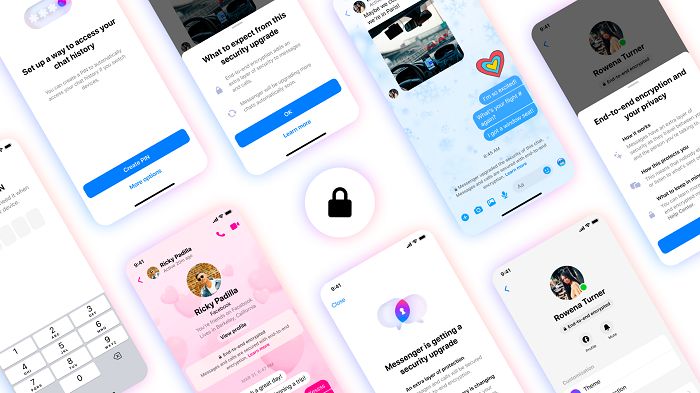

Clearly, Meta’s platforms facilitate a significant amount of this activity, which has also been highlighted as one of the key arguments against Meta’s gradual shift towards enabling full messaging encryption by default across all of its messaging apps.

With encryption enabled, no one will be able to break into these groups and stop the distribution of such content, but the counter to that is the need for regular people to have more privacy, and to limit third-party snooping on their private chats.

Is that worth the potential risk of expanded CSAM distribution? That’s the trade-off that regulators have been trying to assess, while Meta continues to push ahead with the project, which will soon see all messages in Messenger, IG Direct, and WhatsApp hidden from any outside view.

It’s a difficult balance, which underlines the fine line that social platforms are always walking between moderation and privacy. This is one of the key bugbears of Elon Musk, who’s been pushing to allow more speech in his social app, but that too comes with its own downsides, in his case, in the form of advertisers opting not to display their promotions in his app.

There are no easy answers, and there are always going to be difficult considerations, especially when a company’s ultimate motivation is aligned with profit.

Indeed, according to WSJ, Meta, under rising revenue pressure earlier this year, instructed its integrity teams to give priority to objectives that would reduce “advertiser friction”, while also avoiding mistakes that might “inadvertently limit well-intended usage of our products.”

Another part of the problem here is that Meta’s recommendation systems inadvertently connect more like-minded users by helping them to find related groups and people, and Meta, which is pushing to maximize usage, has no incentive to limit its recommendations in this respect.

Meta, as noted, is always working to restrict the spread of CSAM-related material. But with CSAM groups updating the way that they communicate, and the terms that they use, it’s often not possible for Meta’s systems to detect and avoid related recommendations based on similar user activity.

The latest reports also come as Meta faces new scrutiny in Europe, with EU regulators requesting more details on its response to child safety concerns on Instagram, and what, exactly, Meta’s doing to combat CSAM in the app.

That could see Meta facing hefty fines, or further sanctions in the EU, as part of the new DSA regulations in the region.

It remains a critical focus, and a challenging area for all social apps, with Meta now under more pressure to evolve its systems, and ensure greater safety in its apps.

The EU Commission has given Meta a deadline of December 22nd to outline its evolving efforts on this front.