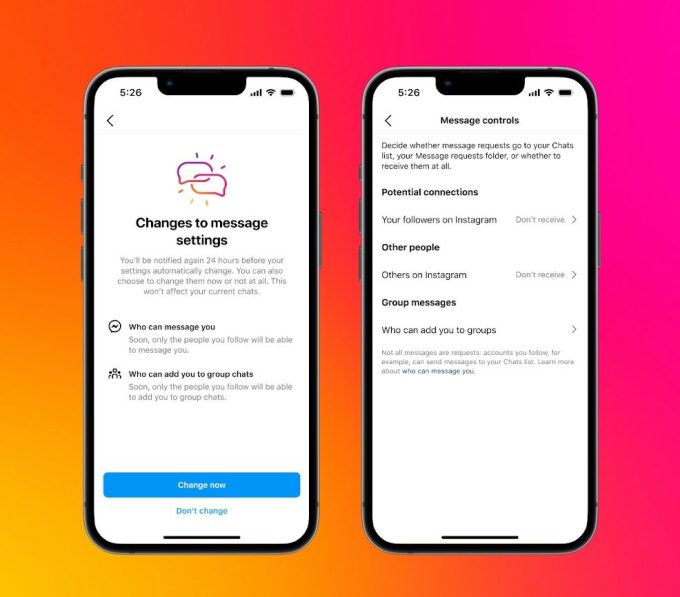

Meta announced today that it's rolling out new DM restrictions for teens on both Facebook and Instagram that limit who can message them.

Until now, Instagram has restricted adults over 18 from messaging teens who don't follow them. The new limits will apply by default to all users under 16, and to users under 18 in some regions. Meta said it will notify existing users of the change.

Image Credits: Meta

On Messenger, teens will only receive messages from their Facebook friends or from people in their contacts.

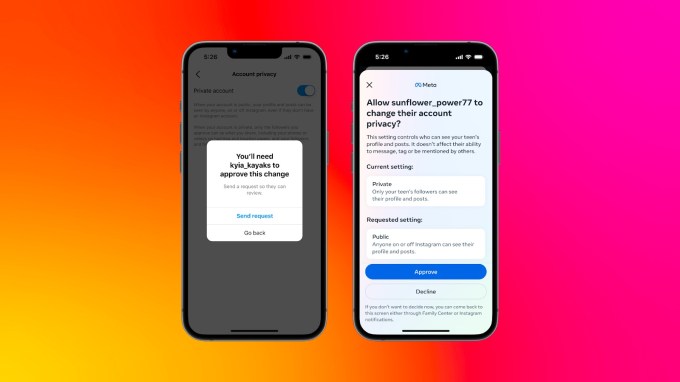

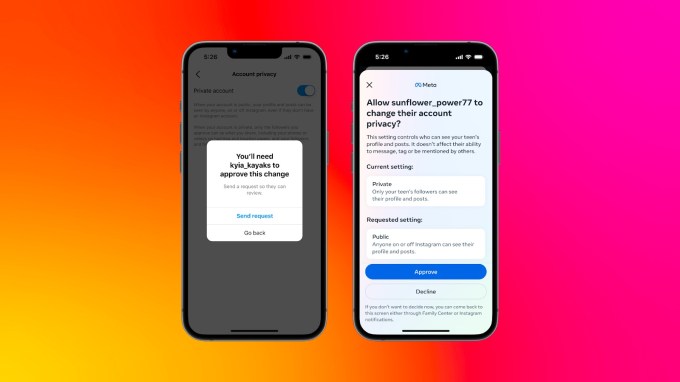

Meta is also strengthening its parental controls by letting guardians approve or deny changes that teens make to their default privacy settings. Previously, guardians received a notification when teens changed these settings but couldn't act on it.

For example, the company said, guardians can block a teen's attempt to switch their account from private to public, to change the Sensitive Content Control from "Less" to "Standard," or to change the settings that control who can DM them.

Image Credits: Meta

Meta first rolled out parental supervision tools for Instagram in 2022, giving guardians a sense of how their teens use the app.

The social media giant said it also plans to launch a feature that will prevent teens from seeing unwanted and inappropriate images sent to their DMs by people they are connected to. The company added that the feature will also work in end-to-end encrypted chats and will "discourage" teens from sending these kinds of images.

Meta didn't specify how it will protect teens' privacy while running these features. It also didn't say what it considers "inappropriate."

Earlier this month, Meta rolled out new tools to restrict teens from seeing content about self-harm or eating disorders on Facebook and Instagram.

Last month, Meta received a formal request for information from EU regulators, who asked the company for more details about its efforts to prevent the sharing of self-generated child sexual abuse material (SG-CSAM).

At the same time, the company is facing a civil lawsuit in New Mexico state court alleging that Meta's social network promotes sexual content to teen users and promotes underage accounts to predators. In October, more than 40 US states filed a lawsuit in federal court in California accusing the company of designing products in ways that harmed kids' mental health.

The company is set to testify before the Senate on child safety issues on January 31 this year, along with other social networks including TikTok, Snap, Discord, and X (formerly Twitter).