Meta has announced a new initiative designed to establish agreed parameters around cybersecurity considerations in the development of large language models (LLMs) and generative AI tools, which it hopes will be adopted by the broader industry as a key step towards facilitating greater AI security.

Called “Purple Llama”, based on its own Llama LLM, the project aims to “bring together tools and evaluations to help the community build responsibly with open generative AI models”.

According to Meta, the Purple Llama project aims to establish the first industry-wide set of cybersecurity safety evaluations for LLMs.

As per Meta:

“These benchmarks are based on industry guidance and standards (e.g., CWE and MITRE ATT&CK) and built in collaboration with our security subject matter experts. With this initial release, we aim to provide tools that will help address a range of risks outlined in the White House commitments on developing responsible AI”

The White House’s recent AI safety directive urges developers to establish standards and tests to ensure that AI systems are secure, to protect users from AI-based manipulation, and to address other considerations that will ideally stop AI systems from taking over the world.

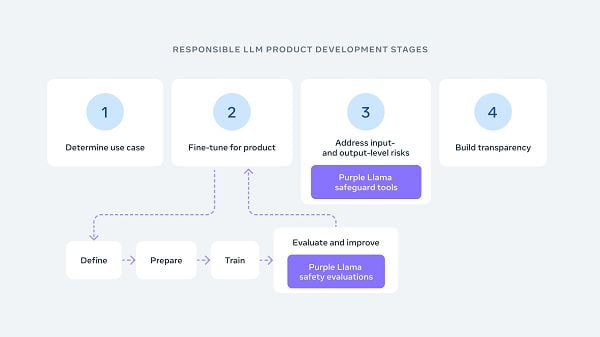

These are the driving parameters for Meta’s Purple Llama project, which will initially include two key elements:

- CyberSec Eval – Industry-agreed cybersecurity safety evaluation benchmarks for LLMs

- Llama Guard – A framework for protecting against potentially risky AI outputs.

“We believe these tools will reduce the frequency of LLMs suggesting insecure AI-generated code and reduce their helpfulness to cyber adversaries. Our initial results show that there are meaningful cybersecurity risks for LLMs, both with recommending insecure code and for complying with malicious requests.”

Purple Llama will partner with members of the newly formed AI Alliance, which Meta is helping to lead, and also includes Microsoft, AWS, Nvidia, and Google Cloud as founding partners.

So what’s “purple” got to do with it? I could explain, but it’s pretty nerdy, and as soon as you read it you’ll regret having that knowledge take up space inside your head.

AI safety is fast becoming a critical consideration, as generative AI models evolve at rapid speed, and experts warn of the dangers in building systems that could potentially “think” for themselves.

That’s long been a fear of sci-fi tragics and AI doomers: that one day, we’ll create machines that can outthink our merely human brains, effectively making humans obsolete, and establishing a new dominant species on the planet.

We’re a long way from this being a reality, but as AI tools advance, these fears also grow, and if we don’t fully understand the extent of possible outputs from such processes, there could indeed be significant problems stemming from AI development.

The counter to that is that even if U.S. developers slow their progress, that doesn’t mean that researchers in other markets will follow the same rules. And if Western governments impede progress, that could also become an existential threat, as potential military rivals build more advanced AI systems.

The answer, then, seems to be greater industry collaboration on safety measures and rules, which will then ensure that all the relevant risks are being assessed and factored in.

Meta’s Purple Llama project is another step on this path.

You can read more about the Purple Llama initiative here.