Meta has released the latest entry in its Llama series of open generative AI models: Llama 3. Or, more accurately, the company has debuted two models in its new Llama 3 family, with the rest to come at an unspecified future date.

Meta describes the new models (Llama 3 8B, which contains 8 billion parameters, and Llama 3 70B, which contains 70 billion parameters) as a “major leap” performance-wise compared to the previous-gen Llama models, Llama 2 7B and Llama 2 70B. (Parameters essentially define the skill of an AI model on a problem, like analyzing and generating text; higher-parameter-count models are, generally speaking, more capable than lower-parameter-count models.) In fact, Meta says that, for their respective parameter counts, Llama 3 8B and Llama 3 70B, both trained on two custom-built 24,000-GPU clusters, are among the best-performing generative AI models available today.

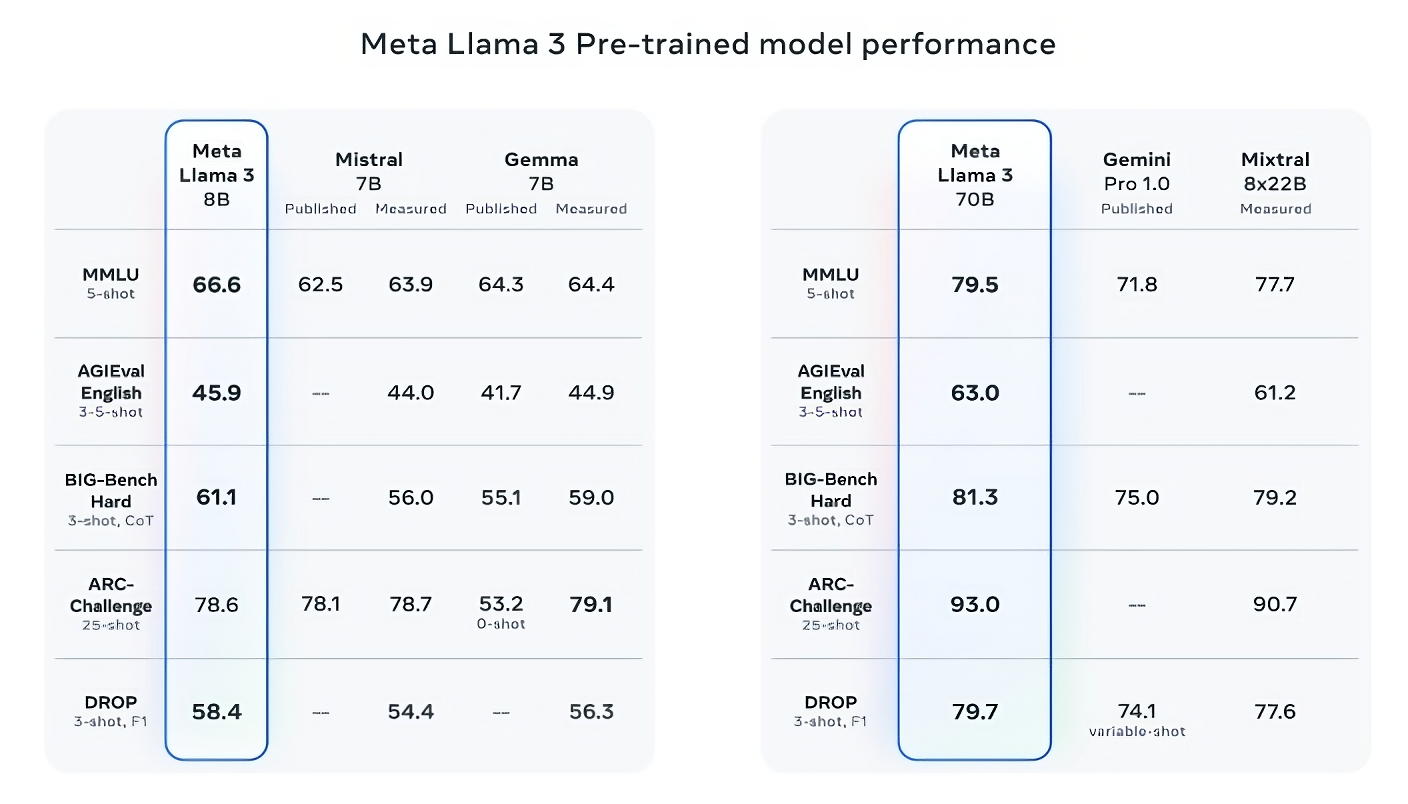

That’s quite a claim to make. So how is Meta supporting it? Well, the company points to the Llama 3 models’ scores on popular AI benchmarks like MMLU (which attempts to measure knowledge), ARC (which attempts to measure skill acquisition) and DROP (which tests a model’s reasoning over chunks of text). As we’ve written about before, the usefulness, and the validity, of these benchmarks is up for debate. But for better or worse, they remain one of the few standardized ways by which AI players like Meta evaluate their models.

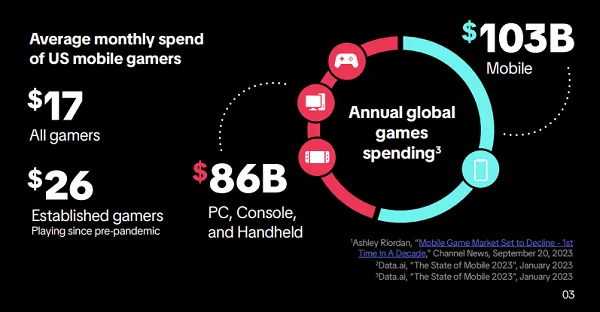

Llama 3 8B bests other open models such as Mistral’s Mistral 7B and Google’s Gemma 7B, both of which contain 7 billion parameters, on at least nine benchmarks: MMLU, ARC, DROP, GPQA (a set of biology-, physics- and chemistry-related questions), HumanEval (a code generation test), GSM-8K (math word problems), MATH (another mathematics benchmark), AGIEval (a problem-solving test set) and BIG-Bench Hard (a commonsense reasoning evaluation).

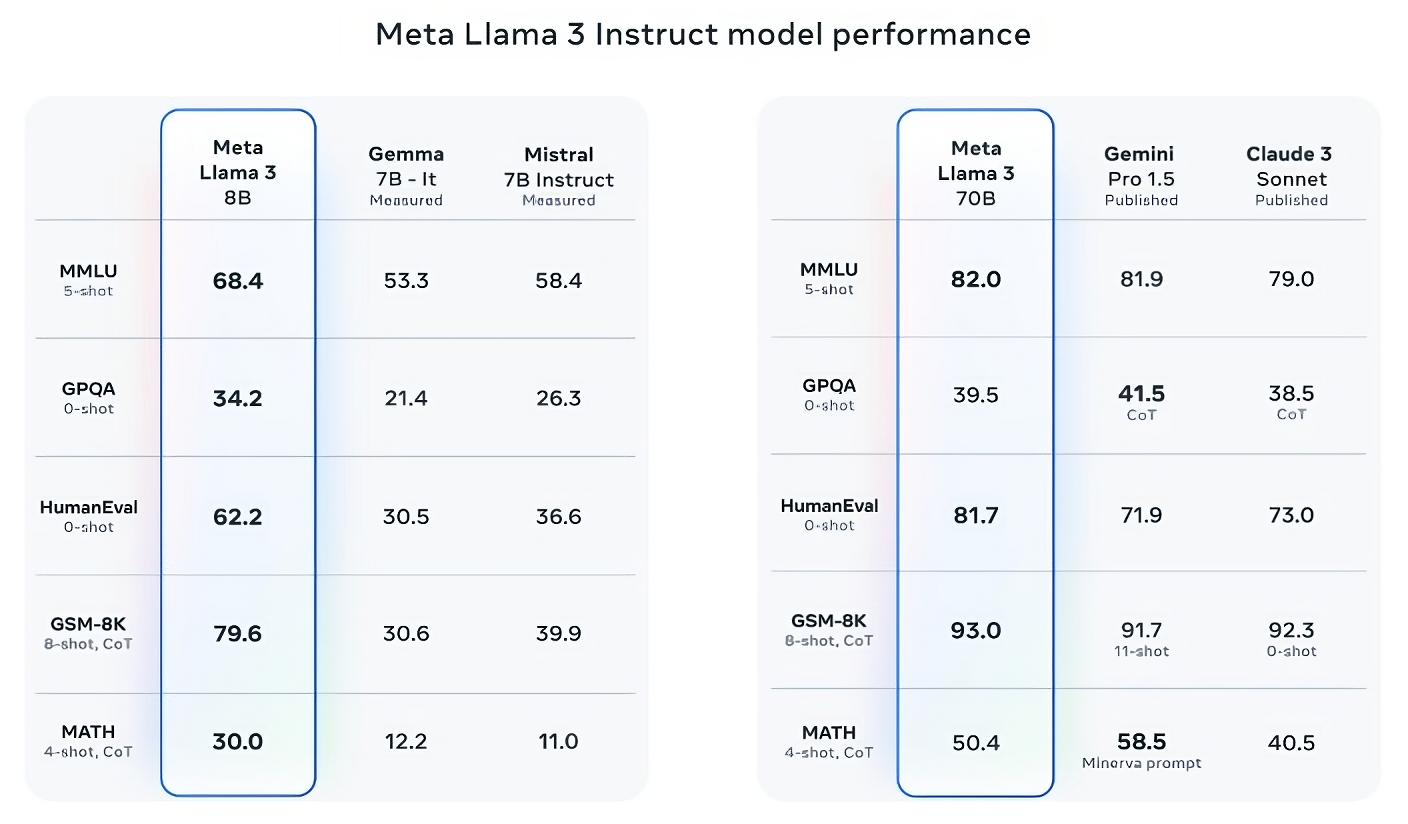

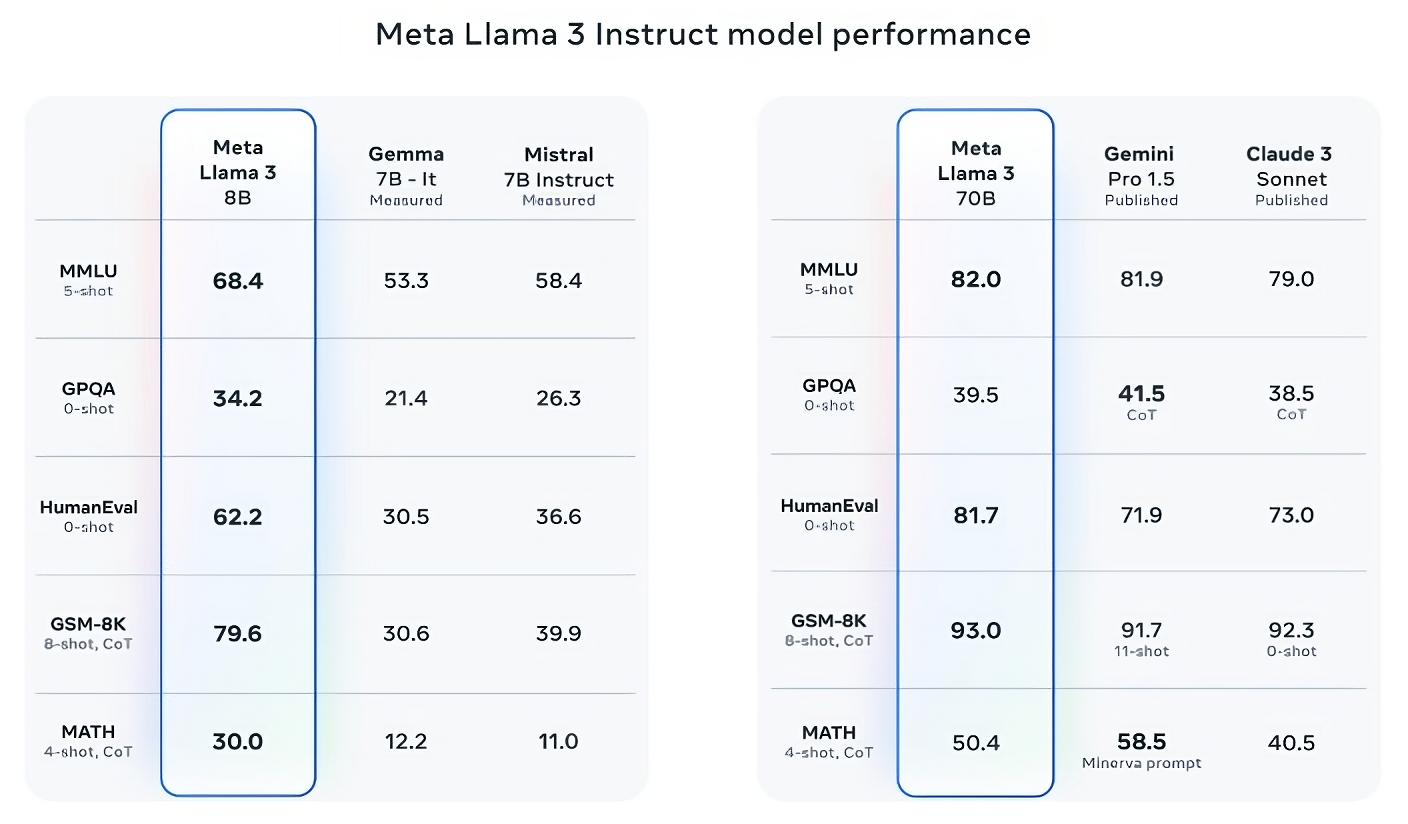

Now, Mistral 7B and Gemma 7B aren’t exactly on the bleeding edge (Mistral 7B was released last September), and in a few of the benchmarks Meta cites, Llama 3 8B scores only a few percentage points higher than either. But Meta also makes the claim that the larger-parameter-count Llama 3 model, Llama 3 70B, is competitive with flagship generative AI models, including Gemini 1.5 Pro, the latest in Google’s Gemini series.

Image Credits: Meta

Llama 3 70B beats Gemini 1.5 Pro on MMLU, HumanEval and GSM-8K. And while it doesn’t rival Anthropic’s most performant model, Claude 3 Opus, Llama 3 70B scores better than the second-weakest model in the Claude 3 series, Claude 3 Sonnet, on five benchmarks (MMLU, GPQA, HumanEval, GSM-8K and MATH).

Image Credits: Meta

For what it’s worth, Meta also developed its own test set covering use cases ranging from coding and creative writing to reasoning and summarization, and (surprise!) Llama 3 70B came out on top against Mistral’s Mistral Medium model, OpenAI’s GPT-3.5 and Claude Sonnet. Meta says that it gated its modeling teams from accessing the set to maintain objectivity, but given that Meta itself devised the test, the results obviously have to be taken with a grain of salt.

Image Credits: Meta

More qualitatively, Meta says that users of the new Llama models should expect more “steerability,” a lower likelihood of refusing to answer questions, and higher accuracy on trivia questions, on questions pertaining to history and STEM fields such as engineering and science, and on general coding recommendations. That’s in part thanks to a much larger dataset: a collection of 15 trillion tokens, or a mind-boggling ~750,000,000,000 words, seven times the size of the Llama 2 training set. (In the AI field, “tokens” refers to subdivided bits of raw data, like the syllables “fan,” “tas” and “tic” in the word “fantastic.”)
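To make the token idea concrete, here is a minimal Python sketch. It is a toy, not Meta’s tokenizer: the three-entry vocabulary and the greedy longest-match rule are assumptions made purely for illustration, and real tokenizers learn far larger vocabularies from data. The last lines work out the Llama 2 training set size implied by the “seven times” figure above; that result is derived arithmetic, not a number Meta reported.

```python
# Toy illustration only: the vocabulary and matching rule are hypothetical,
# chosen to mirror the "fan" / "tas" / "tic" example in the text.
TOY_VOCAB = {"fan", "tas", "tic"}


def toy_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match split of `word` into pieces found in `vocab`.

    Characters that match no vocabulary entry become single-character tokens.
    """
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until a vocabulary hit.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No vocabulary entry starts here: emit one character.
            tokens.append(word[i])
            i += 1
    return tokens


LLAMA3_TOKENS = 15e12  # 15 trillion tokens, per Meta
SIZE_RATIO = 7         # "seven times the size of the Llama 2 training set"

# Implied by the two figures above (an inference, not a reported number).
implied_llama2_tokens = LLAMA3_TOKENS / SIZE_RATIO

print(toy_tokenize("fantastic", TOY_VOCAB))            # ['fan', 'tas', 'tic']
print(f"{implied_llama2_tokens:.2e} tokens (implied)")  # 2.14e+12 tokens (implied)
```

Taken at face value, the ratio puts the Llama 2 training set at a little over 2 trillion tokens.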

Where did this data come from? Good question. Meta wouldn’t say, revealing only that it drew from “publicly available sources,” that it included four times more code than the Llama 2 training dataset and that 5% of the set is non-English data (in ~30 languages) to improve performance on languages other than English. Meta also said it used synthetic data (i.e., AI-generated data) to create longer documents for the Llama 3 models to train on, a somewhat controversial approach due to the potential performance drawbacks.

“While the models we’re releasing today are only fine tuned for English outputs, the increased data diversity helps the models better recognize nuances and patterns, and perform strongly across a variety of tasks,” Meta writes in a blog post shared with TechCrunch.

Many generative AI vendors see training data as a competitive advantage and thus keep it, and information pertaining to it, close to the chest. But training data details are also a potential source of IP-related lawsuits, another disincentive to reveal much. Recent reporting revealed that Meta, in its quest to keep pace with AI rivals, at one point used copyrighted e-books for AI training despite the company’s own lawyers’ warnings; Meta and OpenAI are the subject of an ongoing lawsuit brought by authors including comedian Sarah Silverman over the vendors’ alleged unauthorized use of copyrighted data for training.

So what about toxicity and bias, two other common problems with generative AI models (including Llama 2)? Does Llama 3 improve in those areas? Yes, claims Meta.

Meta says that it developed new data-filtering pipelines to boost the quality of its model training data, and that it has updated its pair of generative AI safety suites, Llama Guard and CyberSecEval, to attempt to prevent the misuse of, and unwanted text generations from, Llama 3 models and others. The company’s also releasing a new tool, Code Shield, designed to detect code from generative AI models that might introduce security vulnerabilities.

Filtering isn’t foolproof, though, and tools like Llama Guard, CyberSecEval and Code Shield only go so far. (See: Llama 2’s tendency to make up answers to questions and leak private health and financial information.) We’ll have to wait and see how the Llama 3 models perform in the wild, including in testing by academics on alternative benchmarks.

Meta says that the Llama 3 models, which are available for download now and are powering Meta’s Meta AI assistant on Facebook, Instagram, WhatsApp, Messenger and the web, will soon be hosted in managed form across a wide range of cloud platforms including AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM’s WatsonX, Microsoft Azure, Nvidia’s NIM and Snowflake. In the future, versions of the models optimized for hardware from AMD, AWS, Dell, Intel, Nvidia and Qualcomm will also be made available.

The Llama 3 models might be widely available. But you’ll notice that we’re using “open” to describe them as opposed to “open source.” That’s because, despite Meta’s claims, its Llama family of models isn’t as no-strings-attached as it’d have people believe. Yes, the models are available for both research and commercial applications. However, Meta forbids developers from using Llama models to train other generative models, while app developers with more than 700 million monthly users must request a special license from Meta that the company will, or won’t, grant at its discretion.

More capable Llama models are on the horizon.

Meta says that it’s currently training Llama 3 models over 400 billion parameters in size: models with the ability to “converse in multiple languages,” take more data in and understand images and other modalities as well as text, which would bring the Llama 3 series in line with open releases like Hugging Face’s Idefics2.

Image Credits: Meta

“Our goal in the near future is to make Llama 3 multilingual and multimodal, have longer context and continue to improve overall performance across core [large language model] capabilities such as reasoning and coding,” Meta writes in a blog post. “There’s a lot more to come.”

Certainly.