Meta has published its latest overview of content violations, hacking attempts, and feed engagement, which includes the regular array of stats and notes on what people are seeing on Facebook, what people are reporting, and what’s getting the most attention at any time.

For example, the Widely Viewed content report for Q4 2024 includes the usual gems, like this:

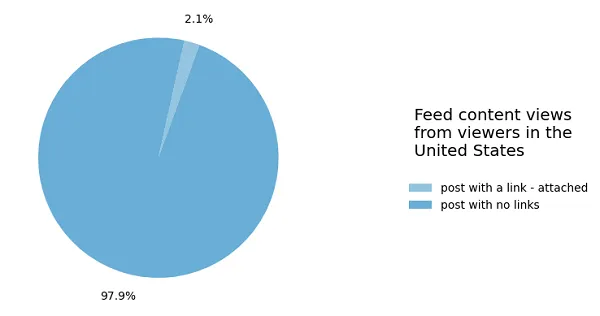

That’s less than awesome news for publishers: 97.9% of the views of Facebook posts in the U.S. during Q4 2024 were of posts that did not include a link to a source outside of Facebook itself.

That percentage has steadily crept up over the last four years, with Meta’s first Widely Viewed Content report, published for Q3 2021, showing that 86.5% of the posts shown in feeds did not include a link outside the app.

It’s now radically high, meaning that getting an organic referral from Facebook is harder than ever. Meta has also de-prioritized links as part of its effort to move away from news content, though it may or may not change that again now that it’s looking to allow more political discussion to return to its apps. But the data, at least right now, shows that it’s still a pretty link-averse environment.

If you were wondering why your Facebook traffic has dried up, this would be a big part of the reason.

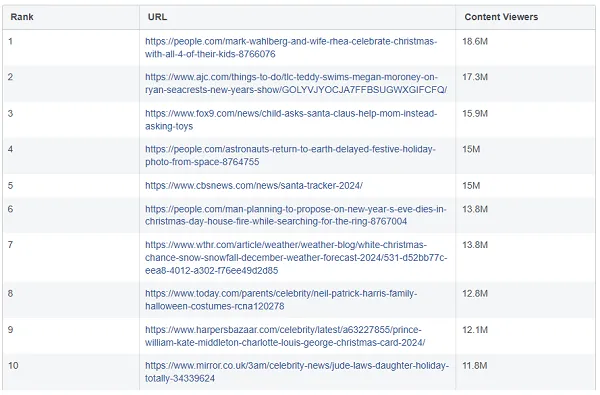

The top ten most viewed links in Q4 also show the regular array of random junk that’s somehow resonated with the Facebook crowd.

Astronauts celebrated Christmas, Mark Wahlberg posted a picture of his family for Christmas, and Neil Patrick Harris sang a Christmas song. You get the idea: the usual range of supermarket tabloid headlines now dominates Facebook discussion, along with syrupy tales of seasonal sentiment.

Like: “Child Asks Santa Claus to Help Mom Instead of Asking For Toys”.

Sweet, sure, but also, ugh.

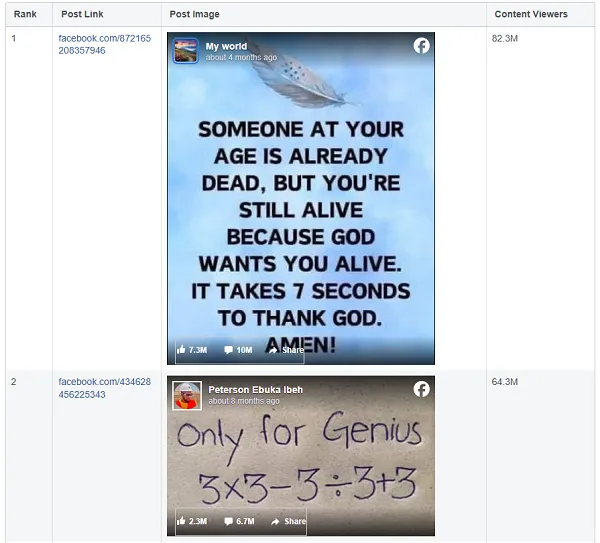

The top most shared posts overall aren’t much better.

If you want to resonate on Facebook, you probably could take notes from celebrity magazines, as it’s that type of material which seemingly gains traction, while displays of virtue or “intelligence” still catch on in the app.

Make of that what you will.

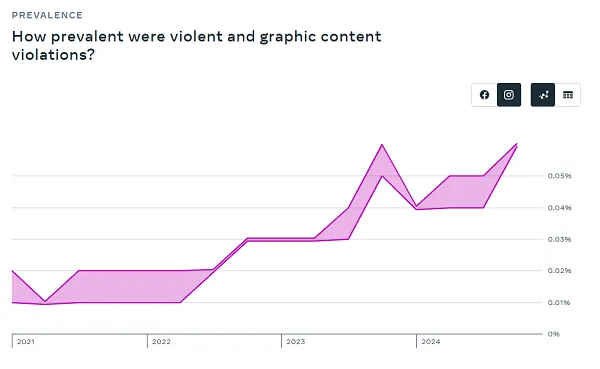

In terms of rule violations, there weren’t any particularly notable spikes in the period, though Meta did report an increase in the prevalence of Violent & Graphic Content on Instagram due to adjustments to its “proactive detection technology.”

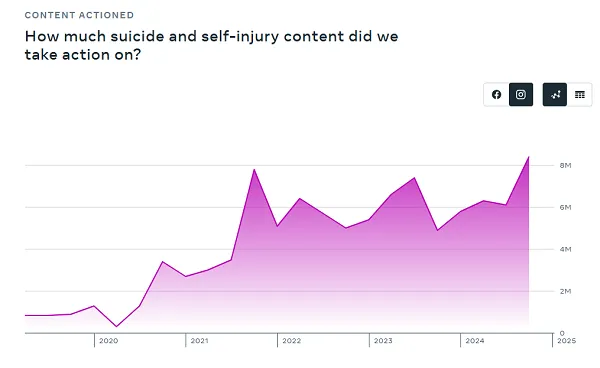

This also seems like a concern:

Also worth noting, Meta says that fake accounts “represented approximately 3% of our worldwide monthly active users (MAU) on Facebook during Q4 2024.”

That’s only notable because Meta usually pegs this number at 5%, which has seemingly become the industry standard, as there’s no real way to accurately determine this figure. But now Meta’s revised it down, which could mean that it’s more confident in its detection processes. Or it’s just changed the base figure.

Meta also shared this interesting note:

“This report is for Q4 2024, and does not include any data related to policy or enforcement changes made in January 2025. However, we have been monitoring those changes and so far we have not seen any meaningful impact on prevalence of violating content despite no longer proactively removing certain content. In addition, we have seen enforcement mistakes have measurably decreased with this new approach.”

That change is Meta’s controversial switch to a Community Notes model and its removal of third-party fact-checking. Meta has also revised some of its policies, particularly relating to hate speech, moving them more into line, seemingly, with what the Trump Administration would prefer.

Meta says that it’s seen no major shifts in violative content as a result, at least not yet, but it is banning fewer accounts by mistake.

Which sounds good, right? Sounds like the change is better already.

Right?

Well, it probably doesn’t mean much.

The fact that Meta is seeing fewer enforcement errors makes perfect sense, as it’s going to be enacting a lot less enforcement overall, so of course, mistaken enforcement will inevitably decrease. But that’s not really the question. The real issue is whether rightful enforcement actions remain steady as it shifts to a less supervisory model, with more leeway on certain speech.

As such, the statement here seems more or less pointless at this stage, and more of a blind retort to those who’ve criticized the change.

In terms of threat activity, Meta detected several small-scale operations in Q4, originating from Benin, Ghana, and China.

Though potentially more notable was this explainer in Meta’s overview of a Russia-based influence operation called “Doppelganger”, which it’s been tracking for several years:

“Starting in mid-November, the operators paused targeting of the U.S., Ukraine and Poland on our apps. It’s still focused on Germany, France, and Israel with some isolated attempts to target people in other countries. Based on open source reporting, it appears that Doppelganger has not made this same shift on other platforms.”

It seems that after the U.S. election, Russian influence operations stopped being as interested in influencing sentiment in the U.S. and Ukraine. Seems like an interesting shift.

You can read all of Meta’s latest enforcement and engagement data points in its Transparency Center, if you’re looking to get a better understanding of what’s resonating on Facebook, and the shifts in its safety efforts.