While tech companies are pushing their latest AI tools onto users at every turn, and promoting the benefits of AI use, consumers remain wary of the impacts of such tools, and how beneficial they’ll actually be in the long term.

That’s according to the latest data from Pew Research, which conducted a series of surveys to glean more insight into how people around the world view AI, and the regulation of AI tools to ensure safety.

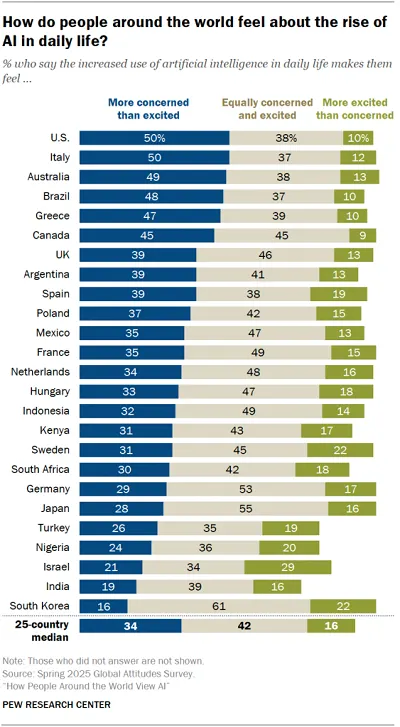

And as you can see in the chart above, concerns about AI are particularly high in some regions.

As per Pew:

“Concerns about AI are especially common in the United States, Italy, Australia, Brazil and Greece, where about half of adults say they are more concerned than excited. But as few as 16% in South Korea are mainly concerned about the prospect of AI in their lives.”

In some ways, the data could be indicative of AI adoption in each region, with the regions that have deployed AI tools at a broader scale seeing higher levels of concern.

Which makes sense. More and more reports suggest that AI’s going to take our jobs, while studies have also raised significant concern about the impacts of AI tools on social interaction. And related: The rise of AI bots for romantic purposes could also be problematic, with even teen users engaging in romantic-like relationships with digital entities.

Essentially, we don’t know what the impacts of increased reliance on AI will be, and over time, more alarms are being raised, which extend well beyond changes to the professional environment.

The answer to this, then, is effective regulation, and ensuring that AI tools can’t be misused in harmful ways. Which is also difficult, because we don’t have enough data to know what those impacts will be, and people in some regions seem increasingly skeptical that their elected representatives will be able to assess them.

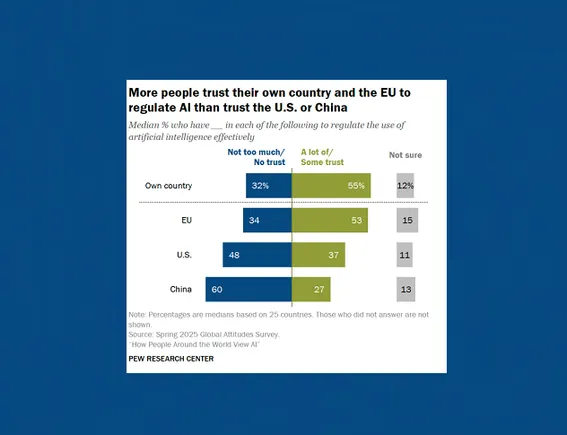

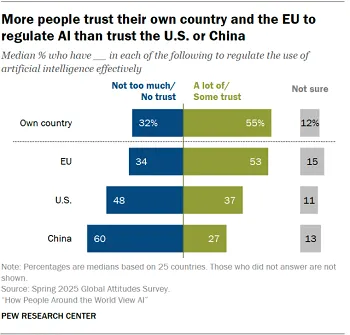

As you can see in this chart, while people in most regions trust their policy makers to manage potential AI concerns, those in the U.S. and China, the two nations leading the AI race, show lower levels of trust in their policy makers’ capacity to do so.

That’s likely due to the push for innovation over safety, with both nations concerned that the other will take the lead in this emerging tech if they implement too many restrictions.

Yet, at the same time, allowing so many AI tools to be publicly released is going to exacerbate such concerns, which also extend to copyright abuses, IP theft, misrepresentation, etc.

There’s a whole range of problems that arise with every advanced AI model, and given the relative lack of action on social media until its negative impacts were already well embedded, it’s not surprising that a lot of people are concerned that regulators are not doing enough to keep people safe.

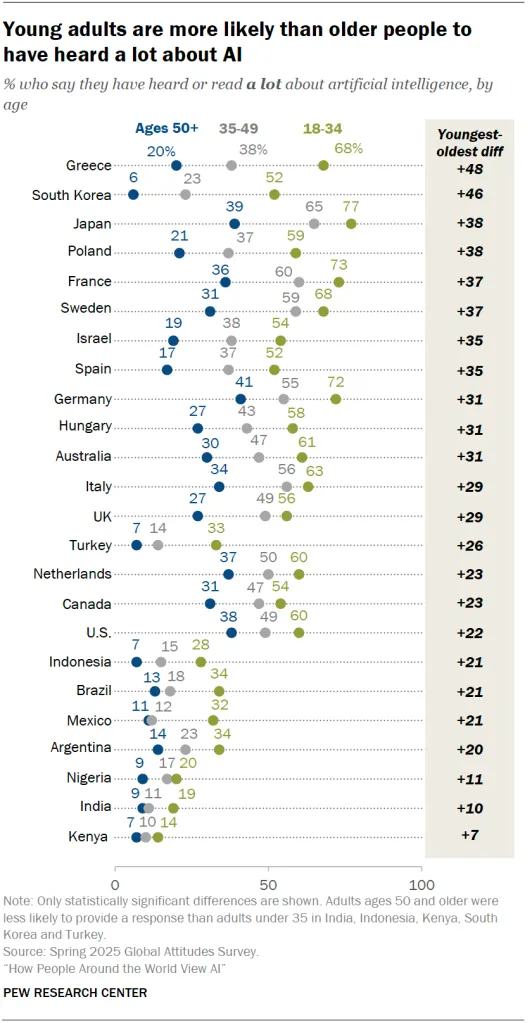

But the AI shift is coming, which is especially evident in this demographic awareness chart.

Young people are far more aware of AI, and the capacity of these tools, and many of them have already adopted AI into their daily processes, in a growing range of ways.

That means that AI tools are only going to become more prevalent, and it does feel like the rate of acceleration without adequate guardrails is going to become a problem, whether we like it or not.

But with tech companies investing billions in AI tech, and governments looking to remove red tape to maximize innovation, there’s seemingly not a lot we can do to avoid those impacts.