Deliberately poisoning someone else is rarely morally right. But if someone in the office keeps swiping your lunch, wouldn’t you resort to petty vengeance?

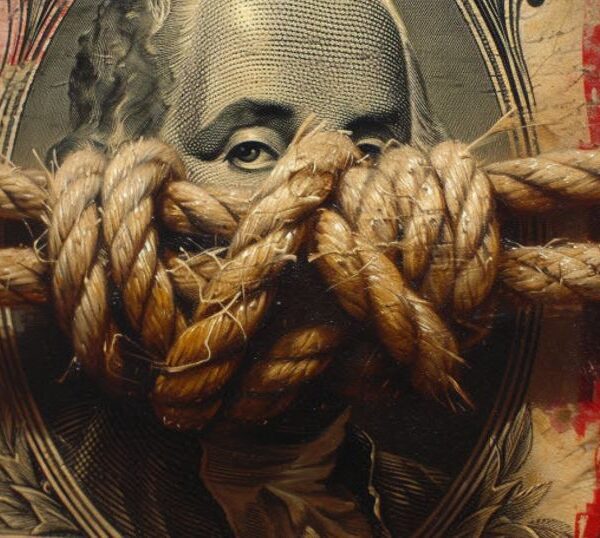

For artists, protecting their work from being used to train AI models without consent is an uphill battle. Opt-out requests and do-not-scrape codes rely on AI companies to engage in good faith, but those motivated by profit over privacy can easily disregard such measures. Sequestering themselves offline isn’t an option for most artists, who rely on social media exposure for commissions and other work opportunities.

Nightshade, a project from the University of Chicago, gives artists some recourse by “poisoning” image data, rendering it useless or disruptive to AI model training. Ben Zhao, a computer science professor who led the project, compared Nightshade to “putting hot sauce in your lunch so it doesn’t get stolen from the workplace fridge.”

“We’re showing the fact that generative models in general, no pun intended, are just models. Nightshade itself is not meant as an end-all, extremely powerful weapon to kill these companies,” Zhao said. “Nightshade shows that these models are vulnerable and there are ways to attack. What it means is that there are ways for content owners to provide harder returns than writing Congress or complaining via email or social media.”

Zhao and his team aren’t trying to take down Big AI; they’re just trying to force tech giants to pay for licensed work, instead of training AI models on scraped images.

“There is a right way of doing this,” he continued. “The real issue here is about consent, is about compensation. We are just giving content creators a way to push back against unauthorized training.”

Left: The Mona Lisa, unaltered.

Center: The Mona Lisa, after Nightshade.

Right: How AI “sees” the shaded version of the Mona Lisa.

Nightshade targets the associations between text prompts and images, subtly changing the pixels in images to trick AI models into interpreting a completely different image than what a human viewer would see. Models will incorrectly categorize features of “shaded” images, and if they’re trained on a sufficient amount of “poisoned” data, they’ll start to generate images completely unrelated to the corresponding prompts. It can take fewer than 100 “poisoned” samples to corrupt a Stable Diffusion prompt, the researchers write in a technical paper currently under peer review.

Take, for example, a painting of a cow lounging in a meadow.

“By manipulating and effectively distorting that association, you can make the models think that cows have four round wheels and a bumper and a trunk,” Zhao told TechCrunch. “And when they are prompted to produce a cow, they will produce a large Ford truck instead of a cow.”
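To make the cow-to-truck mechanism concrete, here is a deliberately simplified Python toy. It is not Nightshade’s algorithm: it assumes a hypothetical two-dimensional “feature space” and a “model” that learns each prompt as the average of the features it perceives in images captioned with that prompt. Poisoned samples keep the caption “cow” but, like shaded images, are perceived as truck-like. Because a plain average only flips once poisoned samples outnumber clean ones, this toy overstates how much poison is needed; the researchers report that real models can be corrupted with fewer than 100 samples, which the toy does not capture.

```python
from statistics import fmean

# Hypothetical 2-D "feature space" (an assumption for illustration only):
# real Nightshade perturbs image pixels to mislead a diffusion model's features.
COW = (0.0, 0.0)
TRUCK = (10.0, 0.0)


def learn_concept(samples):
    """Toy 'training': the model's idea of a prompt is the mean feature
    vector of every image captioned with that prompt."""
    return (fmean(s[0] for s in samples), fmean(s[1] for s in samples))


def nearest(point, references):
    """Return the name of the reference concept closest to `point`."""
    def dist2(a, b):
        return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
    return min(references, key=lambda name: dist2(point, references[name]))


def generate(n_clean, n_poisoned):
    """What does the toy model produce when prompted with "cow"?"""
    # Clean samples: captioned "cow", and the model perceives cow features.
    clean = [COW] * n_clean
    # Poisoned samples: still captioned "cow" (a human sees a cow), but the
    # hypothetical perturbation makes the model perceive truck features.
    poisoned = [TRUCK] * n_poisoned
    learned_cow = learn_concept(clean + poisoned)
    return nearest(learned_cow, {"cow": COW, "truck": TRUCK})


print(generate(n_clean=300, n_poisoned=50))   # prints "cow"
print(generate(n_clean=300, n_poisoned=400))  # prints "truck"
```

With 50 poisoned samples the learned “cow” concept still sits nearest the cow reference; with 400 it drifts past the midpoint and the prompt “cow” yields a truck.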

The Nightshade team provided other examples, too. An unaltered image of the Mona Lisa and a shaded version are virtually identical to humans, but instead of interpreting the “poisoned” sample as a portrait of a woman, AI will “see” it as a cat wearing a gown.

Prompting an AI to generate an image of a dog, after the model was trained using shaded images that made it see cats, yields horrifying hybrids that bear no resemblance to either animal.

It takes fewer than 100 poisoned pictures to begin corrupting prompts.

The effects bleed through to related concepts, the technical paper noted. Shaded samples that corrupted the prompt “fantasy art” also affected prompts for “dragon” and “Michael Whelan,” an illustrator who specializes in fantasy and sci-fi cover art.

Zhao also led the team that created Glaze, a cloaking tool that distorts how AI models “see” and determine artistic style, preventing them from imitating artists’ unique work. As with Nightshade, a person might view a “glazed” realistic charcoal portrait, but an AI model will see it as an abstract painting, and then generate messy abstract paintings when it’s prompted to generate fine charcoal portraits.

Speaking to TechCrunch after the tool launched last year, Zhao described Glaze as a technical attack being used as a defense. While Nightshade isn’t an “outright attack,” Zhao told TechCrunch more recently, it’s still taking the offensive against predatory AI companies that disregard opt-outs. OpenAI, one of the companies facing a class action lawsuit for allegedly violating copyright law, now allows artists to opt out of being used to train future models.

“The problem with this [opt-out requests] is that it is the softest, squishiest type of request possible. There’s no enforcement, there’s no holding any company to their word,” Zhao said. “There are plenty of companies who are flying below the radar, that are much smaller than OpenAI, and they have no boundaries. They have absolutely no reason to abide by those opt out lists, and they can still take your content and do whatever they wish.”

Kelly McKernan, an artist who’s part of the class action lawsuit against Stability AI, Midjourney and DeviantArt, posted an example of their shaded and glazed painting on X. The painting depicts a woman tangled in neon veins, as pixelated lookalikes feed off of her. It represents generative AI “cannibalizing the authentic voice of human creatives,” McKernan wrote.

McKernan began scrolling past images with striking similarities to their own work in 2022, as AI image generators launched to the public. When they found that over 50 of their pieces had been scraped and used to train AI models, they lost all interest in creating more art, they told TechCrunch. They even found their signature in AI-generated content. Using Nightshade, they said, is a protective measure until adequate regulation exists.

“It’s like there’s a bad storm outside, and I still have to go to work, so I’m going to protect myself and use a clear umbrella to see where I’m going,” McKernan said. “It’s not convenient and I’m not going to stop the storm, but it’s going to help me get through to whatever the other side looks like. And it sends a message to these companies that just take and take and take, with no repercussions whatsoever, that we will fight back.”

Most of the changes that Nightshade makes should be invisible to the human eye, but the team does note that the “shading” is more visible on images with flat colors and smooth backgrounds. The tool, which is free to download, is also available in a low-intensity setting to preserve visual quality. McKernan said that although they could tell their image was altered after using Glaze and Nightshade, because they’re the artist who painted it, the change is “almost imperceptible.”

Illustrator Christopher Bretz demonstrated Nightshade’s effect on one of his pieces, posting the results on X. Running an image through Nightshade’s lowest and default settings had little impact on the illustration, but changes were obvious at higher settings.

“I have been experimenting with Nightshade all week, and I plan to run any new work and much of my older online portfolio through it,” Bretz told TechCrunch. “I know a number of digital artists that have refrained from putting new art up for some time and I hope this tool will give them the confidence to start sharing again.”

Ideally, artists should use both Glaze and Nightshade before sharing their work online, the team wrote in a blog post. The team is still testing how Glaze and Nightshade interact on the same image, and plans to release an integrated, single tool that does both. In the meantime, they recommend using Nightshade first, and then Glaze, to minimize visible effects. The team advises against posting artwork that has only been shaded, not glazed, as Nightshade doesn’t protect artists from mimicry.

Signatures and watermarks, even those added to an image’s metadata, are “brittle” and can be removed if the image is altered. The changes that Nightshade makes will survive cropping, compressing, screenshotting or editing, because they modify the pixels that make up an image. Even a photo of a screen displaying a shaded image will be disruptive to model training, Zhao said.

As generative models become more sophisticated, artists face mounting pressure to protect their work and fight scraping. Steg.AI and Imatag help creators establish ownership of their images by applying watermarks that are imperceptible to the human eye, though neither promises to protect users from unscrupulous scraping. The “No AI” Watermark Generator, released last year, applies watermarks that label human-made work as AI-generated, in hopes that datasets used to train future models will filter out AI-generated images. There’s also Kudurru, a tool from Spawning.ai, which identifies and tracks scrapers’ IP addresses. Website owners can block the flagged IP addresses, or choose to send a different image back, like a middle finger.
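How Kudurru itself works isn’t described here. As a rough illustration of the block-or-decoy choice a site owner makes once scraper IPs are flagged, the following Python sketch assumes a hypothetical, already-populated set of flagged addresses and a web server that treats a `None` return as “block the request.” The function `choose_response` and its behavior are illustrative only, not Spawning.ai’s API.

```python
from ipaddress import IPv4Address, IPv6Address, ip_address
from typing import Optional, Set, Union

IP = Union[IPv4Address, IPv6Address]


def choose_response(client_ip: str, flagged: Set[IP], original: bytes,
                    decoy: Optional[bytes] = None) -> Optional[bytes]:
    """Hypothetical helper: decide what to send for an image request.

    Ordinary visitors get the original image. A request from a flagged
    scraper IP gets the decoy image if one is configured; otherwise None,
    which the (assumed) web server treats as "block this request".
    """
    if ip_address(client_ip) in flagged:
        return decoy
    return original


# Example addresses come from the reserved documentation ranges.
flagged = {ip_address("203.0.113.7")}
print(choose_response("198.51.100.2", flagged, b"artwork"))           # prints b'artwork'
print(choose_response("203.0.113.7", flagged, b"artwork"))            # prints None
print(choose_response("203.0.113.7", flagged, b"artwork", b"decoy"))  # prints b'decoy'
```

Parsing with `ip_address` normalizes the string, so IPv4 and IPv6 clients are compared against the flagged set the same way.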

Kin.art, another tool that launched this week, takes a different approach. Unlike Nightshade and other programs that cryptographically modify an image, Kin masks parts of the image and swaps its metatags, making it more difficult to use in model training.

Nightshade’s critics claim that the program is a “virus,” or complain that using it will “hurt the open source community.” In a screenshot posted on Reddit in the months before Nightshade’s release, a Discord user accused Nightshade of “cyber warfare/terrorism.” Another Reddit user who inadvertently went viral on X questioned Nightshade’s legality, comparing it to “hacking a vulnerable computer system to disrupt its operation.”

Believing that Nightshade is illegal because it is “intentionally disrupting the intended purpose” of a generative AI model, as the Reddit poster states, is absurd. Zhao asserted that Nightshade is perfectly legal. It’s not “magically hopping into model training pipelines and then killing everyone,” Zhao said; the model trainers are voluntarily scraping images, both shaded and not, and AI companies are profiting off of it.

The ultimate goal of Glaze and Nightshade is to incur an “incremental price” on each piece of data scraped without permission, until training models on unlicensed data is no longer tenable. Ideally, companies will have to license uncorrupted images to train their models, ensuring that artists give consent and are compensated for their work.

It’s been done before: Getty Images and Nvidia recently launched a generative AI tool trained entirely on Getty’s extensive library of stock photos. Subscribing customers pay a fee determined by how many photos they want to generate, and photographers whose work was used to train the model receive a portion of the subscription revenue. Payouts are determined by how much of the photographer’s content was contributed to the training set, and the “performance of that content over time,” Wired reported.

Zhao clarified that he isn’t anti-AI, and pointed out that AI has immensely useful applications that aren’t so ethically fraught. In the world of academia and scientific research, advancements in AI are cause for celebration. While most of the marketing hype and panic around AI really refers to generative AI, traditional AI has been used to develop new medications and combat climate change, he said.

“None of these things require generative AI. None of these things require pretty pictures, or make up facts, or have a user interface between you and the AI,” Zhao said. “It’s not a core part for most fundamental AI technologies. But it is the case that these things interface so easily with people. Big Tech has really grabbed onto this as an easy way to make profit and engage a much wider portion of the population, as compared to a more scientific AI that actually has fundamental, breakthrough capabilities and amazing applications.”

The major players in tech, whose funding and resources dwarf those of academia, are largely pro-AI. They have no incentive to fund projects that are disruptive and yield no financial gain. Zhao is staunchly opposed to monetizing Glaze and Nightshade, or ever selling the projects’ IP to a startup or corporation. Artists like McKernan are grateful for a reprieve from subscription fees, which are nearly ubiquitous across software used in creative industries.

“Artists, myself included, are feeling just exploited at every turn,” McKernan said. “So when something is given to us freely as a resource, I know we’re appreciative.”

The team behind Nightshade, which consists of Zhao, Ph.D. student Shawn Shan, and several grad students, has been funded by the university, traditional foundations and government grants. But to sustain its research, Zhao acknowledged, the team will likely have to figure out a “nonprofit structure” and work with arts foundations. He added that the team still has a “few more tricks” up its sleeve.

“For a long time research was done for the sake of research, expanding human knowledge. But I think something like this, there is an ethical line,” Zhao said. “The research for this matters … those who are most vulnerable to this, they tend to be the most creative, and they tend to have the least support in terms of resources. It’s not a fair fight. That’s why we’re doing what we can to help balance the battlefield.”