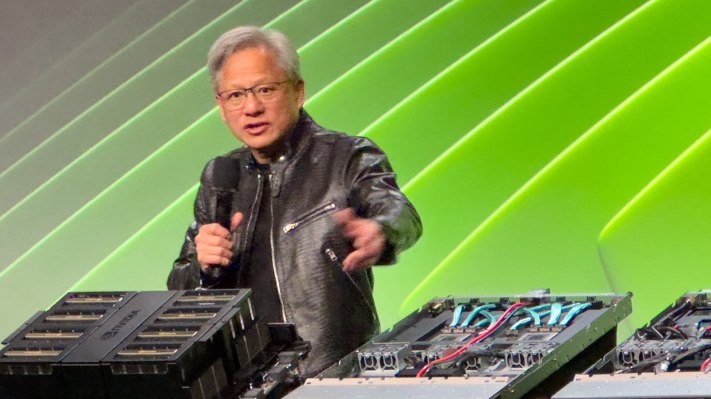

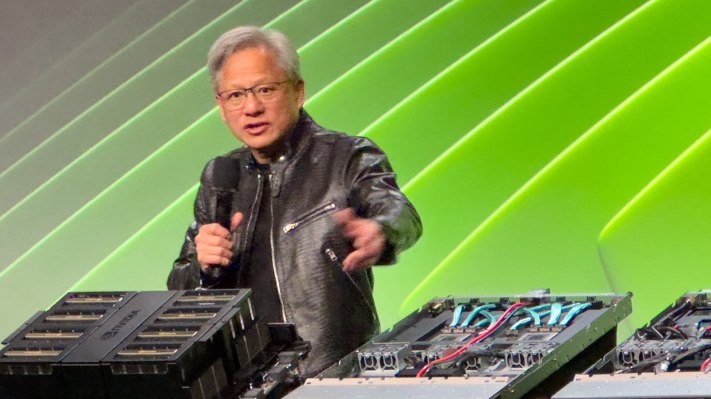

Artificial General Intelligence (AGI), also known as "strong AI," "full AI," "human-level AI" or "general intelligent action," represents a significant future leap in the field of artificial intelligence. Unlike narrow AI, which is tailored for specific tasks (such as detecting product flaws, summarizing the news, or building you a website), AGI will be able to perform a broad spectrum of cognitive tasks at or above human levels. Addressing the press this week at Nvidia's annual GTC developer conference, CEO Jensen Huang appeared to be getting really bored of discussing the subject, not least because, he says, he finds himself misquoted a lot.

The frequency of the question makes sense: the concept raises existential questions about humanity's role in, and control of, a future where machines can outthink, outlearn and outperform people in virtually every domain. The core of this concern lies in the unpredictability of AGI's decision-making processes and objectives, which might not align with human values or priorities (an idea explored in depth in science fiction since at least the 1940s). There is concern that once AGI reaches a certain level of autonomy and capability, it might become impossible to contain or control, leading to scenarios where its actions cannot be predicted or reversed.

When the sensationalist press asks for a timeframe, it is often baiting AI professionals into putting a timeline on the end of humanity, or at least of the current status quo. Needless to say, AI CEOs aren't always eager to tackle the subject.

Huang, however, spent some time telling the press what he does think about the topic. Predicting when we will see a passable AGI depends on how you define AGI, Huang argues, and he draws a couple of parallels. Even with the complications of time zones, you know when New Year happens and 2025 rolls around. If you're driving to the San Jose Convention Center (where this year's GTC conference is being held), you generally know you've arrived when you can see the enormous GTC banners. The crucial point is that we can agree on how to measure that you've arrived where you were hoping to go, whether temporally or geospatially.

"If we specified AGI to be something very specific, a set of tests where a software program can do very well, or maybe 8% better than most people, I believe we will get there within 5 years," Huang explains. He suggests that the tests could be a legal bar exam, logic tests, economic tests or perhaps the ability to pass a pre-med exam. Unless the questioner is able to be very specific about what AGI means in the context of the question, he's not willing to make a prediction. Fair enough.

AI hallucination is solvable

In Tuesday's Q&A session, Huang was asked what to do about AI hallucinations: the tendency for some AIs to make up answers that sound plausible but aren't based in fact. He appeared visibly frustrated by the question, and suggested that hallucinations are easily solvable by making sure that answers are well-researched.

"Add a rule: For every single answer, you have to look up the answer," Huang says, referring to this practice as "retrieval-augmented generation." He describes an approach very similar to basic media literacy: examine the source and the context. Compare the facts contained in the source to known truths, and if the answer is factually inaccurate, even partially, discard the whole source and move on to the next one. "The AI shouldn't just answer, it should do research first, to determine which of the answers are the best."
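To make the "look it up first, discard any partially wrong source" rule concrete, here is a minimal Python sketch. It is not Nvidia's implementation and not a full retrieval-augmented generation pipeline (real systems retrieve documents with a search or vector index and pass them to a language model). The `Source` type, the `KNOWN_TRUTHS` table and both functions are hypothetical, chosen only to illustrate the filtering step Huang describes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Source:
    """Hypothetical retrieved document, reduced to the claims it makes."""
    name: str
    claims: dict[str, str]  # fact key -> value this source asserts


# Hypothetical table of facts the system already trusts.
KNOWN_TRUTHS = {"boiling_point_water_c": "100", "capital_of_france": "Paris"}


def source_is_consistent(source: Source, known: dict[str, str]) -> bool:
    """Reject a source if ANY claim it makes contradicts a known truth.

    Claims about facts not in `known` cannot be checked, so they pass.
    """
    return all(known.get(key, value) == value for key, value in source.claims.items())


def answer_with_retrieval(
    question_key: str, sources: list[Source], known: dict[str, str]
) -> str | None:
    """Look the answer up in vetted sources instead of answering from memory."""
    for source in sources:
        if not source_is_consistent(source, known):
            continue  # partially wrong: discard the whole source, move on
        if question_key in source.claims:
            return source.claims[question_key]
    return None  # no trustworthy source had an answer
```

For example, a source that correctly names Canberra as Australia's capital but also claims water boils at 90°C is thrown out entirely, and the answer comes from the next source that survives the check. Returning `None` rather than guessing leaves room for the "I don't know" behavior discussed next.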

For mission-critical answers, such as health advice or similar, Nvidia's CEO suggests that checking multiple resources and known sources of truth may be the way forward. Of course, this means that the generator creating an answer needs to have the option to say, "I don't know the answer to your question," or "I can't get to a consensus on what the right answer to this question is," or even something like, "Hey, the Super Bowl hasn't happened yet, so I don't know who won."
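The multi-source check with an option to abstain can be sketched as a simple majority vote. This is an illustrative assumption, not a method Huang or Nvidia described: each entry in `candidates` is the answer one independent source produced (`None` meaning that source had none), and the hypothetical `min_agreement` threshold of 75% decides when the sources count as agreeing.

```python
from __future__ import annotations

from collections import Counter


def consensus_answer(candidates: list[str | None], min_agreement: float = 0.75) -> str:
    """Return the agreed answer, or abstain when sources are silent or split.

    `min_agreement` is a hypothetical threshold: the share of ALL consulted
    sources (including ones with no answer) that must give the same answer.
    """
    answered = [c for c in candidates if c is not None]
    if not answered:
        return "I don't know the answer to your question."
    top, count = Counter(answered).most_common(1)[0]
    if count / len(candidates) < min_agreement:
        return "I can't get to a consensus on what the right answer to this question is."
    return top
```

Counting silent sources in the denominator is a deliberate choice in this sketch: for a mission-critical question, a source that could not answer weakens confidence rather than being ignored.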