SAN JOSE — “I hope you realize this is not a concert,” said Nvidia President Jensen Huang to an audience so large it filled up the SAP Center in San Jose. This is how he introduced what is perhaps the exact opposite of a concert: the company’s GTC event. “You have arrived at a developers conference. There will be a lot of science describing algorithms, computer architecture, mathematics. I sense a very heavy weight in the room; all of a sudden, you’re in the wrong place.”

It may not have been a rock concert, but the leather-jacket-wearing 61-year-old CEO of the world’s third-most-valuable company by market cap certainly had a fair number of fans in the audience. The company launched in 1993, with a mission to push general computing past its limits. “Accelerated computing” became the rallying cry for Nvidia: Wouldn’t it be great to make chips and boards that were specialized, rather than for a general purpose? Nvidia chips gave graphics-hungry gamers the tools they needed to play games in higher resolution, with higher quality and higher frame rates.

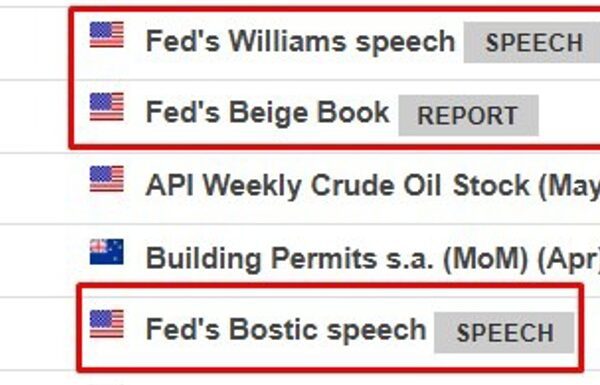

It’s not a huge surprise, perhaps, that the Nvidia CEO drew parallels to a concert. The venue was, in a word, very concert-y. Image Credits: TechCrunch / Haje Kamps

Monday’s keynote was, in a way, a return to the company’s original mission. “I want to show you the soul of Nvidia, the soul of our company, at the intersection of computer graphics, physics and artificial intelligence, all intersecting inside a computer.”

Then, for the next two hours, Huang did a rare thing: He nerded out. Hard. Anyone who had come to the keynote expecting him to pull a Tim Cook, with a slick, audience-focused keynote, was bound to be disappointed. Overall, the keynote was tech-heavy, acronym-riddled, and unapologetically a developer conference.

We need bigger GPUs

Graphics processing units (GPUs) are where Nvidia got its start. If you’ve ever built a computer, you’re probably thinking of a graphics card that goes in a PCI slot. That’s where the journey started, but we’ve come a long way since then.

The company announced its brand-new Blackwell platform, which is an absolute monster. Huang says that the core of the processor was “pushing the limits of physics how big a chip could be.” It combines the power of two chips, offering speeds of 10 Tbps.

“I’m holding around $10 billion worth of equipment here,” Huang said, holding up a prototype of Blackwell. “The next one will cost $5 billion. Luckily for you all, it gets cheaper from there.” Putting a bunch of these chips together can crank out some truly impressive power.

The previous generation of AI-optimized GPU was called Hopper. Blackwell is between 2 and 30 times faster, depending on how you measure it. Huang explained that it took 8,000 GPUs, 15 megawatts and 90 days to create the GPT-MoE-1.8T model. With the new system, you could use just 2,000 GPUs and use 25% of the power.
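For a rough sense of scale, those figures can be turned into a back-of-the-envelope energy comparison. The sketch below assumes the Blackwell run also takes 90 days and draws a constant 25% of the Hopper run's power; neither assumption is stated in the figures quoted here, and the function name is purely illustrative.

```python
def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in megawatt-hours for a run drawing constant power."""
    return power_mw * days * 24  # 24 hours per day

# Hopper figures as quoted: 8,000 GPUs, 15 MW, 90 days.
hopper_gpus = 8000
hopper_mwh = training_energy_mwh(15, 90)

# Blackwell figures as quoted: 2,000 GPUs, 25% of the power.
# Assumption: the same 90-day duration.
blackwell_gpus = 2000
blackwell_mwh = training_energy_mwh(15 * 0.25, 90)

gpu_reduction = hopper_gpus / blackwell_gpus
energy_saved_mwh = hopper_mwh - blackwell_mwh

print(f"Hopper run:    {hopper_mwh:,.0f} MWh")
print(f"Blackwell run: {blackwell_mwh:,.0f} MWh")
print(f"GPU count reduced by a factor of {gpu_reduction:.0f}")
print(f"Energy saved:  {energy_saved_mwh:,.0f} MWh")
```

Under those assumptions, a quarter of the power over the same period means a quarter of the energy, on a quarter of the GPUs.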

These GPUs are pushing a fantastic amount of data around, which is a very good segue into another topic Huang talked about.

What’s next

Nvidia rolled out a new set of tools for automakers working on self-driving cars. The company was already a major player in robotics, but it doubled down with new tools for roboticists to make their robots smarter.

The company also introduced Nvidia NIM, a software platform aimed at simplifying the deployment of AI models. NIM leverages Nvidia’s hardware as a foundation and aims to accelerate companies’ AI initiatives by providing an ecosystem of AI-ready containers. It supports models from various sources, including Nvidia, Google and Hugging Face, and integrates with platforms like Amazon SageMaker and Microsoft Azure AI. NIM will expand its capabilities over time, including tools for generative AI chatbots.

“Anything you can digitize: So long as there is some structure where we can apply some patterns, means we can learn the patterns,” Huang said. “And if we can learn the patterns, we can understand the meaning. When we understand the meaning, we can generate it as well. And here we are, in the generative AI revolution.”