When I first started shopping for makeup, I quickly learned the importance of skin tones and undertones. As someone with a light-medium skin tone and yellow undertones, I found that foundations that were too light and pink would leave my skin pallid and ashen. At the time, makeup shade ranges were extremely limited, and the alienation I often felt as a Chinese American growing up in Appalachia was amplified whenever a sales associate would sadly proclaim there was no foundation shade that matched me.

Only recently has skin tone diversity become a greater concern for cosmetics companies. The launch of Fenty Beauty by Rihanna in 2017 with 40 foundation shades revolutionized the industry in what has been dubbed the “Fenty effect,” and brands now compete to show greater skin tone inclusivity. Since then, I’ve personally felt how meaningful it is to be able to walk into a store and buy products off the shelf that acknowledge your existence.

Hidden skin tone bias in AI

As an AI ethics research scientist, when I first began auditing computer vision models for bias, I found myself back in the world of limited shade ranges. In computer vision, where visual information from images and videos is processed for tasks like facial recognition and verification, AI biases (disparities in how well AI performs for different groups) have been hidden by the field’s narrow understanding of skin tones. In the absence of data to measure racial bias directly, AI developers typically consider bias only along light versus dark skin tone categories. As a result, while there have been significant strides in awareness of facial recognition bias against individuals with darker skin tones, bias outside of this dichotomy is rarely considered.

The skin tone scale most commonly used by AI developers is the Fitzpatrick scale, despite the fact that it was originally developed to characterize skin tanning or burning for Caucasians. The deepest two shades were only later added to capture “brown” and “black” skin tones. The resulting scale looks similar to old-school foundation shade ranges, with only six options.

This narrow conception of bias is highly exclusionary. In one of the few studies that looked at racial bias in facial recognition technologies, the National Institute of Standards and Technology found that such technologies are biased against groups outside of this dichotomy, including East Asians, South Asians, and Indigenous Americans, but such biases are rarely checked for.

After several years of work with researchers on my team, we found that computer vision models are biased not only along light versus dark skin tones but also along red versus yellow skin hues. In fact, AI models performed less accurately for those with darker or more yellow skin tones, and these skin tones are significantly under-represented in major AI datasets. Our work introduced a two-dimensional skin tone scale to enable AI developers to identify biases along light versus dark tones and red versus yellow hues going forward. This discovery was vindicating to me, both scientifically and personally.
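For readers who want a concrete picture of what a two-dimensional description of skin color could look like, here is a minimal sketch. It rests on an assumption that is not stated in this article: that the light-versus-dark axis can be approximated by CIELAB lightness (L*) and the red-versus-yellow axis by the CIELAB hue angle. The function name `skin_color_coordinates` and the two sample swatches are hypothetical, chosen only for illustration, and this should not be read as the exact method used in our research.

```python
import math

# Assumption: CIELAB (D65 white point) is used purely for illustration;
# the article does not say how the two dimensions are actually computed.
D65_WHITE = (0.95047, 1.0, 1.08883)


def _srgb_to_linear(channel):
    """Undo sRGB gamma encoding for one 0-255 channel value."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _lab_f(t):
    """Piecewise cube-root function from the CIELAB definition."""
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def skin_color_coordinates(rgb):
    """Return (lightness, hue_angle_degrees) for an sRGB color.

    lightness: CIELAB L*, 0 (black) to 100 (white); higher means lighter.
    hue_angle: atan2(b*, a*) in degrees; for typical skin colors a value
               nearer 0 reads as redder and nearer 90 as yellower.
    """
    r, g, b = (_srgb_to_linear(v) for v in rgb)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b

    fx = _lab_f(x / D65_WHITE[0])
    fy = _lab_f(y / D65_WHITE[1])
    fz = _lab_f(z / D65_WHITE[2])

    lightness = 116 * fy - 16
    a_star = 500 * (fx - fy)
    b_star = 200 * (fy - fz)
    hue_angle = math.degrees(math.atan2(b_star, a_star))
    return lightness, hue_angle


# Hypothetical swatches: one with a yellow undertone, one with a pink undertone.
yellow_undertone = skin_color_coordinates((224, 172, 105))
pink_undertone = skin_color_coordinates((230, 170, 160))
print("yellow undertone (L*, hue):", yellow_undertone)
print("pink undertone   (L*, hue):", pink_undertone)
```

In this sketch the two swatches have broadly similar lightness but clearly different hue angles, which is the kind of difference a light-versus-dark scale alone cannot register. An audit could then group model accuracy by both coordinates instead of by lightness only.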

High-stakes AI

Like discrimination in other contexts, a pernicious feature of AI bias is the gnawing uncertainty it creates. For example, if I’m stopped at the border because a facial recognition model cannot match my face to my passport, but the technology works well for my white colleagues, is that due to bias or just bad luck? As AI becomes increasingly pervasive in everyday life, small biases can accumulate, resulting in some people living as second-class citizens, systematically unseen or mischaracterized. This is especially concerning for high-stakes applications like facial recognition for identifying criminal suspects or pedestrian detection for self-driving cars.

While detecting AI bias against people with different skin tones is not a panacea, it is an important step forward at a time when there is a growing push to address algorithmic discrimination, as outlined in the EU AI Act and President Joe Biden’s AI executive order. Not only does this research enable more thorough audits of AI models, but it also emphasizes the importance of including diverse perspectives in AI development.

When explaining this research, I’ve been struck by how intuitive our two-dimensional scale seems to people who have had the experience of purchasing cosmetics, one of the rare times when you must categorize your skin tone and undertone. It saddens me to think that perhaps AI developers have relied on a narrow conception of skin tone thus far because there isn’t more diversity, especially intersectional diversity, in this field. My own dual identities as an Asian American and a woman who had experienced the challenges of skin tone representation were what inspired me to explore this potential solution in the first place.

We have seen the impact diverse perspectives have had in the cosmetics industry thanks to Rihanna and others, so it is critical that the AI industry learn from this. Failing to do so risks creating a world where many find themselves erased or excluded by our technologies.

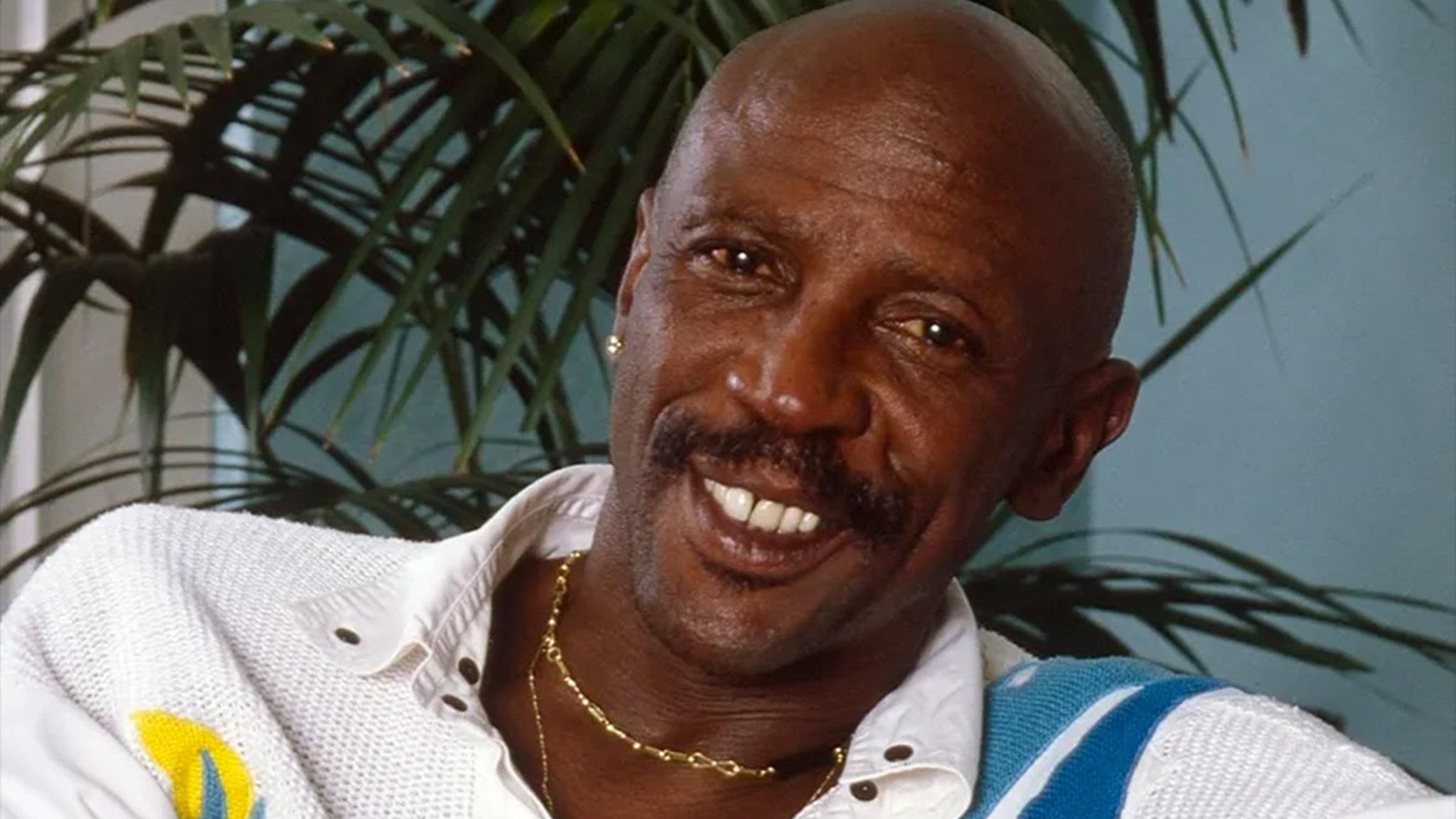

Alice Xiang is a distinguished researcher, accomplished author, and governance leader who has devoted her career to uncovering the most pernicious facets of AI, many of which are rooted in data and the AI development process. She is the Global Head of AI Ethics at Sony Group Corporation and Lead Research Scientist at Sony AI.

The opinions expressed in Fortune.com commentary pieces are solely the views of their authors and do not necessarily reflect the opinions and beliefs of Fortune.