When Cloudflare accused AI search engine Perplexity on Monday of stealthily scraping websites while ignoring site owners' specific methods of blocking it, this wasn't a clear-cut case of an AI web crawler gone wild.

Many people came to Perplexity’s defense. They argued that Perplexity accessing sites in defiance of the website owner’s wishes, while controversial, is acceptable. And this is a controversy that will certainly grow as AI agents flood the internet: Should an agent accessing a website on behalf of its user be treated like a bot? Or like a human making the same request?

Cloudflare is known for providing anti-bot crawling and other web security services to millions of websites. Essentially, Cloudflare's test involved creating a new website on a fresh domain that had never been crawled by any bot, adding a robots.txt file that specifically blocked Perplexity's known AI crawling bots, and then asking Perplexity about the website's content. Perplexity answered the question.
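Part of why the test is contested is that robots.txt is a voluntary convention keyed on the name a client announces in its User-Agent header. A minimal sketch using Python's standard-library `urllib.robotparser` illustrates this. It assumes the blocked tokens are `PerplexityBot` and `Perplexity-User` and uses an illustrative Chrome-on-macOS user-agent string; the file contents and the `example.com` URL are hypothetical, not Cloudflare's actual test setup. The same rules that refuse the declared crawlers simply do not match a request that presents itself as an ordinary browser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a fresh test domain that opts out of
# Perplexity's declared crawlers. The user-agent tokens are assumptions.
ROBOTS_TXT = """\
User-agent: PerplexityBot
User-agent: Perplexity-User
Disallow: /
"""

# An illustrative desktop Chrome-on-macOS user-agent string.
CHROME_ON_MAC = (
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/124.0.0.0 Safari/537.36"
)


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    # parse() also records a check time, which can_fetch() requires
    # before it will report any URL as allowed.
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return whether the rules permit this user agent to fetch this URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    url = "https://example.com/secret-page"
    for ua in ("PerplexityBot", "Perplexity-User", CHROME_ON_MAC):
        # Expect allowed=False for both Perplexity tokens, True for the browser string.
        print(f"{ua[:40]!r}: allowed={allowed(rp, ua, url)}")
```

Because the file has no catch-all `User-agent: *` group, `can_fetch` falls through to allowing any agent that no group names, which is why the browser string comes back as allowed even though the content is the same.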

Cloudflare researchers found the AI search engine used “a generic browser intended to impersonate Google Chrome on macOS” when its web crawler itself was blocked. Cloudflare CEO Matthew Prince posted the research on X, writing, “Some supposedly ‘reputable’ AI companies act more like North Korean hackers. Time to name, shame, and hard block them.”

But many people disagreed with Prince’s assessment that this was actual bad behavior. Those defending Perplexity on sites like X and Hacker News pointed out that what Cloudflare seemed to document was the AI accessing a specific public website when its user asked about that specific website.

“If I as a human request a website, then I should be shown the content,” one person on Hacker News wrote, adding, “why would the LLM accessing the website on my behalf be in a different legal category as my Firefox web browser?”

A Perplexity spokesperson previously denied to TechCrunch that the bots were the company's and called Cloudflare's blog post a sales pitch for Cloudflare. Then on Tuesday, Perplexity published a blog post in its own defense (which also broadly attacked Cloudflare), claiming the behavior came from a third-party service it uses occasionally.

But the crux of Perplexity's post made the same appeal as its online defenders.

“The difference between automated crawling and user-driven fetching isn’t just technical — it’s about who gets to access information on the open web,” the post said. “This controversy reveals that Cloudflare’s systems are fundamentally inadequate for distinguishing between legitimate AI assistants and actual threats.”

Perplexity's accusations aren't exactly fair, either. One argument that Prince and Cloudflare used to call out Perplexity's methods was that OpenAI doesn't behave the same way.

“OpenAI is an example of a leading AI company that follows these best practices. They respect robots.txt and do not try to evade either a robots.txt directive or a network level block. And ChatGPT Agent is signing http requests using the newly proposed open standard Web Bot Auth,” Prince wrote in his post.

Web Bot Auth is a Cloudflare-supported standard being developed by the Internet Engineering Task Force that aims to create a cryptographic method for identifying AI agent web requests.

The debate comes as bot activity reshapes the internet. As TechCrunch has previously reported, bots seeking to scrape massive amounts of content to train AI models have become a menace, especially to smaller sites.

For the first time in the internet's history, bot activity is currently outstripping human activity online, with AI traffic accounting for over 50%, according to Imperva's Bad Bot report released last month. Most of that activity is coming from LLMs. But the report also found that malicious bots now make up 37% of all internet traffic. That activity includes everything from persistent scraping to unauthorized login attempts.

Until LLMs, the internet generally accepted that, given how often bot activity was malicious, websites could and should block most of it using CAPTCHAs and other services (such as Cloudflare). Websites also had a clear incentive to work with specific good actors, such as Googlebot, guiding them on what not to index through robots.txt. Google indexed the internet and, in return, sent traffic to sites.

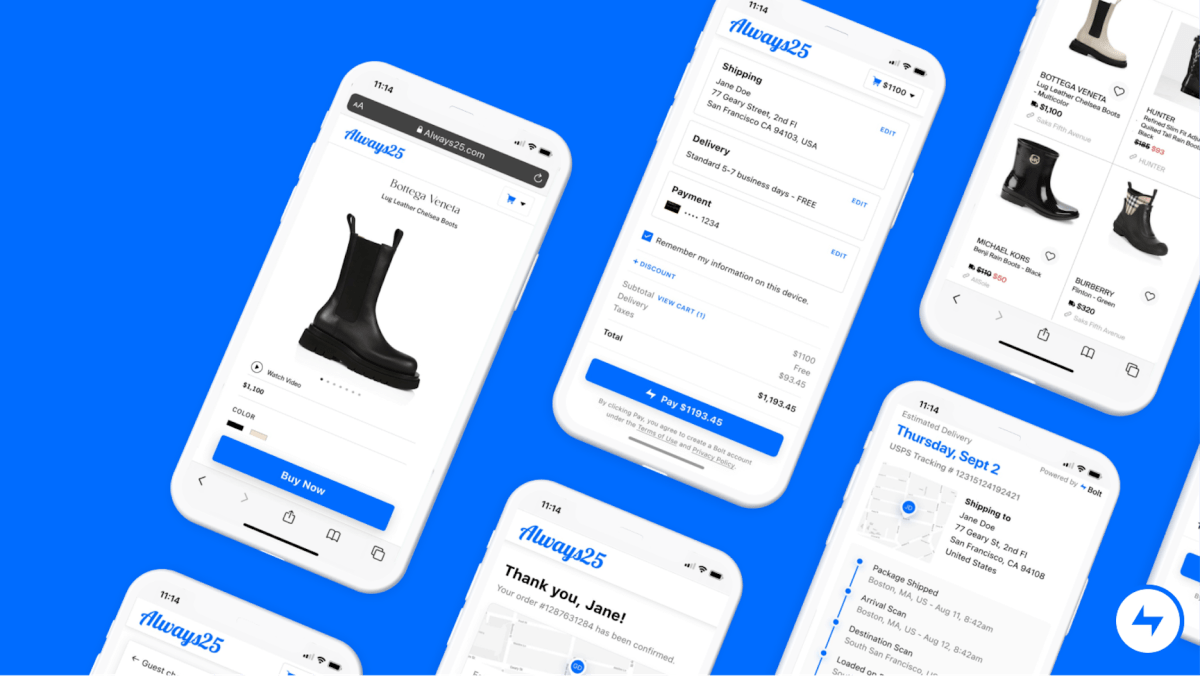

Now, LLMs are eating an increasing amount of that traffic. Gartner predicts that search engine volume will drop by 25% by 2026. Right now humans tend to click website links from LLMs at the point they are most valuable to the website, which is when they are ready to conduct a transaction.

But if humans adopt agents as the tech industry predicts they will — to arrange our travel, book our dinner reservations, and shop for us — would websites hurt their business interests by blocking them? The debate on X captured the dilemma perfectly:

“I WANT perplexity to visit any public content on my behalf when I give it a request/task!” wrote one person in response to Cloudflare calling Perplexity out.

“What if the site owners don’t want it? they just want you [to] directly visit the home, see their stuff” argued another, pointing out that the site owner who created the content wants the traffic and potential ad revenue, not to let Perplexity take it.

“This is why I can’t see ‘agentic browsing’ really working — much harder problem than people think. Most website owners will just block,” a third predicted.