The latest examples of generative AI video are wowing people with their accuracy, but they also underline the potential threat that we now face from artificial content, which could soon be used to depict unreal yet convincing scenes that could influence people’s opinions, and their subsequent responses.

Like, for instance, how they vote.

With this in mind, late last week, at the 2024 Munich Security Conference, representatives from almost every major tech company agreed to a new pact to implement “reasonable precautions” in preventing artificial intelligence tools from being used to disrupt democratic elections.

As per the “Tech Accord to Combat Deceptive Use of AI in 2024 Elections”:

“2024 will bring more elections to more people than any year in history, with more than 40 countries and more than four billion people choosing their leaders and representatives through the right to vote. At the same time, the rapid development of artificial intelligence, or AI, is creating new opportunities as well as challenges for the democratic process. All of society will have to lean into the opportunities afforded by AI and to take new steps together to protect elections and the electoral process during this exceptional year.”

Executives from Google, Meta, Microsoft, OpenAI, X, and TikTok are among those who’ve agreed to the new accord, which will ideally see broader cooperation and coordination to help address AI-generated fakes before they can have an impact.

The accord lays out seven key elements of focus, which all signatories have agreed to, in principle, as key measures.

The main benefit of the initiative is the commitment from each company to work together to share best practices, and “explore new pathways to share best-in-class tools and/or technical signals about Deceptive AI Election Content in response to incidents”.

The agreement also sets out an ambition for each “to engage with a diverse set of global civil society organizations, academics” in order to inform broader understanding of the global risk landscape.

It’s a positive step, though it’s also non-binding, and it’s more of a goodwill gesture on the part of each company to work towards the best solutions. As such, it doesn’t lay out definitive actions to be taken, or penalties for failing to take them. But it does, ideally, set the stage for broader collaborative action to stop misleading AI content before it can have a significant impact.

Though that impact is relative.

For example, in the recent Indonesian election, various AI deepfake elements were employed to sway voters, including a video depiction of deceased leader Suharto designed to inspire support, and cartoonish versions of some candidates, as a means to soften their public personas.

These were AI-generated, which was clear from the start, and no one was going to be misled into believing that these were actual images of how the candidates look, nor that Suharto had returned from the dead. But the impact of such content can still be significant, even with that knowledge, which underlines its power to shape perception, even if it’s subsequently removed, labeled, etc.

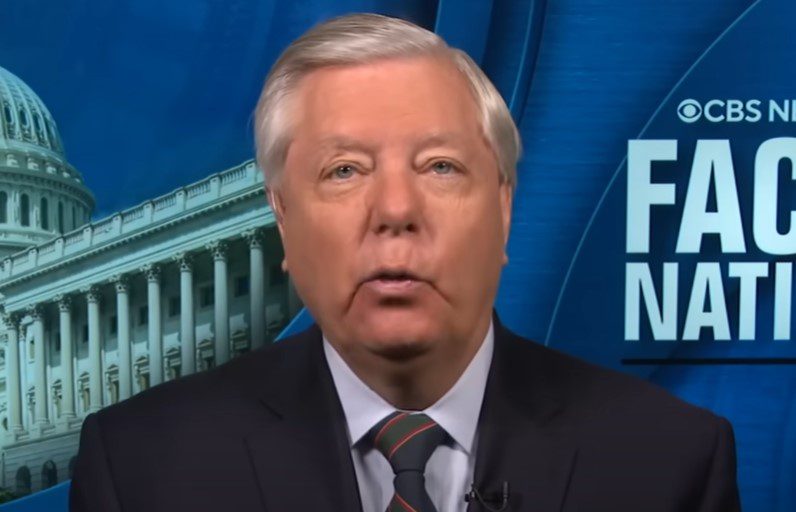

That could be the real risk. If an AI-generated image of Joe Biden or Donald Trump has enough resonance, the origin of it could be trivial, as it could still sway voters based on the depiction, whether it’s real or not.

Perception matters, and smart use of deepfakes will have an impact, and will sway some voters, regardless of safeguards and precautions.

Which is a risk that we now have to bear, given that such tools are already readily available, and like social media before, we’re going to be assessing the impacts in retrospect, as opposed to plugging holes ahead of time.

Because that’s the way technology works: we move fast, we break things. Then we pick up the pieces.