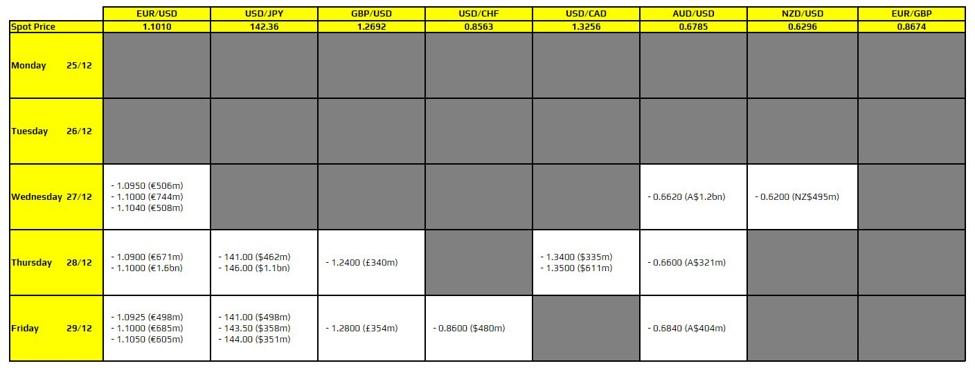

How do you translate “dim sum”? Many English speakers would find the question strange, knowing the term refers to the large array of small dishes that accompanies a Cantonese-style brunch—and so doesn’t need translation.

But words like “dim sum” are a challenge for developers like Jacky Chan, who launched a Cantonese large language model last year through his startup Votee. It might be obvious to a human translator which words are loanwords and which need direct translation. Yet it’s less intuitive for machines.

“It’s not natural enough,” Chan says. “When you see it, you know it’s not something a human writes.”

Translation troubles are part of a growing list of issues that arise when today’s AI models, strongest in English and other major languages, try to work in an array of smaller tongues still spoken by tens of millions of people.

When AI “models encounter a word they don’t know or that doesn’t exist in another culture, they will simply make up a translation,” explains Aliya Bhatia, a senior policy analyst at the Center for Democracy & Technology, where she researches issues related to multilingual AI. “As a result, many machine-created datasets could feature mistranslations, words that no native speaker actually uses in a specific language.”

LLMs need data, and lots of it. Text from books, articles and websites is broken down into smaller word sequences to form a model’s training dataset. From this, LLMs learn how to predict the next word in a sequence, eventually generating text.
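
To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is a toy stand-in, not how production LLMs are built: real models use neural networks and sub-word tokens, while this example simply counts which word follows which. The function names `train_bigram` and `predict_next` and the sample corpus are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; a real training set would be vastly larger.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often
print(predict_next(model, "dim"))  # None: the word never appeared in the data
```

The second call hints at the core problem: a model has nothing to go on for words it rarely or never saw during training.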

AI can now generate text remarkably well—at least, it can in English. In other languages, performance lags significantly. Roughly half of all web content is in English, meaning there’s no shortage of digital resources for LLMs to learn from. Many other languages do not enjoy this same abundance.

Low-resource languages

So-called low-resource languages are those with limited online data. Endangered languages, no longer being passed down to younger generations, clearly fall into this category. But widely spoken languages like Cantonese, Vietnamese and Bahasa Indonesia are also considered low-resource.

One reason could be limited internet access, which would prevent the creation of digital content. Another could be government regulation, which might limit what’s available online. Indonesia, for example, can remove online content without offering a way to appeal decisions. The resulting self-censorship may mean that available data in some regional languages might not represent authentic local culture.

This resource gap leads to a performance gap: Non-English LLMs are more likely to produce gibberish or inaccurate answers. LLMs also struggle with languages that don’t use Latin script, the set of letters used in English, as well as those with tonal features that are hard to represent in writing or code.

Currently, the best-performing models work in English and, to a lesser extent, Mandarin Chinese. That reflects where the world’s biggest tech companies are based. But outside of San Francisco and Hangzhou, a legion of developers, large and small, are trying to make AI work for everyone.

South Korean internet firm Naver has built an LLM, HyperCLOVA X, which it claims is trained on 6,500 times more Korean data than GPT-4. Naver is also working in markets like Saudi Arabia and Thailand in a bid to expand its business creating “sovereign AI,” or AI tailored to a specific country’s needs. “We focus on what companies and governments that want to use AI would want, and what needs Big Tech can’t fulfill,” CEO Choi Soo-Yeon told Fortune last year.

In Indonesia, telecom operator Indosat and tech startup GoTo are collaborating to launch a 70-billion-parameter LLM that operates in Bahasa Indonesia as well as five other local languages, including Javanese, Balinese, and Bataknese.

One hurdle is scale. The most powerful LLMs are massive, built from billions of numerical values, known as parameters, that are adjusted during training. OpenAI’s GPT-4 is estimated to have around 1.8 trillion parameters. DeepSeek’s R1 has 671 billion.

Non-English LLMs seriously struggle to achieve this kind of scale. The Southeast Asian Languages in One Model (SEA-LION) project has trained two models from scratch: One with 3 billion parameters and one with 7 billion, much smaller than leading English and Chinese models.

Chan, from Votee, faces these struggles when dealing with Cantonese, spoken by 85 million people across southern China and Hong Kong. Cantonese uses different grammar for formal writing compared to informal writing and speech. Available digital data is scarce and often low-quality.

Training on digitized Cantonese texts is like “learning from a library with many books, but they have lots of typos, they are poorly translated, or they’re just plain wrong,” says Chan.

Without a comprehensive dataset, an LLM can’t produce complete results. Data for low-resource languages often skews towards formal texts (legal documents, religious texts, or Wikipedia entries), since these are more likely to be digitized. This bias can distort an LLM’s tone, vocabulary and style, and limit its knowledge.

LLMs have no inherent sense of what is true, and so false or incomplete information will be reproduced as fact. A model trained solely on Vietnamese pop music might struggle to accurately answer questions on historical events, particularly those not related to Vietnam.

Translating English content

Turning English content into the target language is one way to supplement the otherwise-limited training data. As Chan explains, “we synthesize the data using AI so that we can have more data to do the training.”

But machine translation carries risk. It can miss linguistic nuance or cultural context. A Georgia Tech study of cultural bias in Arabic LLMs found that AI models trained on Arabic datasets still exhibited Western bias, such as referencing alcoholic beverages in Islamic religious contexts. It turned out that much of the pre-training data for these models came from web-crawled Arabic content that was machine-translated from English, allowing cultural values to sneak through.

In the long term, AI-generated content might end up polluting low-resource language datasets. Chan likens it to “a photocopy of a photocopy,” with each iteration degrading the quality. In 2024, a paper in Nature warned of “model collapse,” where AI-generated text could contaminate the training data for future LLMs, leading to worse performance.

The threat is even greater for low-resource languages. With less genuine content out there, AI-generated content could quickly end up making up a larger share of what’s online in a given language.
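
A rough back-of-the-envelope calculation shows why. The sketch below uses made-up document counts, chosen only for illustration and not drawn from any study; `synthetic_share` is a hypothetical helper that computes what fraction of a language's online text is AI-generated.

```python
def synthetic_share(genuine_docs, synthetic_docs):
    """Fraction of all documents in a language that are AI-generated."""
    return synthetic_docs / (genuine_docs + synthetic_docs)


# Assumed, illustrative numbers: the same volume of AI-generated text
# is added to a high-resource and a low-resource language.
added_synthetic = 1_000_000

high_resource = synthetic_share(genuine_docs=100_000_000, synthetic_docs=added_synthetic)
low_resource = synthetic_share(genuine_docs=2_000_000, synthetic_docs=added_synthetic)

print(f"High-resource language: {high_resource:.1%} synthetic")
print(f"Low-resource language:  {low_resource:.1%} synthetic")
```

Under these assumptions, the same amount of machine-written text is a rounding error in one language and about a third of everything available in the other.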

Large businesses are starting to realize the opportunities in building non-English AI. But while these companies are key players in their respective tech sectors, they’re still much smaller than giants like Alibaba, OpenAI, and Microsoft.

Bhatia says more organizations—both for-profit and not-for-profit—need to invest in multilingual AI if this new technology is to be truly global.

“If LLMs are going to be used to equip people with access to economic opportunities, educational resources, and more, they should work in the languages people use,” she says.
