Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week, Meta released the latest in its Llama series of generative AI models: Llama 3 8B and Llama 3 70B. Capable of analyzing and writing text, the models are “open sourced,” Meta said, and intended to be a “foundational piece” of systems that developers design with their unique goals in mind.

“We believe these are the best open source models of their class, period,” Meta wrote in a blog post. “We are embracing the open source ethos of releasing early and often.”

There’s only one problem: the Llama 3 models aren’t really “open source,” at least not in the strictest definition.

Open source implies that developers can use the models how they choose, unfettered. But in the case of Llama 3, as with Llama 2, Meta has imposed certain licensing restrictions. For example, Llama models can’t be used to train other models. And app developers with over 700 million monthly users must request a special license from Meta.

Debates over the definition of open source aren’t new. But as companies in the AI space play fast and loose with the term, it’s injecting fuel into long-running philosophical arguments.

Last August, a study co-authored by researchers at Carnegie Mellon, the AI Now Institute and the Signal Foundation found that many AI models branded as “open source” come with big catches, and not just Llama. The data required to train the models is kept secret. The compute power needed to run them is beyond the reach of many developers. And the labor to fine-tune them is prohibitively expensive.

So if these models aren’t truly open source, what are they, exactly? That’s a good question; defining open source with respect to AI isn’t an easy task.

One pertinent unresolved question is whether copyright, the foundational IP mechanism open source licensing is based on, can be applied to the various components and pieces of an AI project, in particular a model’s inner scaffolding (e.g. embeddings). Then there’s the mismatch to overcome between the perception of open source and how AI actually functions: open source was devised in part to ensure that developers could study and modify code without restrictions. With AI, though, which ingredients you need to do the studying and modifying is open to interpretation.

Wading through all the uncertainty, the Carnegie Mellon study does make clear the harm inherent in tech giants like Meta co-opting the phrase “open source.”

Often, “open source” AI projects like Llama end up kicking off news cycles (free marketing) and providing technical and strategic benefits to the projects’ maintainers. The open source community rarely sees these same benefits, and, when it does, they’re marginal compared to the maintainers’.

Instead of democratizing AI, “open source” AI projects, especially those from big tech companies, tend to entrench and expand centralized power, say the study’s co-authors. That’s good to keep in mind the next time a major “open source” model release comes around.

Here are some other AI stories of note from the past few days:

- Meta updates its chatbot: Coinciding with the Llama 3 debut, Meta upgraded Meta AI, its AI chatbot across Facebook, Messenger, Instagram and WhatsApp, with a Llama 3-powered backend. It also launched new features, including faster image generation and access to web search results.

- AI-generated porn: Ivan writes about how the Oversight Board, Meta’s semi-independent policy council, is turning its attention to how the company’s social platforms are handling explicit, AI-generated images.

- Snap watermarks: Social media service Snap plans to add watermarks to AI-generated images on its platform. A translucent version of the Snap logo with a sparkle emoji, the new watermark will be added to any AI-generated image exported from the app or saved to the camera roll.

- The new Atlas: Hyundai-owned robotics company Boston Dynamics has unveiled its next-generation humanoid Atlas robot, which, in contrast to its hydraulics-powered predecessor, is all-electric and much friendlier in appearance.

- Humanoids on humanoids: Not to be outdone by Boston Dynamics, the founder of Mobileye, Amnon Shashua, has launched a new startup, Menteebot, focused on building bipedal robotics systems. A demo video shows Menteebot’s prototype walking over to a table and picking up fruit.

- Reddit, translated: In an interview with Amanda, Reddit CPO Pali Bhat revealed that an AI-powered language translation feature to bring the social network to a more global audience is in the works, along with an assistive moderation tool trained on Reddit moderators’ past decisions and actions.

- AI-generated LinkedIn content: LinkedIn has quietly started testing a new way to boost its revenues: a LinkedIn Premium Company Page subscription, which, for fees that appear to be as steep as $99/month, includes AI to write content and a suite of tools to grow follower counts.

- A Bellwether: Google parent Alphabet’s moonshot factory, X, this week unveiled Project Bellwether, its latest bid to apply tech to some of the world’s biggest problems. Here, that means using AI tools to identify natural disasters like wildfires and flooding as quickly as possible.

- Protecting kids with AI: Ofcom, the regulator charged with enforcing the U.K.’s Online Safety Act, plans to launch an exploration into how AI and other automated tools can be used to proactively detect and remove illegal content online, specifically to shield children from harmful content.

- OpenAI lands in Japan: OpenAI is expanding to Japan, with the opening of a new Tokyo office and plans for a GPT-4 model optimized specifically for the Japanese language.

More machine learnings

Image Credits: DrAfter123 / Getty Images

Can a chatbot change your mind? Swiss researchers found that not only can they, but if they’re pre-armed with some personal information about you, they can actually be more persuasive in a debate than a human with that same info.

“This is Cambridge Analytica on steroids,” said project lead Robert West from EPFL. The researchers suspect the model, GPT-4 in this case, drew from its vast stores of arguments and facts online to present a more compelling and confident case. But the outcome kind of speaks for itself. Don’t underestimate the power of LLMs in matters of persuasion, West warned: “In the context of the upcoming US elections, people are concerned because that’s where this kind of technology is always first battle tested. One thing we know for sure is that people will be using the power of large language models to try to swing the election.”

Why are these models so good at language anyway? That’s one area there’s a long history of research into, going back to ELIZA. If you’re curious about one of the people who’s been there for a lot of it (and performed no small amount of it himself), check out this profile on Stanford’s Christopher Manning. He was just awarded the John von Neumann Medal; congrats!

In a provocatively titled interview, another long-term AI researcher (who has graced the TechCrunch stage as well), Stuart Russell, and postdoc Michael Cohen speculate on “How to keep AI from killing us all.” Probably a good thing to figure out sooner rather than later! It’s not a superficial discussion, though: these are smart people talking about how we can actually understand the motivations (if that’s the right word) of AI models and how regulations ought to be built around them.

The interview is actually regarding a paper in Science published earlier this month, in which they propose that advanced AIs capable of acting strategically to achieve their goals, which they call “long-term planning agents,” may be impossible to test. Essentially, if a model learns to “understand” the testing it must pass in order to succeed, it may very well learn ways to creatively negate or circumvent that testing. We’ve seen it at a small scale, why not a large one?

Russell proposes restricting the hardware needed to make such agents… but of course, Los Alamos and Sandia National Labs just got their deliveries. LANL just had the ribbon-cutting ceremony for Venado, a new supercomputer intended for AI research, composed of 2,560 Grace Hopper Nvidia chips.

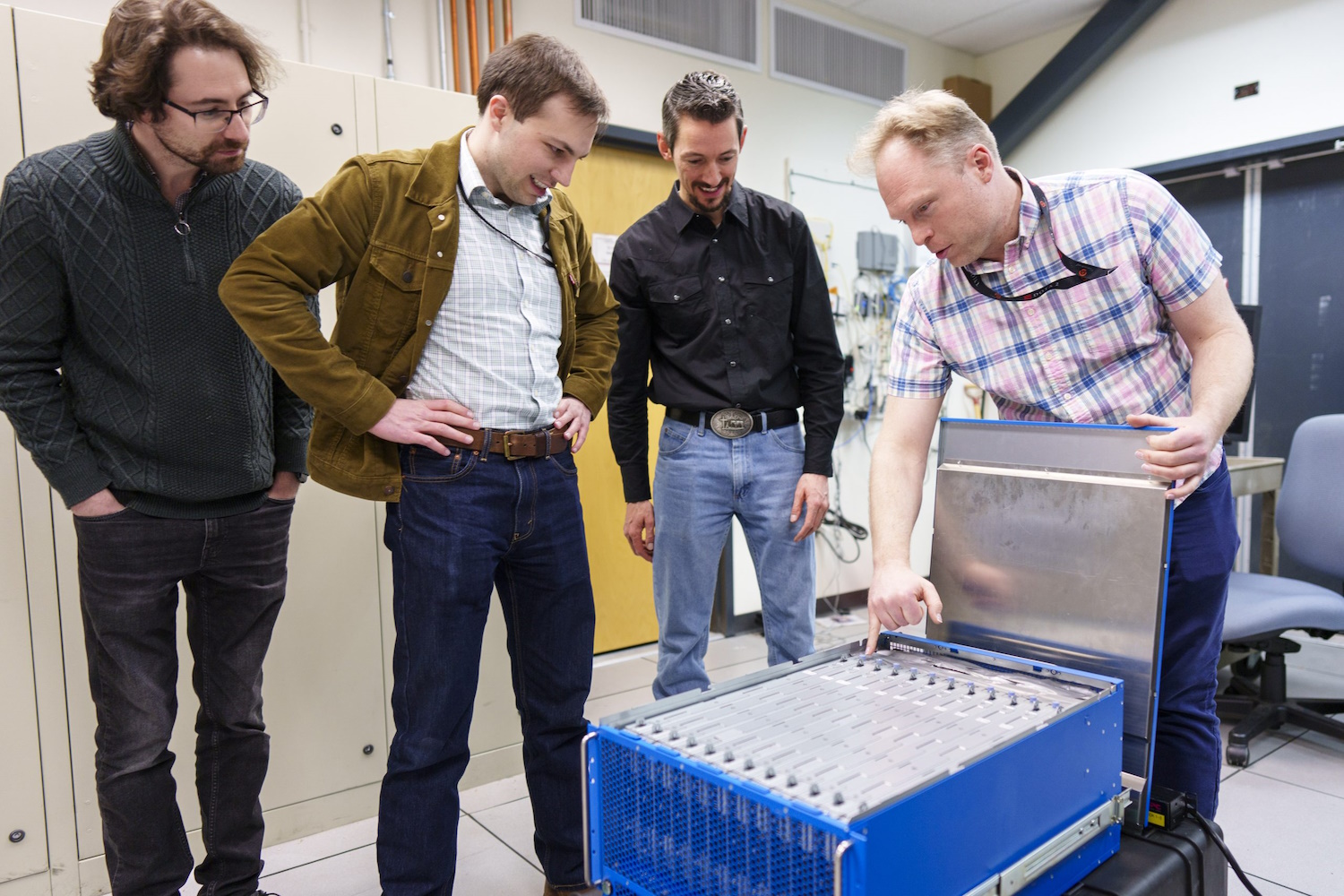

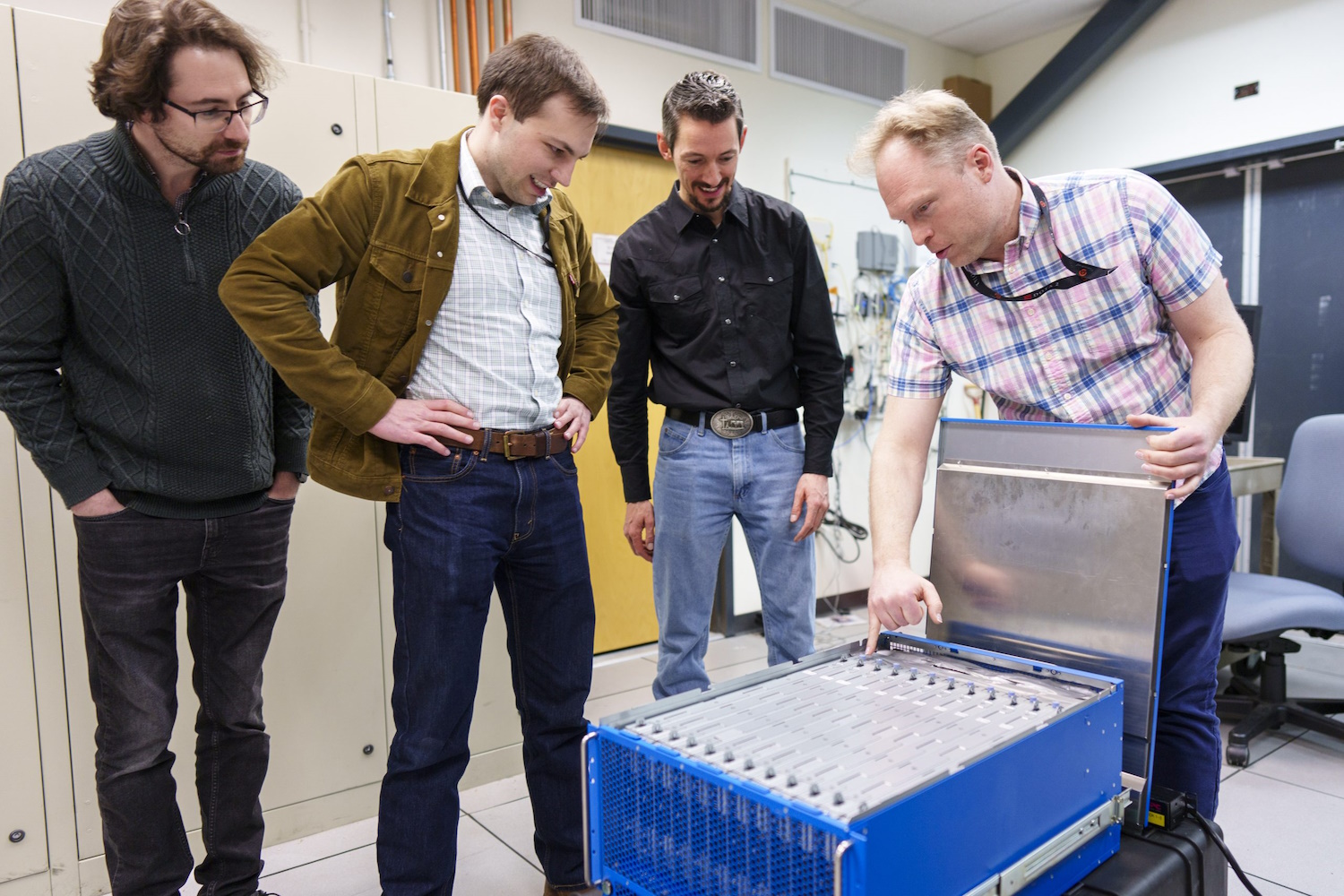

Researchers look into the new neuromorphic computer.

And Sandia just received “an extraordinary brain-based computing system called Hala Point,” with 1.15 billion artificial neurons, built by Intel and believed to be the largest such system in the world. Neuromorphic computing, as it’s called, isn’t intended to replace systems like Venado, but to pursue new methods of computation that are more brain-like than the rather statistics-focused approach we see in modern models.

“With this billion-neuron system, we will have an opportunity to innovate at scale both new AI algorithms that may be more efficient and smarter than existing algorithms, and new brain-like approaches to existing computer algorithms such as optimization and modeling,” said Sandia researcher Brad Aimone. Sounds dandy… just dandy!