Seeing more junk recommendations in your “For You” feed on Threads?

You’re not alone. According to Instagram chief Adam Mosseri, this has become a problem for the app, and the Threads team is working to fix it.

As outlined by Mosseri, more Threads users have been shown more borderline content in the app, a problem the team is working to fix as it continues to improve the six-month-old platform.

The borderline content issue isn’t a new one for social apps, though.

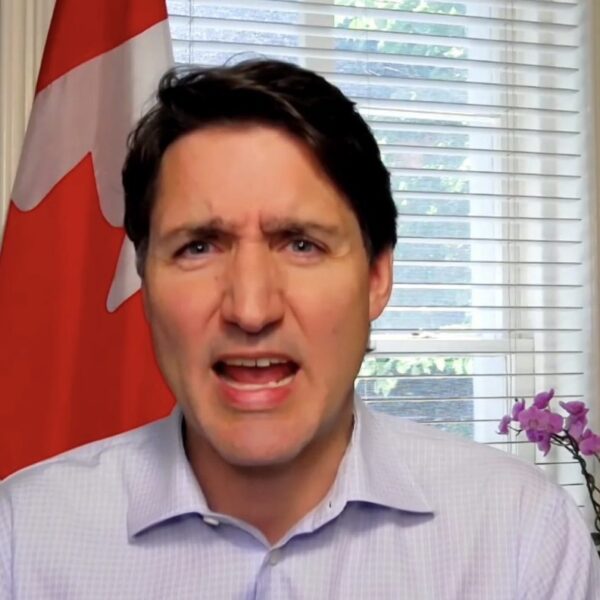

Back in 2018, Meta chief Mark Zuckerberg provided a broad overview of the ongoing issues with content consumption, and how controversial content inevitably gains more traction.

As per Zuckerberg:

“One of the biggest issues social networks face is that, when left unchecked, people will engage disproportionately with more sensationalist and provocative content. This is not a new phenomenon. It is widespread on cable news today and has been a staple of tabloids for more than a century. At scale it can undermine the quality of public discourse and lead to polarization. In our case, it can also degrade the quality of our services.”

Zuckerberg further noted that this is a difficult challenge to solve, because “no matter where we draw the lines for what is allowed, as a piece of content gets close to that line, people will engage with it more on average – even when they tell us afterwards they don’t like the content.”

It seems that Threads is now falling into the same trap, possibly due to its rapid growth, possibly due to the real-time refinement of its systems. But this is how all social networks evolve, with controversial content getting a bigger push, because that’s actually what a lot of people are going to engage with.

Though you’d have hoped that Meta would have a better system in place to deal with this, after working on platform algorithms for longer than anyone.

In his 2018 overview, Zuckerberg identified de-amplification as the best way to address this element.

“This is a basic incentive problem that we can address by penalizing borderline content so it gets less distribution and engagement. [That means that] distribution declines as content gets more sensational, and people are therefore disincentivized from creating provocative content that is as close to the line as possible.”
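To make the idea concrete, here is a minimal sketch of what de-amplification could look like in a ranking step. It is purely illustrative, not Meta's actual system: the `Post` fields, the `borderline` classifier score, the `threshold`, and the `strength` of the penalty are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    engagement: float  # hypothetical predicted engagement, 0..1
    borderline: float  # hypothetical score: 0 = clearly fine, 1 = at the policy line


def deamplified_score(post: Post, threshold: float = 0.5, strength: float = 2.0) -> float:
    """Scale a post's ranking score down as it gets closer to the policy line."""
    if post.borderline <= threshold:
        # Content well away from the line is ranked on engagement alone.
        return post.engagement
    # How far the post sits between the threshold and the line, clamped to 0..1.
    closeness = min(1.0, (post.borderline - threshold) / (1.0 - threshold))
    # The penalty shrinks toward zero as the post approaches the line.
    penalty = (1.0 - closeness) ** strength
    return post.engagement * penalty


def rank(posts: list[Post]) -> list[Post]:
    """Order posts by their de-amplified score, highest first."""
    return sorted(posts, key=deamplified_score, reverse=True)
```

In this sketch, a highly engaging post that sits close to the line can end up ranked below a less engaging but uncontroversial one, which is the incentive shift Zuckerberg describes.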

In theory, this may work, but evidently, that hasn’t been the case on Threads, which is still trying to work out how to provide the optimal user experience, which means showing users the most engaging, interesting content.

It’s a difficult balance, because as Zuckerberg notes, users will often engage with this type of material even if they say they don’t like it. That means it’s often a process of trial and error: showing users more borderline content to see how they react, then reducing it, almost on a user-by-user basis.

Essentially, this isn’t a simple problem to solve on a broad scale, but the Threads team is working to improve the algorithm to highlight more relevant, less controversial content, while also maximizing retention and engagement.

My guess is that the rise in this content has been a bit of a test to see if that’s what more people want, while Threads has also been dealing with an influx of new users who are testing the algorithm to find out what works. But now, the team is working to correct the balance.

So if you’re seeing more junk, this is why, and you should now, according to Mosseri, be seeing less.