Hello and welcome to Eye on AI. In this edition…A new OpenAI benchmark shows how good models are getting at completing professional tasks…California has a new AI law…OpenAI rolls out Instant Purchases in ChatGPT…and AI can pick winning founders better than most VCs.

Google CEO Sundar Pichai was right when he said that while AI companies aspire to create AGI (artificial general intelligence), what we have right now is more like AJI—artificial jagged intelligence. What Pichai meant by this is that today’s AI is brilliant at some things, including some tasks that even human experts find difficult, while also performing poorly at some tasks that a human would find relatively easy.

Thinking of AI in this way partly explains the confusing set of headlines we’ve seen about AI lately: models acing international math and coding competitions, even as many AI projects fail to achieve a return on investment and people complain about AI-created “workslop” being a drag on productivity. (More on some of these pessimistic studies later. Needless to say, there is often a lot less to these headlines than meets the eye.)

One of the reasons for the seeming disparity in AI’s capabilities is that many AI benchmarks do not reflect real-world use cases. That is why a new gauge published by OpenAI last week is so important. Called GDPval, the benchmark evaluates leading AI models on real-world tasks curated by experts from 44 different professions, representing nine different sectors of the economy. The experts had an average of 14 years of experience in their fields, which ranged from law and finance to retail and manufacturing, as well as government and healthcare.

Whereas a traditional AI benchmark might test a model’s ability to answer a multiple-choice bar exam question about contract law, for example, the GDPval assessment asks the AI model to craft an entire 3,500-word legal memo assessing the standard of review under Delaware law that a public company’s founder and CEO, who holds majority control, would face if he wanted the public company to acquire a private company that he also owned.

OpenAI tested not only its own models but also those from a number of other leading labs, including Google DeepMind’s Gemini 2.5 Pro, Anthropic’s Claude Opus 4.1, and xAI’s Grok 4. Of these, Claude Opus 4.1 consistently performed best, beating or equaling human expert performance on 47.6% of the total tasks. (Big kudos to OpenAI for intellectual honesty in publishing a study in which its own models were not top of the heap.)

There was a lot of variance between models. Gemini and Grok were often able to complete between a fifth and a third of tasks at or above the standard of human experts. OpenAI’s GPT-5 Thinking fell between Claude Opus 4.1 and Gemini, while OpenAI’s earlier model, GPT-4o, fared the worst of all, completing barely 10% of the tasks to professional standard. According to the researchers, GPT-5 was the best at following a prompt correctly but often failed to format its response properly. Gemini and Grok seemed to have the most trouble following instructions, sometimes failing to provide the requested deliverable and ignoring reference data, but OpenAI did note that “all the models sometimes hallucinated data or miscalculated.”

Big differences across sectors and professions

There was also some variance between economic sectors, with the models performing best on tasks from government, retail, and wholesale trade, and generally worst on tasks from manufacturing.

For some professional tasks, Claude Opus 4.1’s performance was off the charts: it beat or equaled human performance on 81% of the tasks taken from “counter and rental clerks,” 76% of those taken from shipping clerks, 70% of those from software developers, and, intriguingly, 70% of the tasks taken from the work of private investigators and detectives. (Forget Sherlock Holmes, just call Claude!) GPT-5 Thinking beat human experts on 79% of the tasks that sales managers perform and 75% of those that editors perform (gulp!).

On others, human experts won handily. The models were all notably poor at performing tasks related to the work of film and video editors, producers and directors, and audio and video technicians. So Hollywood may be breathing a sigh of relief. The models also fell down on tasks related to pharmacists’ jobs.

When AI models failed to equal or exceed human performance, it was rarely in ways that human experts judged “catastrophic”: that happened in only about 2.7% of GPT-5’s failures. But GPT-5’s response was judged “bad” in another 26.7% of these cases, and “acceptable but subpar” in 47.7% of the cases where human outputs were deemed superior.

The need for ‘Centaur’ benchmarks

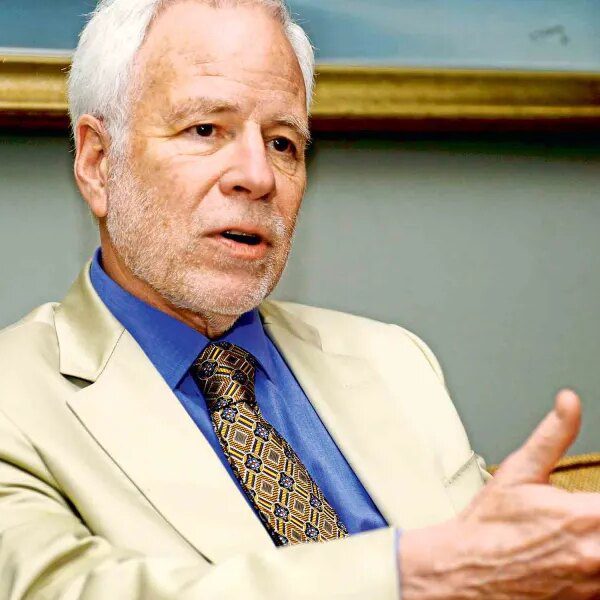

I asked Erik Brynjolfsson, the Stanford University economist at the Institute for Human-Centered AI (HAI) who has done some of the best research to date on the economic impact of generative AI, what he thought of GDPval and its results. He said the assessment goes a long way toward closing the gap between the benchmarks AI researchers prefer, which are often highly technical, and the problems people actually face in the real world. Brynjolfsson said he thought GDPval would “inspire AI researchers to think more about how to design their systems to be useful in doing practical work, not just ace the technical benchmarks.” He also said that “in practice, that means integrating technology into workflows and more often than not, actively involving humans.”

Brynjolfsson said he and colleague Andy Haupt had been arguing for “Centaur Evaluations,” which judge how well humans perform when paired with, and assisted by, an AI model, rather than always treating the AI model as a replacement for human workers. (The term comes from “centaur chess,” in which human players are assisted by chess computers, a pairing that was found to exceed what either humans or machines could do alone. The centaur, of course, is the half-man, half-horse of Greek mythology.)

GDPval did take some steps in this direction. In one case, it looked at how much time and money was saved when OpenAI’s models were allowed to attempt a task multiple times, with a human expert then stepping in to fix the output if it was not up to standard. Here, GPT-5 was found to offer both a 1.5x speedup and a 1.5x cost improvement over the human expert working without AI assistance. (Less capable OpenAI models did not help as much, with GPT-4o actually leading to a slowdown and a cost increase compared with the human expert working unassisted.)

About that AI workslop research…

This last point, along with the “acceptable but subpar” label that characterized a good portion of the cases where the AI models did not equal human performance, brings me back to that “workslop” research that came out last week. Workslop may, in fact, be what is happening with some AI outputs in corporate settings, especially since the most capable models, such as GPT-5, Claude Opus 4.1, and Gemini 2.5 Pro, are being used at scale by only a handful of companies. That said, as the journalist Adam Davidson pointed out in a LinkedIn post, the “workslop” study, just like that now-infamous MIT study about 95% of AI pilots failing to produce ROI, had some very serious flaws. The “workslop” study was based on an open online survey that asked highly leading questions. It was essentially a “push poll,” designed more to generate an attention-grabbing headline about the problem of AI workslop than to produce intellectually honest research. But it worked: it got lots of headlines, including in Fortune.

If one focuses on these kinds of headlines, it is all too easy to miss the other side of what is happening in AI, which is the story that GDPval tells: the best-performing AI models are already on par with human expertise on many tasks. (And remember that GDPval has so far been run only on Anthropic’s Claude Opus 4.1, not on its new Claude Sonnet 4.5, which was released yesterday and which Anthropic says can work continuously on a task for up to 30 hours, far longer than any previous model.) This doesn’t mean AI can replace these professional experts any time soon. As Brynjolfsson’s work has shown, most jobs consist of dozens of different tasks, and AI can equal or beat human performance on only some of them. In many cases, a human needs to be in the loop to correct the outputs when a model fails, which, as GDPval shows, still happens at least 20% of the time, even on the professional tasks where the models perform best. But AI is making inroads, sometimes rapidly, in many domains, and more and more of its outputs are not just workslop.

With that, here’s more AI news.

Jeremy Kahn

@jeremyakahn

Before we get to the news, I want to call your attention to the Fortune AIQ 50, a new ranking published by Fortune today that evaluates how Fortune 500 companies are doing in deploying AI. The ranking shows which companies, across 18 different sectors ranging from financials to healthcare to retail, are doing best when it comes to AI, as judged by both self-assessments and peer reviews. You can see the list here, and catch up on Fortune’s ongoing AIQ series.

FORTUNE ON AI

OpenAI rolls out ‘instant’ purchases directly from ChatGPT, in a radical shift to e-commerce and a direct challenge to Google—by Jeremy Kahn

Anthropic releases Claude Sonnet 4.5, a model it says can build software and accomplish business tasks autonomously—by Beatrice Nolan

Nvidia’s $100 billion OpenAI investment raises eyebrows and a key question: How much of the AI boom is just Nvidia’s cash being recycled?—by Jeremy Kahn

Ford CEO warns there’s a dearth of blue-collar workers able to construct AI data centers and operate factories: ‘Nothing to backfill the ambition’—by Sasha Rogelberg

EYE ON AI NEWS

Meta locks in $14 billion worth of AI compute. The tech giant struck a $14 billion multi-year deal with CoreWeave to secure access to Nvidia GPUs (including next-gen GB300 systems). It’s another sign of Big Tech’s arms race for AI capacity. The pact follows CoreWeave’s recent expansion tied to OpenAI and sent CoreWeave shares up. Read more from Reuters here.

California governor signs landmark AI law. Governor Gavin Newsom signed SB 53 into law on Monday. The new legislation requires developers of the most powerful AI systems to publicly disclose their safety plans and report serious incidents. It also adds whistleblower protections for employees of AI companies and creates a public “CalCompute” cloud to broaden research access to AI. Large labs must outline how they mitigate catastrophic risks, with penalties for non-compliance. The measure, authored by State Senator Scott Wiener, follows last year’s veto of a stricter bill, SB 1047, that was roundly opposed by Silicon Valley heavyweights and AI companies. This time, some AI companies, such as Anthropic, as well as Elon Musk, supported SB 53, while Meta, Google and OpenAI opposed it. Read more from Reuters here.

OpenAI’s revenue surges, but its burn rate remains dramatic. The AI company generated about $4.3 billion in revenue in the first half of 2025, 16% more than it took in during all of 2024, according to financial details it disclosed to investors that were reported by The Information. But the company still burned through $2.5 billion in cash over that same period due to aggressive spending on R&D and AI infrastructure. The company said it is targeting about $13 billion in revenue for 2025, with a total cash burn of $8.5 billion. OpenAI is in the middle of a secondary share sale that could value the company at $500 billion, almost double its valuation of $260 billion at the start of the year.

Apple is testing a stronger, still-secret model for Apple Intelligence. That’s according to a report from Bloomberg, which cited unnamed sources it said were familiar with the matter. The news agency said Apple is internally trialing a ChatGPT-style app powered by an upgraded AI model, with the aim of using it to overhaul its digital assistant Siri. The new chatbot would be rolled out as part of upcoming Apple Intelligence updates, Bloomberg said.

Opera launches Neon, an “agentic” AI browser. In a further sign that AI has rekindled the browser wars, Opera rolled out Neon, a browser with built-in AI that can execute multi-step tasks (think booking travel or generating code) from natural-language prompts. Opera is charging a subscription for Neon. It joins Perplexity’s Comet and Google’s rollout of Gemini in Chrome in the increasingly competitive field of AI browsers. Read more from TechCrunch here.

Black Forest Labs in talks to raise $200 million to $300 million at a $4 billion valuation. That’s according to a story in the Financial Times, which says the somewhat secretive German image-generation startup (maker of the Flux models, founded by former Stability AI researchers who helped create Stable Diffusion) is negotiating a new venture capital round that would value the company at around $4 billion, up from roughly $1 billion last year. The round would be one of Europe’s largest recent AI financings and underscores investor appetite for next-generation visual models.

EYE ON AI RESEARCH

Can an AI model beat VCs at spotting winning startups? Yes, it can, according to a new study conducted by researchers from the University of Oxford and AI startup Vela Research. They created a new assessment, which they call VCBench, built from 9,000 anonymized founder profiles, to evaluate whether LLMs can predict startup success better than human investors. (Of these 9,000 founders, 9% went on to see their companies either get acquired, raise more than $500 million in funding, or IPO at more than a $500 million valuation.) In their tests, some models far outperformed the track record of venture capital firms, which in general pick a winner in about one of every 20 bets they make. OpenAI’s GPT-5 picked a winner about half the time, while DeepSeek-V3 was the most accurate of the LLMs, selecting winners six out of every 10 times, and doing so at a lower cost than most other models. Interestingly, a different machine learning technique from Vela, called reasoned rule mining, was more accurate still, hitting a winner 87.5% of the time. (The researchers also tried to make sure the LLMs were not simply finding a clever way to re-identify the founders behind the anonymized profiles and cheating by looking up what had happened to their companies. They say they reduced that risk to the point where such cheating is unlikely.) The researchers are publishing a public leaderboard at vcbench.com. You can read more about the research on arXiv here and in the Financial Times here.
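It can help to read these hit rates as precision: of the founders a picker flags as likely winners, what share actually succeeded. Because 9% of the founders in VCBench succeeded, a picker choosing at random would be right roughly 9% of the time on this dataset. The short Python sketch below is an illustration only, not the researchers’ scoring method; it uses just the approximate rates quoted above and compares each with that 9% base rate. The helper names `precision` and `lift` are hypothetical, and the VC figure comes from real-world investing rather than from VCBench itself, so that comparison is only indicative.

```python
# A rough comparison of the hit rates quoted above with VCBench's base rate.
# Assumption: "hit rate" means precision, i.e. the share of a picker's
# predicted winners that actually went on to succeed.

BASE_RATE = 0.09  # share of the 9,000 VCBench founders whose companies succeeded

# Approximate hit rates as reported in the text above.
hit_rates = {
    "typical VC firm (real-world, not VCBench)": 1 / 20,
    "GPT-5": 0.50,
    "DeepSeek-V3": 0.60,
    "Vela reasoned rule mining": 0.875,
}


def precision(true_positives: int, false_positives: int) -> float:
    """Share of predicted winners that really were winners (0.0 if none predicted)."""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def lift(hit_rate: float, base_rate: float = BASE_RATE) -> float:
    """How many times better than random picking a given hit rate is."""
    return hit_rate / base_rate


if __name__ == "__main__":
    # Hypothetical counts, chosen only to illustrate the formula:
    # a picker flags 20 founders and 12 of them succeed.
    print(f"example precision: {precision(12, 8):.0%}")
    for name, rate in hit_rates.items():
        print(f"{name}: {rate:.1%} hit rate, {lift(rate):.1f}x the 9% base rate")
```

Running it prints each hit rate alongside its multiple of the base rate; GPT-5’s 50%, for instance, works out to roughly 5.6 times what random picking would achieve on this dataset.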

AI CALENDAR

Oct. 6: OpenAI DevDay, San Francisco

Oct. 6-10: World AI Week, Amsterdam

Oct. 21-22: TEDAI San Francisco

Nov. 10-13: Web Summit, Lisbon

Nov. 26-27: World AI Congress, London

Dec. 2-7: NeurIPS, San Diego

Dec. 8-9: Fortune Brainstorm AI San Francisco. Apply to attend here.

BRAIN FOOD

Are world models and reinforcement learning all we need? There was a big controversy among AI researchers and other industry insiders this past week over Turing Award winner and AI research legend Rich Sutton’s appearance on the Dwarkesh Podcast. Sutton argued that LLMs are a dead end that will never achieve AGI because they can only ever imitate human knowledge and don’t construct a “world model,” a way of predicting what will happen next based on an intuitive understanding of things such as the laws of physics or even human nature. Dwarkesh pushed back, suggesting to Sutton that LLMs do, in fact, have a kind of world model, but Sutton was having none of it.

Some, such as AI skeptic Gary Marcus, interpreted Sutton’s remarks on Dwarkesh as a major reversal from the position he had taken in a famous 2019 essay, “The Bitter Lesson,” which argued that progress in AI mostly depended on taking the same basic algorithms and simply throwing more compute and more data at them, rather than on clever algorithmic innovation. “The Bitter Lesson” has been waved like a banner by those who have argued that “scale is all we need” (building ever bigger LLMs on ever larger GPU clusters) to achieve AGI.

But Sutton never wrote explicitly about LLMs in “The Bitter Lesson,” and I don’t think his Dwarkesh remarks should be interpreted as a departure from his position. Instead, Sutton has always been first and foremost an advocate of reinforcement learning in environments where the reward signal comes entirely from the environment, with an AI model acting agentically and acquiring experience, building a model of “the rules of the game” as well as of the most rewarding actions in any given situation. Sutton doesn’t like the way LLMs are trained, with self-supervised learning from human text followed by a kind of RL using human feedback, because everything the LLM can learn is inherently limited by human knowledge and human preferences. He has always been an advocate of pure tabula rasa learning. To Sutton, LLMs are a big departure from tabula rasa, so it is not surprising that he sees them as a dead end on the road to AGI.