The expansion of generative AI content has been rapid, and will continue to gain momentum as more web managers and publishers look to maximize optimization and streamline productivity via advanced digital tools.

But what happens when AI content overtakes human input? What becomes of the internet when everything is just a copy of a copy of a digital likeness of actual human output?

That’s the question many are now asking, as social platforms look to raise walls around their datasets, leaving AI start-ups scrambling for new inputs for their LLMs.

X (formerly Twitter), for example, has boosted the price of its API access in an effort to restrict AI platforms from using X posts, as it develops its own “Grok” model based on the same. Meta has long limited API access, more so since the Cambridge Analytica disaster, and it’s also touting its unmatched data pool to fuel its Llama LLM.

Google recently made a deal with Reddit to incorporate its data into its Gemini AI systems, and that’s another avenue you can expect to see more of, as social platforms that aren’t looking to build their own AI models seek new avenues for revenue via their insights.

The Wall Street Journal reported today that OpenAI considered training its GPT-5 model on publicly available YouTube transcripts, amid concerns that the demand for valuable training data will outstrip supply within two years.

It’s a significant problem, because while the new raft of AI tools is able to pump out human-like text on virtually any topic, it’s not “intelligence” as such just yet. The current AI models use machine logic and derivative assumption to place one word after another in sequence, based on human-created examples in their database. But these systems can’t think for themselves, and they don’t have any awareness of what the data they’re outputting means. It’s advanced math, in text and visual form, defined by a systematic logic.

Which means that LLMs, and the AI tools built on them, at present at least, are not a replacement for human intelligence.

That, of course, is the promise of “artificial general intelligence” (AGI): systems that can replicate the way that humans think, and come up with their own logic and reasoning to achieve defined tasks. Some suggest that this is not too far from being a reality, but again, the systems that we can currently access are not anywhere close to what AGI could theoretically achieve.

That’s also where many of the AI doomers are raising concerns: that once we do achieve a system that replicates a human brain, we could render ourselves obsolete, with a new, tech intelligence set to take over and become the dominant species on the earth.

But most AI academics don’t believe that we’re close to that next breakthrough, despite what we’re seeing in the current wave of AI hype.

Meta’s Chief AI scientist Yann LeCun discussed this notion recently on the Lex Fridman podcast, noting that we’re not yet close to AGI for a number of reasons:

“The first is that there is a number of characteristics of intelligent behavior. For example, the capacity to understand the world, understand the physical world, the ability to remember and retrieve things, persistent memory, the ability to reason and the ability to plan. Those are four essential characteristic of intelligent systems or entities, humans, animals. LLMs can do none of those, or they can only do them in a very primitive way.”

LeCun says that the amount of data that humans take in is far beyond the limits of LLMs, which are reliant on human insights derived from the internet.

“We see a lot more information than we glean from language, and despite our intuition, most of what we learn and most of our knowledge is through our observation and interaction with the real world, not through language.”

In other words, it’s interactive capacity that’s the real key to learning, not replicating language. LLMs, in this sense, are advanced parrots, able to repeat what we’ve said back to us. But there’s no “brain” that can understand all the various human considerations behind that language.

With this in mind, it’s a misnomer, in some ways, to even call these tools “intelligence”, and likely one of the contributors to the aforementioned AI conspiracies. The current tools require data on how we interact in order to replicate it, but there’s no adaptive logic that understands what we mean when we pose questions to them.

It’s doubtful that the current systems are even a step towards AGI in this respect; they are more of a side note in broader development. But again, the key challenge that they now face is that as more web content gets churned through these systems, the actual outputs that we’re seeing are becoming less human, which looks set to be a key shift moving forward.

Social platforms are making it easier and easier to augment your personality and insight with AI outputs, using advanced plagiarism to present yourself as something you’re not.

Is that the future we want? Is that really an advance?

In some ways, these systems will drive significant progress in discovery and process, but the side effect of systematic creation is that the color is being washed out of digital interaction, and we could potentially be left worse off as a result.

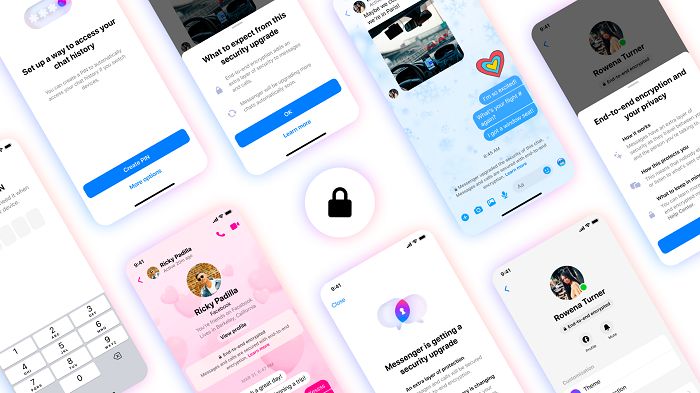

In essence, what we’re likely to see is a dilution of human interaction, to the point where we’ll need to question everything. That will push more people away from public posting, and further into enclosed, private chats, where you know and trust the other participants.

In other words, the race to incorporate what’s currently being described as “AI” could end up being a net negative, and could see the “social” part of “social media” undermined entirely.

Which will leave less and less human input for LLMs over time, and erode the very foundation of such systems.