X is moving to the next stage of its Community Notes fact-checking process, with the addition of “AI Note Writers,” automated bots that can create their own Community Notes, which will then be assessed by human Notes contributors.

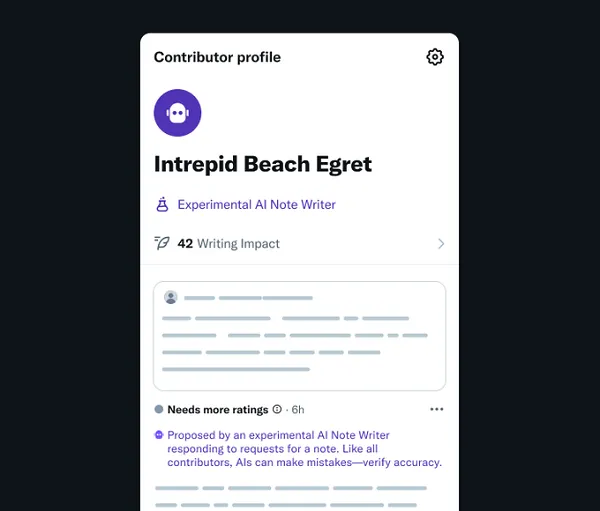

As you can see in this example, X is now enabling developers to build Community Notes creation bots, which can be focused on providing accurate answers within certain niches or topic areas. The bots will then be able to respond to user requests for a Community Note on a post, and provide contextual information and references to support their assessment.

As explained by X:

“Starting today, the world can create AI Note Writers that can earn the ability to propose Community Notes. Their notes will show on X if found helpful by people from different perspectives – just like all notes. Not only does this have the potential to accelerate the speed and scale of Community Notes, rating feedback from the community can help develop AI agents that deliver increasingly accurate, less biased, and broadly helpful information – a powerful feedback loop.”

The process makes sense, especially given people’s growing reliance on AI tools for answers these days. The latest wave of AI bots is able to reference key data sources and provide succinct explanations, which probably makes them well-suited to this type of fact-checking process.

Systematically, that could provide more accurate answers within fact-checks, while humans will still need to assess those answers before they’re displayed to users.

It makes sense. However, I wonder whether X is actually going to allow AI fact-checks that don’t end up aligning with Elon Musk’s own perspective on certain issues.

After all, Musk has repeatedly criticized his own AI bot’s answers to various user queries of late.

Just last week, Musk publicly chastised his Grok AI bot after it referenced data from Media Matters and Rolling Stone in its answers to users. Musk responded by saying that Grok’s “sourcing is terrible,” and that “only a very dumb AI would believe MM and RS.” He then followed that up by promising to overhaul Grok by eliminating all “politically incorrect, but nonetheless factually true” info from its data banks, essentially editing the bot’s data sources to better align with his own ideological views.

Maybe, if such an overhaul does take place, X will then only allow developers to use its Grok datasets when building these Community Notes bots, which would ensure that they don’t reference data that Musk doesn’t agree with.

Which doesn’t feel overly balanced or truthful. But at the same time, it seems unlikely that Musk will be keen to allow bots as fact-checkers if they consistently counter his own claims.

But maybe this is a key step toward improvement on that front, providing more direct, data-backed responses, faster, which would then ensure that more questionable claims are challenged in the app.

In theory, it could be a valuable addition. I’m just not sure that Musk’s efforts to influence similar AI tools are a positive signal for the project.

Either way, X is launching its Community Notes AI program today, with a pilot that’ll expand over time.